Avegant, makers of the Glyph personal media HMD, are turning their attention to the AR space with what they say is a newly developed light field display for augmented reality which can display multiple objects at different focal planes simultaneously.

Most of today’s AR and VR headsets suffer from something called the vergence-accommodation conflict. In short, it’s an issue of biology and display technology, whereby a screen that’s just inches from our eye sends all light into our eyes at the same angle (whereas normally the angle changes based on how far away an object is), causing the lens in our eye to focus (called accommodation) on light from only that one distance. This comes into conflict with vergence, which is the relative angle between our eyes when they rotate to focus on the same object. In real life and in VR, this angle is dynamic, and normally accommodation happens in our eye automatically at the same time, except in most AR and VR displays today, it can’t because of the static angle of the incoming light.

For more detail, check out this primer:

Accommodation

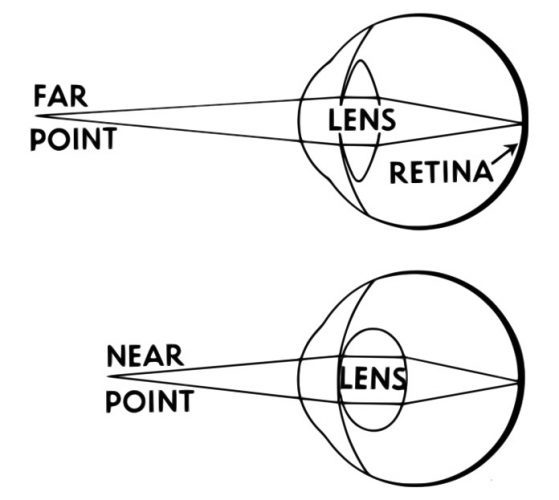

In the real world, to focus on a near object, the lens of your eye bends to focus the light from that object onto your retina, giving you a sharp view of the object. For an object that’s further away, the light is traveling at different angles into your eye and the lens again must bend to ensure the light is focused onto your retina. This is why, if you close one eye and focus on your finger a few inches from your face, the world behind your finger is blurry. Conversely, if you focus on the world behind your finger, your finger becomes blurry. This is called accommodation.

Vergence

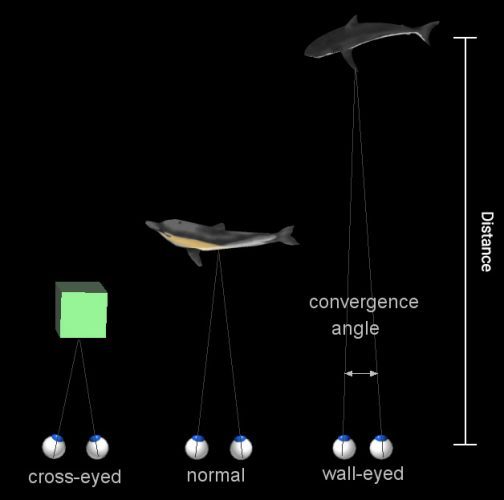

Then there’s vergence, which is when each of your eyes rotates inward to ‘converge’ the separate views from each eye into one overlapping image. For very distant objects, your eyes are nearly parallel, because the distance between them is so small in comparison to the distance of the object (meaning each eye sees a nearly identical portion of the object). For very near objects, your eyes must rotate sharply inward to converge the image. You can see this too with our little finger trick as above; this time, using both eyes, hold your finger a few inches from your face and look at it. Notice that you see double-images of objects far behind your finger. When you then look at those objects behind your finger, now you see a double finger image.
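To put rough numbers on that geometry, here is a minimal sketch. It assumes the object sits straight ahead of the viewer and that the eyes are 63 mm apart (a typical adult figure chosen for illustration, not a value from this article); the function name is hypothetical.

```python
import math

def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two lines of sight, in degrees.

    Assumes the object is centered between the eyes. ipd_m is the
    distance between the pupils (0.063 m is an assumed typical value).
    """
    # Each eye rotates inward by atan((ipd / 2) / distance); the vergence
    # angle is the sum of both rotations.
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))

# A finger a few inches away versus objects progressively further off.
for distance_m in (0.1, 1.0, 10.0, 1000.0):
    angle = vergence_angle_deg(distance_m)
    print(f"{distance_m:>7} m -> {angle:.3f} degrees")
```

The angle is large for the nearby finger and shrinks toward zero as distance grows, which is the "nearly parallel" case for very distant objects.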

The Conflict

With precise enough instruments, you could use either vergence or accommodation to know exactly how far away an object is that a person is looking at. But the thing is, both accommodation and vergence happen in your eye together, automatically. And they don’t just happen at the same time; there’s a direct correlation between vergence and accommodation, such that for any given measurement of vergence, there’s a directly corresponding level of accommodation (and vice versa). Since you were a little baby, your brain and eyes have formed muscle memory to make these two things happen together, without thinking, any time you look at anything.

But when it comes to most of today’s AR and VR headsets, vergence and accommodation are out of sync due to inherent limitations of the optical design.

In a basic AR or VR headset, there’s a display (which is, let’s say, 3″ away from your eye) which shows the virtual scene and a lens which focuses the light from the display onto your eye (just like the lens in your eye would normally focus the light from the world onto your retina). But since the display is a static distance from your eye, the light coming from all objects shown on that display is coming from the same distance. So even if there’s a virtual mountain five miles away and a coffee cup on a table five inches away, the light from both objects enters the eye at the same angle (which means your accommodation—the bending of the lens in your eye—never changes).

That comes into conflict with vergence in such headsets which, because we can show a different image to each eye, is variable. Being able to adjust the image independently for each eye, such that our eyes need to converge on objects at different depths, is essentially what gives today’s AR and VR headsets stereoscopy. But the most realistic (and arguably, most comfortable) display we could create would eliminate the vergence-accommodation issue and let the two work in sync, just like we’re used to in the real world.
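The size of the mismatch can be sketched with a little arithmetic. Focus demand is commonly expressed in diopters, the reciprocal of distance in meters. The example below reuses the mountain and coffee cup from above and assumes, purely for illustration, a headset whose optics place everything at a fixed focal distance of 2 m; that figure and the function names are assumptions, not specifications of any real headset.

```python
METERS_PER_MILE = 1609.344
METERS_PER_INCH = 0.0254

def diopters(distance_m):
    """Focus demand in diopters (1/m) for an object at the given distance."""
    return 1.0 / distance_m

def focus_mismatch(virtual_distance_m, display_focus_m):
    """Gap, in diopters, between where the eyes converge (the virtual
    object's distance) and where they must focus (the display's fixed
    focal distance)."""
    return abs(diopters(virtual_distance_m) - diopters(display_focus_m))

DISPLAY_FOCUS_M = 2.0  # assumed fixed focal distance, for illustration only

mountain = focus_mismatch(5 * METERS_PER_MILE, DISPLAY_FOCUS_M)
coffee_cup = focus_mismatch(5 * METERS_PER_INCH, DISPLAY_FOCUS_M)
print(f"mountain: {mountain:.2f} D, coffee cup: {coffee_cup:.2f} D")
```

Under these assumptions the distant mountain is off by roughly half a diopter, while the nearby coffee cup is off by more than seven, which suggests why close-up virtual objects are where the conflict is felt most.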

Solving the vergence-accommodation conflict requires being able to change the angle of the incoming light (same thing as changing the focus). That alone is not such a huge problem; after all, you could just move the display further away from your eyes to change the angle. The big challenge is allowing not just dynamic change in focus, but simultaneous focus: just like in the real world, you might be looking at a near and far object at the same time, and each has a different focus. Avegant claims its new light field display technology can do both dynamic focal plane adjustment and simultaneous focal plane display.

We’ve seen proof of concept devices before which can show a limited number (three, or so) of discrete focal planes simultaneously, but that means you only have a near, mid, and far focal plane to work with. In real life, objects can exist in an infinite number of focal planes, which means that three is far from enough if we endeavor to make the ideal display.

Avegant CTO Edward Tang tells me that “all digital light fields have [discrete focal planes] as the analog light field gets transformed into a digital format,” but also says that their particular display is able to interpolate between them, offering a “continuous” dynamic focal plane as perceived by the viewer. The company also says that objects can be shown at varying focal planes simultaneously, which is essential for doing anything with the display that involves showing more than one object at a time.
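Avegant has not disclosed how its interpolation works. Purely as an illustration of how a display with a few discrete planes could approximate in-between depths, the sketch below splits an object's brightness between the two nearest planes, weighted linearly in diopters. This is a generic blending idea, not Avegant's method; the plane distances and function name are hypothetical.

```python
def blend_weights(target_m, plane_distances_m):
    """Return one brightness weight per focal plane for an object at target_m.

    Illustrative only: interpolates linearly in diopters between the two
    planes that bracket the target, and clamps to the nearest plane when
    the target lies outside the covered range. Assumes distinct planes.
    """
    target_d = 1.0 / target_m
    planes_d = [1.0 / d for d in plane_distances_m]
    weights = [0.0] * len(planes_d)
    # Plane indices ordered from farthest (fewest diopters) to nearest.
    order = sorted(range(len(planes_d)), key=lambda i: planes_d[i])

    if target_d <= planes_d[order[0]]:
        weights[order[0]] = 1.0   # beyond the farthest plane
        return weights
    if target_d >= planes_d[order[-1]]:
        weights[order[-1]] = 1.0  # closer than the nearest plane
        return weights

    for far_i, near_i in zip(order, order[1:]):
        lo, hi = planes_d[far_i], planes_d[near_i]
        if lo <= target_d <= hi:
            t = (target_d - lo) / (hi - lo)
            weights[far_i] = 1.0 - t
            weights[near_i] = t
            break
    return weights

# Hypothetical near, mid, and far planes at 0.25 m, 1 m, and 4 m.
print(blend_weights(0.5, [0.25, 1.0, 4.0]))
```

With these made-up planes, an object at 0.5 m lands one third of the way (in diopters) from the mid plane to the near plane, so it gets about a third of its brightness on the near plane and two thirds on the mid plane.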

Above: CGI representation of simultaneous display of varying focal planes. Note how the real hand and rover go out of focus together. This is an important part of making augmented objects feel like they really exist in the world.

Avegant hasn’t said how many focal planes can be shown simultaneously, or how many discrete planes there actually are.

From a feature standpoint, this is similar to reports of the unique display that Magic Leap has developed but not yet shown publicly. Avegant’s announcement video of this new tech (heading this article) appears to invoke Magic Leap with solar system imagery which looks very similar to what Magic Leap has teased previously. A number of other companies are also working on displays which solve this issue.

Tang is being tight-lipped on just how the tech works, but tells me that “this is a new optic that we’ve developed that results in a new method to create light fields.”

So far the company is showing off a functioning prototype of their light field display (seen in the video) as well as a proof-of-concept headset that the company says represents the form factor that could eventually be achieved.

We’re hoping to get our hands on the headset soon to see what impact the light field display makes, and to confirm other important information like field of view and resolution.