Google’s I/O 2015 conference last week was packed with technology that has potential uses in VR or AR. The most unusual among them, Project Soli, uses radar technology to detect minute movements and gestures and translate them into input.

Google ATAP (Advanced Technology and Projects), a division of the search and mobile OS giant you may very well not have heard of before, was prominent, to say the least, at 2015’s Google I/O developer conference. From touch-sensitive clothing to SD cards capable of seamless encryption for cross-platform mobile devices, the R&D lab at times gave off a definite mad scientist air during its presentations.

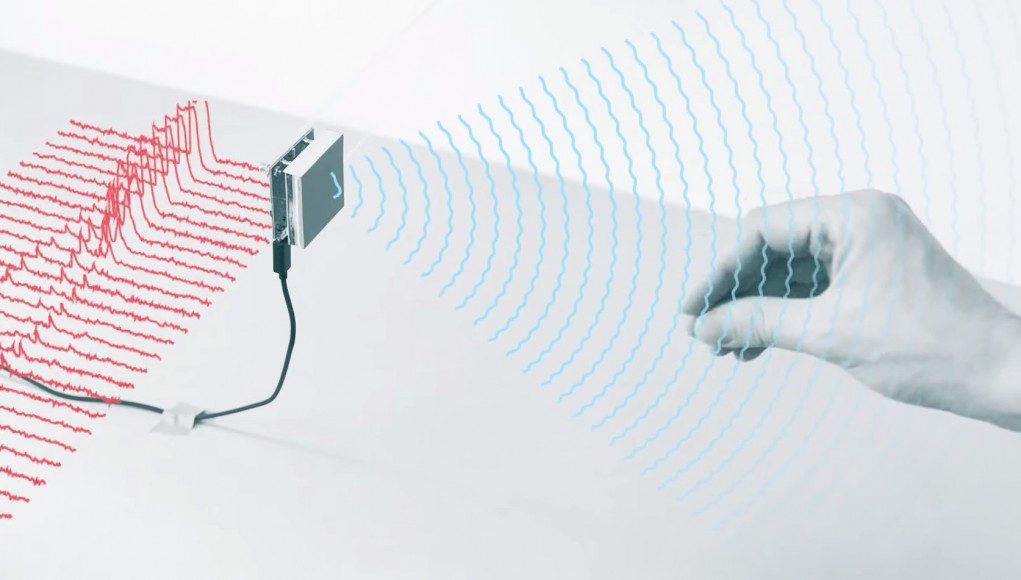

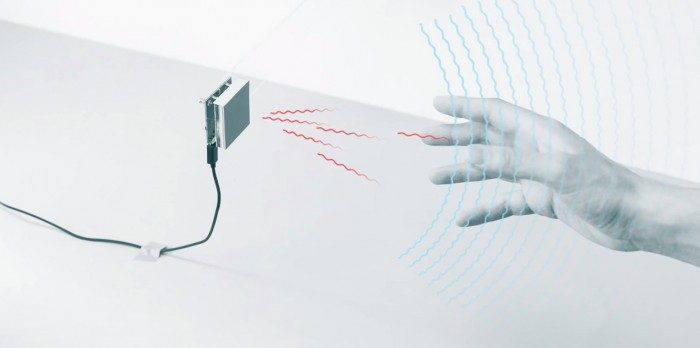

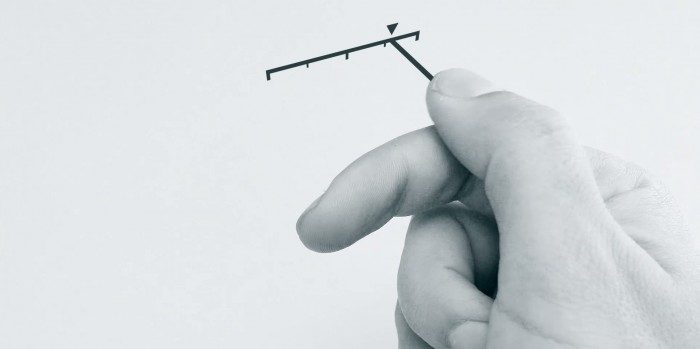

One project in particular, however, might hold specific interest for the virtual reality industry, although its application for VR or AR may not be immediately obvious. Project Soli uses radar (RAdio Detection And Ranging) to detect micro-movements in your hands and fingers. It transmits radio waves and picks up the reflected responses from any target it hits. So far so World War 2, but it’s the gesture recognition pipeline Google have built that makes this interesting for input. The pipeline takes the signals reflected from you, say, rubbing your index finger and thumb together, recognises that as a gesture or action, and redirects that gesture as input to an application.
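To make the idea concrete, here is a deliberately toy sketch of that chain: echo in, gesture out, application callback fired. None of it is Google’s code or API. The function names, the threshold and the "rub"/"swipe" labels are all hypothetical, and a real pipeline would work on far richer signal features than a handful of range samples. The only hard fact in it is the ranging formula: a radar echo travels out and back, so range is the speed of light times the delay, divided by two.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def echo_delay_to_range_m(delay_s):
    """Convert a round-trip echo delay into a one-way range in metres."""
    return SPEED_OF_LIGHT_M_S * delay_s / 2.0


def classify_gesture(ranges_m, motion_threshold_m=0.0005):
    """Hypothetical classifier: label a window of range samples.

    Little movement is "idle"; repeated back-and-forth movement is "rub";
    any other movement is "swipe". The threshold is an assumed value.
    """
    if len(ranges_m) < 2:
        return "idle"
    # Frame-to-frame change in range.
    deltas = [b - a for a, b in zip(ranges_m, ranges_m[1:])]
    mean_motion = sum(abs(d) for d in deltas) / len(deltas)
    if mean_motion < motion_threshold_m:
        return "idle"
    # A sign change between consecutive deltas is a direction reversal.
    reversals = sum(1 for a, b in zip(deltas, deltas[1:]) if a * b < 0)
    return "rub" if reversals >= 2 else "swipe"


def dispatch(gesture, handlers):
    """Pass a recognised gesture to the application's handler, if any."""
    handler = handlers.get(gesture)
    return handler() if handler else None


if __name__ == "__main__":
    window = [0.050, 0.052, 0.050, 0.052, 0.050]  # finger moving back and forth
    gesture = classify_gesture(window)
    print(gesture, dispatch(gesture, {"rub": lambda: "adjust dial"}))
```

The application only ever sees the final gesture label, which is what would let the same sensor feed a phone interface or a VR tool without either needing to know anything about radar.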

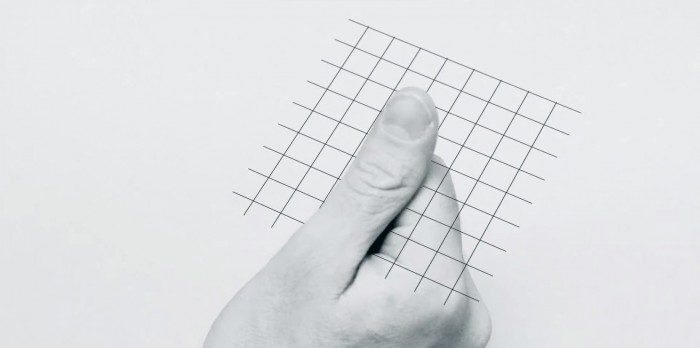

The technology has thus far been developed to the point where the hardware transmitting and receiving these radio waves fits onto a single chip, embeddable into mobile devices or any other type of hardware. And whilst it looks as if Project Soli may not offer the range required for broad VR input, it does hold some interesting, if not immediately apparent, uses for the refinement of control within virtual worlds.

Imagine embedding Soli into a room space tracking system, for example. Mounting a radar-capable sensor on the side of, say, Valve’s Lighthouse controllers would allow minute levels of control for situations when broader hand and arm gestures don’t quite cut it. You may be operating a VR CAD package where precise control is required to adjust the thickness or positioning of a line on your drawing. You’re most of the way there using the Lighthouse controllers, but need to finish with precision. You raise a thumb over the Soli sensor and use your fine motor control to get the result you want before returning to Lighthouse control.

And perhaps there are applications for broader, full body control. At present, though, it’s difficult to see how a wider target (say your upper body) could be accurately and reliably translated to usable gestures within an application; there simply may be too much noise. For the moment it seems that using Soli to augment an existing control system might be the more interesting way to go.

We don’t yet know where or how Google plan to roll out Project Soli tech. It’s certainly true that there are more immediate and obvious applications for the system in the firm’s more traditional market of mobile phone interfaces. However, as each Google developer conference comes and goes, the company is clearly putting more emphasis on looking to the next generation of consumer hardware, beyond the world of plateauing and diminishing phone hardware advances.

Two such areas are virtual and augmented reality, so it’s not a complete flight of fancy to think that Google’s kerrazy ATAP boffins may well find ways to combine their burgeoning suite of adaptable technologies, Project Tango and Cardboard for example, into the next generation of VR- and AR-enabled mobile devices. It’s intriguing to think what we’ll be seeing from ATAP and Google at next year’s conference.
