Google has revealed a new ‘surface light-field’ rendering technology that it’s calling ‘Seurat’ (after the famous pointillist painter Georges Seurat). The company says the tech will not only bring CGI-quality visuals to mobile VR, but will do so at a minuscule file size, a hurdle that other light-field approaches have struggled to surmount.

Today at I/O 2017, Google introduced Seurat, a rendering technology designed to take ultra-high-quality CGI assets that couldn't be run in real time even on the highest-performance desktop hardware, and format them so that they retain their visual fidelity while running on mobile VR hardware. That alone wouldn't be very impressive if we were just talking about 360 videos, but Seurat actually generates sharp, properly rendered geometry. Because the output retains real volumetric data, players can walk around a room-scale space rather than having their head stuck at one static point. It also means developers can composite traditional real-time assets into the scene to create interactive gameplay within these high-fidelity environments.

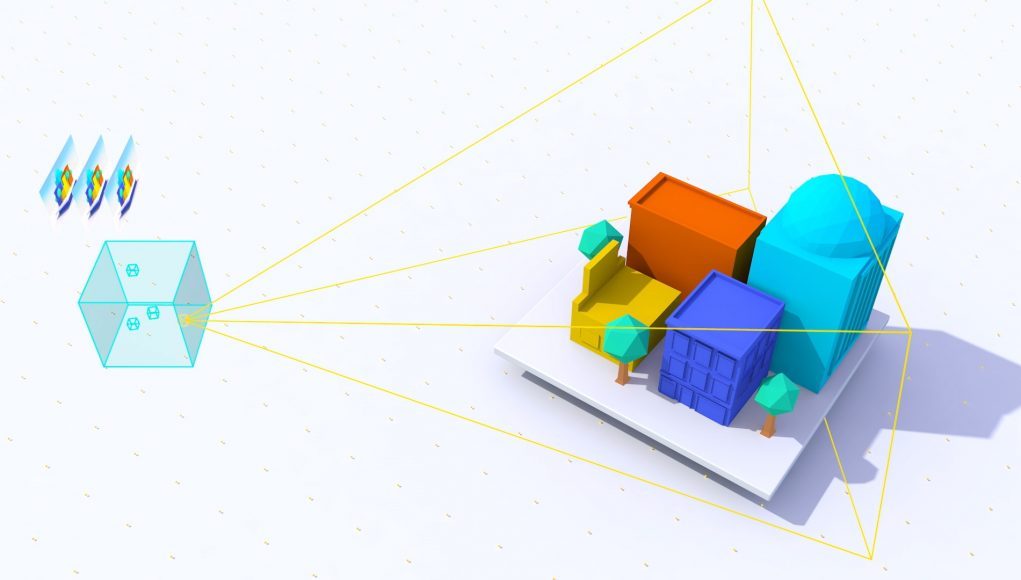

So how does it work? Google says Seurat uses something called surface light-fields, a process that starts with the original ultra-high-quality assets, defines a viewing area for the player, and then samples possible perspectives within that area to determine everything that could be seen from inside it. The high-quality assets are then reduced to a significantly smaller number of polygons, few enough for the scene to run on mobile VR hardware, while keeping the look of the originals, including perspective-correct specular lighting.
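Google hasn't published how Seurat picks its sample perspectives, so the following is only a minimal sketch of the first step described above. It assumes the player's viewing area is an axis-aligned box and that perspectives are sampled on a uniform grid of head positions, each captured as six cube-map faces. `ViewBox`, `sample_viewpoints`, and `render_jobs` are hypothetical names for illustration, not part of any Google tool or API.

```python
from __future__ import annotations

from dataclasses import dataclass
from itertools import product

# Hypothetical: six cube-map face directions, so each sampled position
# "sees" in every direction. Not taken from Seurat itself.
CUBE_FACES = ("+x", "-x", "+y", "-y", "+z", "-z")


@dataclass(frozen=True)
class ViewBox:
    """Hypothetical axis-aligned region (in metres) the player's head stays within."""
    min_corner: tuple[float, float, float]
    max_corner: tuple[float, float, float]

    def contains(self, p: tuple[float, float, float]) -> bool:
        # True if every coordinate lies between the box's min and max corners.
        return all(lo <= c <= hi for lo, c, hi in zip(self.min_corner, p, self.max_corner))


def sample_viewpoints(box: ViewBox, steps: int) -> list[tuple[float, float, float]]:
    """Return steps**3 evenly spaced head positions spanning the box, corners included."""
    if steps < 2:
        raise ValueError("steps must be >= 2 so both faces of the box are sampled")
    axes = []
    for lo, hi in zip(box.min_corner, box.max_corner):
        # Evenly spaced coordinates from lo to hi (inclusive) along this axis.
        axes.append([lo + (hi - lo) * i / (steps - 1) for i in range(steps)])
    return [tuple(p) for p in product(*axes)]


def render_jobs(points: list[tuple[float, float, float]]) -> list[tuple[tuple[float, float, float], str]]:
    """Pair every sampled position with every cube face: one offline render per pair."""
    return [(p, face) for p in points for face in CUBE_FACES]


if __name__ == "__main__":
    # A 2 m x 1 m x 2 m box at standing head height (1 m to 2 m above the floor).
    box = ViewBox((-1.0, 1.0, -1.0), (1.0, 2.0, 1.0))
    points = sample_viewpoints(box, steps=3)
    jobs = render_jobs(points)
    print(len(points), "positions,", len(jobs), "renders")
```

With `steps=3` the grid has 27 positions and therefore 162 position/face pairs. A real pipeline would presumably sample more densely or adaptively, then use the captured views to guide the polygon reduction; this sketch stops at enumerating the views.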

While other light-field approaches that we’ve seen are fundamentally constrained by the huge volumes of data they take up (making them hard to deliver to users), Google says that individual room-scale view boxes made with Seurat can be as small as just a few megabytes, and that a complex app containing many view boxes, along with interactive real-time assets, would not be larger than a typical mobile app.

That’s a huge deal because it means developers could create mobile VR games that approach the graphical quality users might expect from a high-end desktop VR headset, which may be an important part of convincing people to spend nearly as much money on a standalone Daydream headset.

Google appears to still be in the early phases of developing Seurat, and we’re still waiting for a deeper technical explanation; potential pitfalls may yet be revealed, and there’s no word on when developers will be able to use the tech, or how much time and money it takes to render such environments. If it all works as Google says, though, this could be a breakthrough for graphics on mobile VR devices.