In the last few years, Lytro has made a major pivot away from consumer-facing digital camera products and toward high-end production cameras and tools, with a major part of the company’s focus on the ‘Immerge’ light-field camera for VR. In February, Lytro announced it had raised another $60 million to continue developing the tech. I recently stopped by the company’s offices to see the latest version of the camera and the major improvements in capture quality that come with it.

The first piece of content captured with an Immerge prototype was the ‘Moon’ experience, which Lytro revealed back in August of 2016. This was a benchmark moment for the company, a test of what the Immerge camera could do.

Now, to quickly familiarize yourself with what makes a light-field camera special for VR, the important thing to understand is that light-field cameras shoot volumetric video. So while the basic cameras of a 360-degree video rig output flat frames of the scene, a light-field camera is essentially capturing enough data to recreate the scene as complete 3D geometry as seen within a certain volume. The major advantage is the ability to play the scene back through a VR headset with truly accurate stereo and allow the viewer to have proper positional tracking inside the video, both of which result in a much more immersive experience, or what we recently called “the future of VR video.” There are also more advantages of light-field capture that will come later down the road when we start seeing headsets equipped with light-field displays… but that’s for another day.

So, the Moon experience captured with Lytro’s early Immerge prototype did achieve all those great things that light-field is known for, but it wasn’t good enough just yet. It’s hard to tell unless you’re seeing it through a VR headset, but the Moon capture had two notable issues: 1) it had a very limited capture volume (meaning the space within which your head can freely move while keeping the image intact), and 2) the fidelity wasn’t there yet; static objects looked great, but moving actors and objects in the scene exhibited grainy outlines.

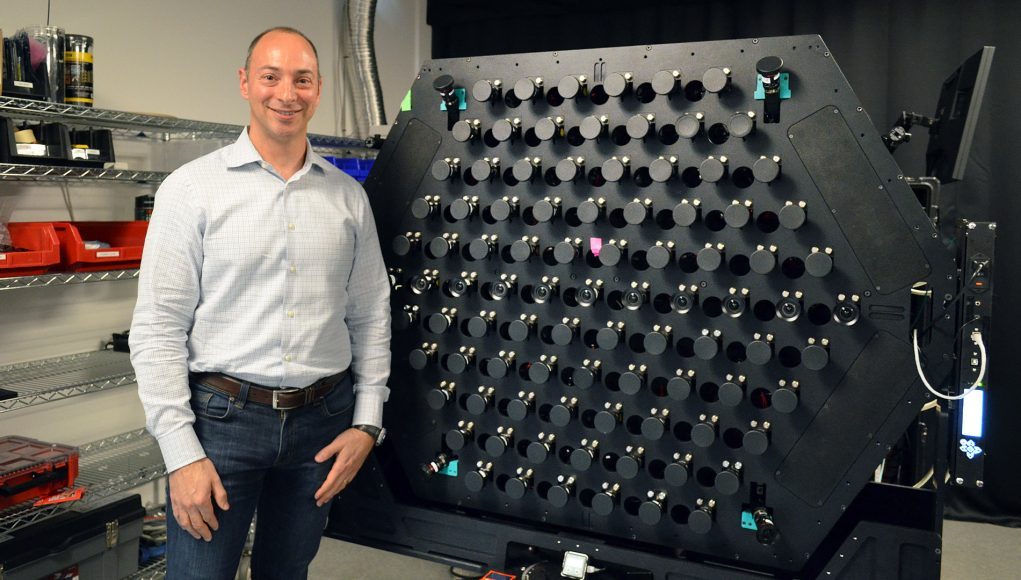

So Lytro took what they learned from the Moon shoot, went back to the drawing board, and created a totally new Immerge prototype, which solved those problems so effectively that the company now proudly says their camera is “production ready” (no joke: scroll to the bottom of this page on their website and you can submit a request to shoot with the camera).

The new, physically larger Immerge prototype brings a correspondingly larger capture volume, which means the viewer has more freedom of movement inside the capture. And the higher-quality cameras provide more data, allowing for greater capture and playback fidelity. The latest Immerge camera is about four times larger than the prototype that captured the Moon experience. It features a whopping 95-element planar light-field array with a 90-degree field of view. Those 95 elements are larger than on the precursor too, capturing higher-quality data.

I got to see a brand new production captured with the latest Immerge camera, and while I can’t talk much about the content (or unfortunately show any of it), I can talk about the leap in quality.

The move from Moon to this new production is substantial. Not only does the apparent resolution feel higher (leading to sharper ‘textures’), but the depth information is more precise, which has largely eliminated the grainy outlines around non-static scene elements. That improved depth data has something of a double bonus for visual quality, because sharper captures enhance the stereoscopic effect by creating better edge contrast.

Do you recall early renders of a spherical Immerge camera? Purportedly due to feedback from early productions using a spherical approach, the company decided to switch to a flat (planar) capture design. With this approach, capturing a 360-degree view requires the camera to be rotated to individually shoot each side of an eventual pentagonal capture volume. This sounds harder than capturing the scene all at once in 360, but Lytro says it’s easier for the production process.

The size of the capture volume has been increased significantly over Moon, though it can still feel limiting at this size. While you’re well covered for any reasonable movements you’d make while your butt is planted in a chair, if you take a large step in any direction, you’ll still leave the capture volume (causing the scene to fade to black until you step back inside).

And, although this has little to do with the camera, the experience I saw featured incredibly well-mixed spatial audio, which sold the depth and directionality of the light-field capture in which I was standing. I was left very impressed with what Immerge is now capable of capturing.

The new camera is impressive, but the magic is not just in the hardware; it’s also in the software. Lytro is developing custom tools to fuse all the captured information into a coherent form for dynamic playback, and to aid production and post-production staff along the way. The company doesn’t succeed just by making a great light-field camera; they’re responsible for creating a complete and practical pipeline that actually delivers value to those who want to shoot VR content. Light-field capture provides a great many benefits, but it needs to be easy to use at production scale, something that Lytro is focusing on just as heavily as the hardware itself.

All in all, seeing Lytro’s latest work with Immerge has further convinced me that today’s de facto 360-degree film capture is a stopgap. When it comes to cinematic VR film production, volumetric capture is the future, and Lytro is on the bleeding edge.