Michael Abrash’s presentation at Oculus Connect is always a highlight of the company’s annual developer event, offering a forward-thinking and ever-inspirational look at the future of virtual reality. This time, at Oculus Connect 3, the Chief Scientist at Oculus made some bold, specific predictions about the state of VR in five years.

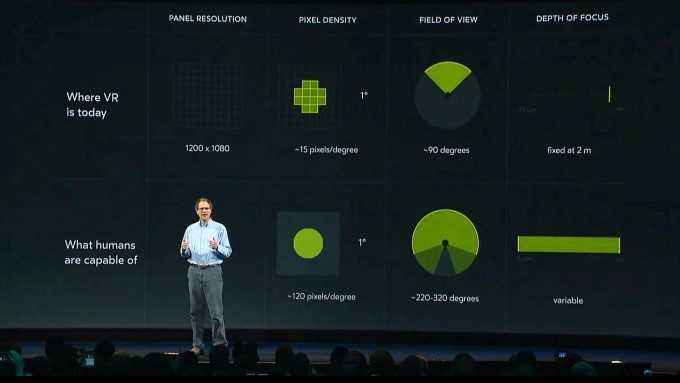

First, he addressed visuals, the most critical area for near-term improvement. Current high-end headsets like the Rift and Vive, with their roughly 100-degree field of view and 1080×1200 display panels, deliver around 15 pixels per degree. Humans can see a field of view of at least 220 degrees at around 120 pixels per degree (assuming 20/20 vision), Abrash says, and display and optics technologies are far from achieving this (forget 4K or 8K; this is beyond 24K per eye). In five years, he predicts that pixel density will double to 30 pixels per degree and the FoV will widen to 140 degrees, using a resolution of around 4000×4000 per eye. In addition, the fixed depth of focus of current headsets should become variable. Widening the FoV beyond 100 degrees and achieving variable focus both require new advances in displays and optics, but Abrash believes both will be solved within five years.
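The resolution figures above follow from multiplying field of view by angular pixel density. This is a simplified sketch: it assumes pixels are spread uniformly across the field of view (real headset lenses do not achieve this), and the function name `pixels_across` is illustrative.

```python
def pixels_across(fov_degrees: int, pixels_per_degree: int) -> int:
    """Pixels needed along one axis to cover fov_degrees at a uniform density.

    Simplifying assumption: density is constant across the whole field of
    view, which real headset optics do not achieve.
    """
    return fov_degrees * pixels_per_degree


# Human vision, using the figures Abrash quoted: 220 degrees at 120 ppd.
human = pixels_across(220, 120)
# Abrash's five-year prediction: 140 degrees at 30 ppd.
five_year = pixels_across(140, 30)

print(f"Human vision: {human:,} pixels across")
print(f"Five-year target: {five_year:,} pixels across")
```

The human figure (26,400 pixels) is where the "beyond 24K per eye" comparison comes from, and the five-year figure (4,200 pixels) is consistent with the roughly 4000×4000-per-eye panel Abrash described.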

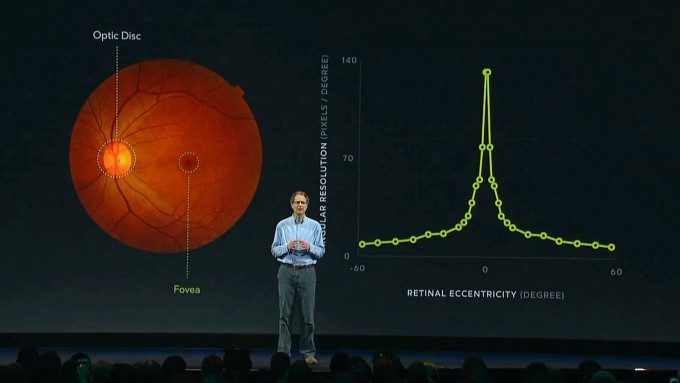

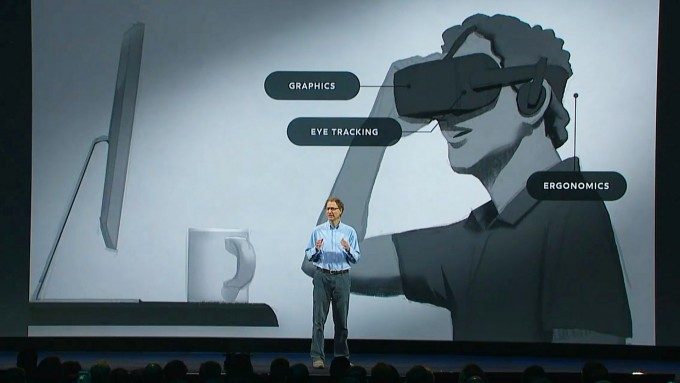

Rendering 4000×4000 per eye at 90Hz is an order of magnitude more demanding than the current spec, so for this to be achievable in the next five years, foveated rendering is essential, Abrash says. This technique renders at full quality only the tiny portion of the image that lands on the fovea (the only part of the retina that can see significant detail), with the rest blending to a much lower fidelity, massively reducing rendering requirements. Estimating the position of the fovea requires “virtually perfect” eye tracking, which Abrash describes as “not a solved problem at all” due to the variability of pupils and eyelids and the complexity of building a system that works across the full range of eye motion for a broad user base. Because it is so critical, Abrash believes eye tracking will be tackled within five years, but he admits it carries the highest risk of all his predictions.
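A rough sketch of why foveated rendering matters. The first part only multiplies out the panel resolutions quoted above. The second estimates shaded pixels under a deliberately simple, hypothetical two-level model: a full-resolution inset covering `inset_fraction` of the image, with the periphery rendered at `1/periphery_scale` resolution along each axis. The parameter values are illustrative assumptions, not figures from Abrash, and the model ignores the blended transition zone.

```python
def pixels_per_eye(width: int, height: int) -> int:
    """Total pixels in one eye's panel."""
    return width * height


def foveated_pixels(total: int, inset_fraction: float, periphery_scale: int) -> float:
    """Estimate pixels actually shaded with a simple two-level foveated scheme.

    Hypothetical model: `inset_fraction` of the image (by area) is shaded at
    full resolution; the rest is shaded at 1/periphery_scale resolution per
    axis, i.e. 1/periphery_scale**2 as many pixels, then upscaled.
    """
    full_res = total * inset_fraction
    periphery = total * (1 - inset_fraction) / periphery_scale**2
    return full_res + periphery


current = pixels_per_eye(1080, 1200)  # Rift/Vive-class panel
target = pixels_per_eye(4000, 4000)   # predicted five-year panel
print(f"Brute-force increase: {target / current:.1f}x")

# Illustrative values only: 5% of the image at full resolution,
# the periphery at quarter resolution per axis.
shaded = foveated_pixels(target, inset_fraction=0.05, periphery_scale=4)
print(f"Foveated: {shaded:,.0f} pixels shaded per eye")
```

Brute force, the predicted panel has over twelve times as many pixels per eye. Under these illustrative parameters the foveated workload falls back to the same order of magnitude as today's panels, which is the intuition behind Abrash's argument; real savings depend on eye-tracking accuracy and how small the full-quality region can be made.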

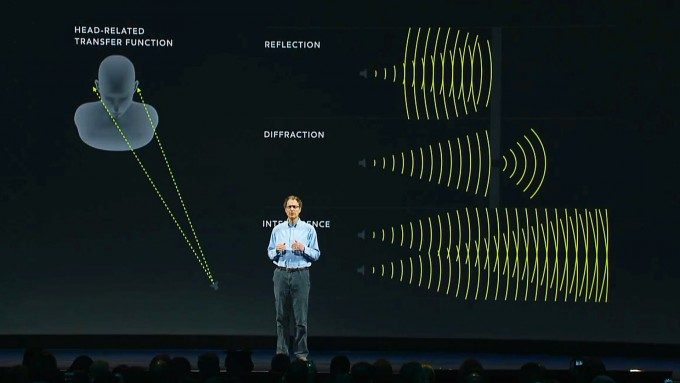

Next, he moved briefly to audio: personalised head-related transfer functions (HRTFs) will enhance the realism of positional audio. The Rift’s current 3D audio solution applies an HRTF in real time based on head tracking, but the same generic HRTF is used for all users. HRTFs vary by individual due to the size of the torso and head and the shape of the ears, so creating personalised HRTFs should significantly improve the audio experience for everyone, Abrash believes. While he didn’t go into detail about how this would be achieved (measuring an HRTF typically requires an anechoic chamber), he suggested it could be “quick and easy” to generate one in your own home within the next five years. In addition, he expects advances in modelling reflection, diffraction and interference to make sound propagation more realistic. Accurate audio is arguably even more complex than visuals because, unlike light, sound travels slowly enough for its propagation delays to be discernible; despite these impressive advances, real-time virtual sound propagation will likely remain simplified well beyond five years because it is so computationally expensive, Abrash says.
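One way to see why head size matters is the interaural time difference (ITD), the delay between a sound reaching each ear, which is one of the cues an HRTF encodes. The sketch below uses the classic Woodworth spherical-head approximation, ITD = (a / c)(θ + sin θ), with head radius a, speed of sound c and source azimuth θ. This textbook simplification is chosen purely for illustration; it is not the model Oculus uses, and a full HRTF also captures frequency-dependent filtering by the ears and torso. The head radii are assumed example values.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 degrees Celsius


def woodworth_itd(head_radius_m: float, azimuth_rad: float) -> float:
    """Interaural time difference in seconds for a rigid spherical head.

    Woodworth approximation, valid for azimuths from 0 (straight ahead)
    to pi/2 (directly to one side).
    """
    return (head_radius_m / SPEED_OF_SOUND) * (azimuth_rad + math.sin(azimuth_rad))


# Assumed example radii for a smaller and a larger head.
for radius in (0.080, 0.095):
    itd_us = woodworth_itd(radius, math.pi / 2) * 1e6
    print(f"head radius {radius * 100:.1f} cm -> ITD at 90 degrees: {itd_us:.0f} microseconds")
```

The two heads differ by roughly a hundred microseconds for a sound directly to one side: exactly the kind of individual variation that a single generic HRTF cannot account for.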

On controllers, Abrash believes hand-held motion devices like Oculus Touch could remain the default interaction technology “40 years from now”. Ergonomics, functionality and accuracy will no doubt improve over that period, but this style of controller could well become “the mouse of VR.” Interestingly, he suggested that hand tracking (without any controller or gloves) would become standard within five years. It would be accurate enough to represent precise hand movements in VR, and particularly useful for expressive avatars and for simple interactions that don’t require holding a Touch-like controller, such as web browsing or launching a movie. I see a parallel here with smartphones versus consoles and PCs: touchscreens are great for casual interaction, but nothing beats physical buttons for typing or intense gaming. It makes sense that no matter how good hand tracking becomes, you’ll still want to hold something with physical resistance in many situations.

Addressing more general improvements, Abrash predicts that despite the complexities of eye tracking and wider-FoV displays and optics, headsets will be lighter in five years, with better weight distribution. Plus, he says, they should handle prescription correction more conveniently (perhaps a bonus that comes with variable-focus technology). Most significantly, at the high end, VR headsets will become wireless. We’ve heard many times that existing wireless solutions are simply not up to the task of meeting even the current bandwidth and latency requirements of VR, and Abrash repeated this sentiment, but he believes it can be achieved in five years, assuming foveated rendering is part of the equation.
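The wireless problem is easy to see with back-of-the-envelope arithmetic. This sketch computes raw, uncompressed video bandwidth for two eyes at 90Hz, assuming 24 bits per pixel. It ignores compression, blanking intervals and protocol overhead, so real links will differ, but the scale of the increase is the point. The function name and default parameters are illustrative assumptions.

```python
def raw_bandwidth_gbps(width: int, height: int, eyes: int = 2,
                       refresh_hz: int = 90, bits_per_pixel: int = 24) -> float:
    """Uncompressed display bandwidth in gigabits per second.

    Assumptions: every pixel is sent every frame at bits_per_pixel,
    with no compression or link overhead.
    """
    bits_per_second = width * height * eyes * refresh_hz * bits_per_pixel
    return bits_per_second / 1e9


today = raw_bandwidth_gbps(1080, 1200)   # Rift/Vive-class panels
future = raw_bandwidth_gbps(4000, 4000)  # predicted 4000x4000 per eye
print(f"Today: {today:.1f} Gbps, predicted panels: {future:.1f} Gbps")
```

Raw data grows by more than twelve times, which helps explain why Abrash ties wireless headsets to foveated rendering: fewer full-detail pixels means less data to move.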

Next, he talked about the potential of bringing the real world into the virtual space, something he referred to as “augmented VR”: the headset would scan your real environment and render it convincingly, or place you in another scanned environment. This could serve as the ultimate mixed-reality ‘chaperone’ system, letting you confidently move around your real space, pick up real objects, and see who just walked in, but it could also make you feel as if you were anywhere on the planet, blurring the line between the real world and VR. We can already create a believable, high-resolution pre-scanned recreation of many environments (see Realities), but doing this in real time in a consumer product poses significant hurdles; Abrash believes many of them will be solved in five years. He clarified that augmented VR would be very different from AR glasses (e.g. HoloLens) that overlay displayed images on a direct view of the real world, because augmented VR gives complete control over every pixel in the scene, enabling much more precise changes, complete transformations of the entire scene, and anything in between.

The real significance of augmented VR is being able to share any environment with other people, locally or across the world. The VR avatars coming soon to Oculus Home are primitive compared to what Abrash expects to be possible in five years. Yet even with hand tracking close to the accuracy of retroreflective-studded gloves in a motion-capture environment, along with advances in facial expression capture and reproduction and in markerless full-body tracking, the realistic representation of virtual humans is by far the most challenging aspect of VR, Abrash says, because we are so finely tuned to the most subtle changes in the expressions and body language of the people around us. We can expect a huge number of improvements to the believability of sharing a virtual space with others, but staying on the right side of the uncanny valley will still be the goal in five years, he believes. It could be decades before anyone comes close to feeling they are in the presence of a “true human” in VR.

Finally, Abrash revisited the “dream workspace” he discussed last year, with unlimited whiteboards, monitors, or holographic displays in any size and configuration, instantly switchable depending on the task at hand, for the most productive work environment possible. Add virtual humans, and it becomes an equally powerful collaboration tool. But for this to be comfortable as an all-day work environment, all of the advancements he covered would be required. For example, the display technology would need to be sharp enough for virtual monitors to replace real ones, augmented VR would need to reproduce and share the real environment accurately, the FoV would need to be wide enough to see everyone in the meeting at once, and spatial audio would need to be accurate enough to pinpoint who is speaking. Not every aspect of this dream will come true in five years, he says, but Abrash believes we will be well along the path.

The prospect of such a giant step forward in so many areas in such a short space of time is exciting, but can it really happen? And will it all come within one generational leap, or can we expect to be on third-generation consumer headsets by then? Well, Abrash’s predictions about the specifications of consumer VR headsets almost three years ago proved fairly accurate, so let’s hope he’s right again this time.