Microsoft researchers have published a paper detailing a technique to record live-action ‘holographic’ video suitable for the company’s HoloLens augmented reality headset. The high-quality capture can be viewed from any angle and streamed over the web.

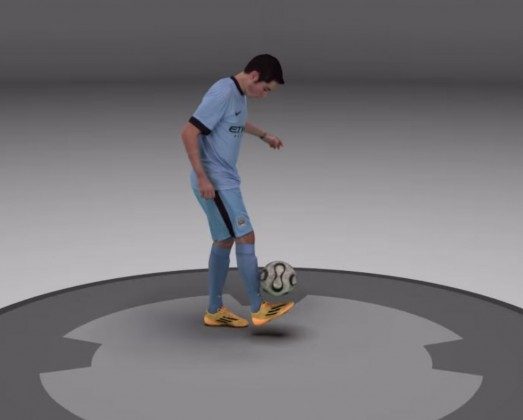

While VR video capture focuses on placing users in a completely new scene, AR’s unique capability is combining the user’s real world with digital elements. Inserting a computer-generated object (say, a cube) in AR is simple enough, as new views of the object can be computed on the fly as the user moves about. Capturing and playing back a live-action scene in AR, however, is considerably more challenging: the subjects of the scene not only need to be captured from every angle, but also need to be extracted from the background of the capture space so that they can be transplanted into the user’s own environment for convincing AR.

Microsoft has published a research paper detailing a technique to do just this; they say it’s the “first end-to-end solution to create high-quality free-viewpoint video encoded as a compact data stream.” That last part about the data stream means that the content can be streamed to users over the web. The paper was published in the journal ACM Transactions on Graphics, Volume 34, Issue 4. The abstract continues with wonderful technical jargon:

Our system records performances using a dense set of RGB and IR video cameras, generates dynamic textured surfaces, and compresses these to a streamable 3D video format. Four technical advances contribute to high fidelity and robustness: multimodal multi-view stereo fusing RGB, IR, and silhouette information; adaptive meshing guided by automatic detection of perceptually salient areas; mesh tracking to create temporally coherent subsequences; and encoding of tracked textured meshes as an MPEG video stream. Quantitative experiments demonstrate geometric accuracy, texture fidelity, and encoding efficiency. We release several datasets with calibrated inputs and processed results to foster future research.

Although motion capture systems have been doing something similar for many years, most systems only capture the motion of performers, which is then used to animate digital models. Microsoft’s technique essentially captures the performance and generates a model at the same time.

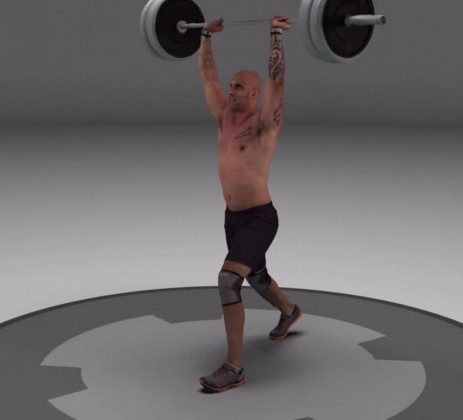

The setup used for the recordings consists of 106 RGB and infrared cameras. The process starts with a detailed capture of some 2.7 million points in a 3D point cloud. The points are then formed into a solid mesh which is cleaned up algorithmically to reduce surface artifacts, with the result consisting of more than 1 million triangles per frame. Areas of particular detail (like hands and faces) are kept at a higher level of quality than simpler areas as the entire mesh is brought down to a more manageable triangle count. After the texture is applied to the mesh, the results are compressed and encoded into a streamable MPEG file (one example shown is running at 12 Mbps).
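To put the 12 Mbps figure in perspective, here is a rough back-of-the-envelope sketch in Python. Only the bitrate comes from the example above; the 30 fps frame rate is an assumption made purely for illustration, as are the function names, and the stream is treated as constant-bitrate for simplicity.

```python
def stream_size_megabytes(bitrate_mbps: float, seconds: float) -> float:
    """Size in megabytes (10^6 bytes) of a constant-bitrate stream."""
    bits = bitrate_mbps * 1_000_000 * seconds
    return bits / 8 / 1_000_000


def bytes_per_frame(bitrate_mbps: float, fps: float) -> float:
    """Average number of bytes available to encode each frame."""
    return bitrate_mbps * 1_000_000 / 8 / fps


BITRATE_MBPS = 12.0  # the example bitrate mentioned in the article
ASSUMED_FPS = 30.0   # assumption for illustration only, not from the article

per_second = stream_size_megabytes(BITRATE_MBPS, 1)
per_minute = stream_size_megabytes(BITRATE_MBPS, 60)
frame_bytes = bytes_per_frame(BITRATE_MBPS, ASSUMED_FPS)

print(f"{per_second} MB per second")
print(f"{per_minute} MB per minute")
print(f"{frame_bytes / 1000} KB per frame at the assumed {ASSUMED_FPS:.0f} fps")
```

Under those assumptions, a minute of footage works out to roughly 90 MB, which is in the same range as ordinary HD web video and helps explain why the compact encoding matters for streaming.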

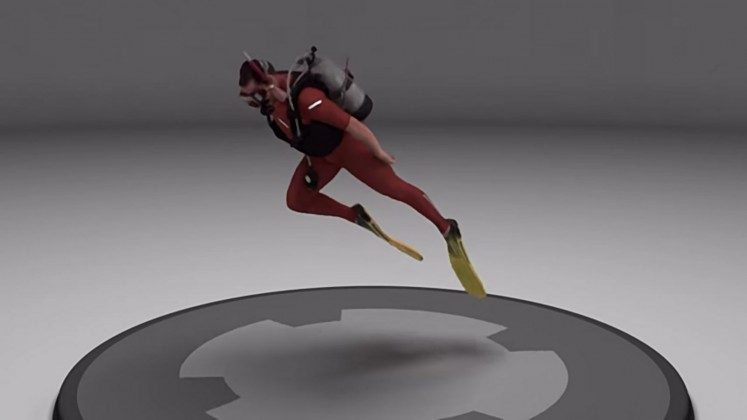

The system looks quite capable, producing high-quality output for a wide variety of captures including fast-moving objects, bright colors, reflections, and thin fabric, though it isn’t clear how much manual post-processing is needed to achieve such results. While it all looks quite good on video, it remains to be seen how the models will hold up when viewed more closely through an immersive headset.

Although the setup required is quite extensive, the results are indeed impressive, yielding ‘holographic’ video that’s clearly applicable to the company’s forthcoming AR headset, HoloLens.

The output from the technique should be equally applicable to VR headsets, which makes it a potentially attractive method for capturing live-action performances for immersive displays.