Founded in 1993, Immersion Corp. designs and licenses haptic technology that has found its way into gamepads you’re likely very familiar with. Now the company is developing a new haptic programming system that aims to help game developers create better haptic effects for their games, faster.

The latest generation of VR controllers uses more advanced haptics than the basic rumble found in today’s gamepads. But if you’ve played much VR, you’ve probably noticed that a wide swath of today’s VR games leave these haptic capabilities largely underutilized.

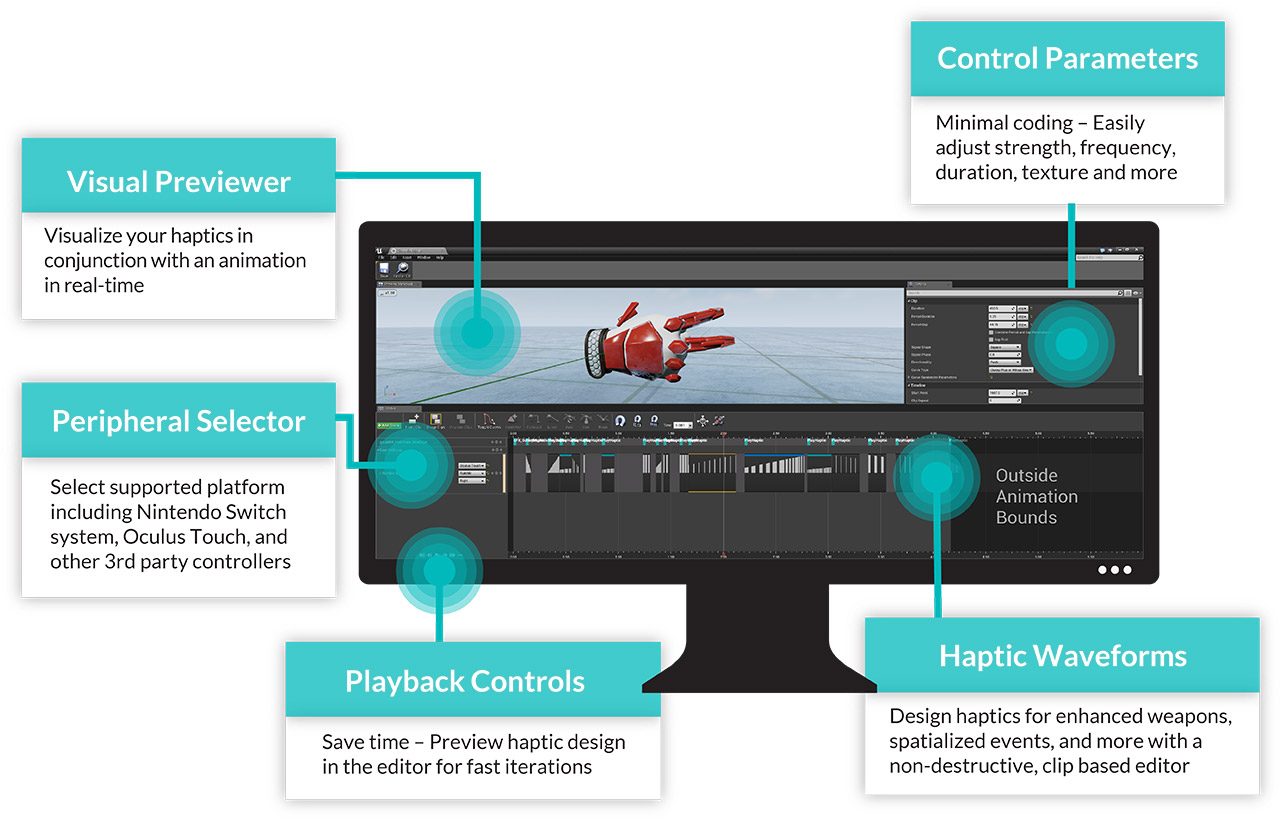

Immersion Corp. says this is because programming haptic effects is hard: today it generally means entering values like amplitude, frequency, and timing at the code level in order to trigger the haptics inside a controller. The company says it has now found a better way with the TouchSense Force plugin (and API), which turns the creation of haptic effects into a much more intuitive visual process.
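To make the “code-level” approach concrete, here is a minimal sketch of what hand-authoring an effect can look like when a controller accepts raw parameters. The `HapticPulse` type, the `reload_effect` values, and the `effect_length` helper are hypothetical and not taken from TouchSense Force or any vendor SDK; we simply assume a controller that takes an amplitude (0 to 1), a frequency in Hz, and a start time and duration in seconds for each pulse.

```python
from dataclasses import dataclass


@dataclass
class HapticPulse:
    # Hypothetical pulse description; real controller APIs differ.
    start: float      # seconds from the start of the effect
    duration: float   # seconds
    amplitude: float  # 0.0 (off) to 1.0 (full strength)
    frequency: float  # vibration frequency in Hz


# Every number below is typed in by hand and tuned by trial and error.
reload_effect = [
    HapticPulse(start=0.00, duration=0.05, amplitude=0.9, frequency=160.0),  # magazine release
    HapticPulse(start=0.40, duration=0.10, amplitude=0.6, frequency=80.0),   # new magazine slides in
    HapticPulse(start=0.55, duration=0.03, amplitude=1.0, frequency=200.0),  # click into place
]


def effect_length(pulses):
    """Total time in seconds until the last pulse finishes."""
    return max(p.start + p.duration for p in pulses)
```

If the reload animation is later sped up or slowed down, every `start` value has to be re-tuned by hand, which is exactly the tedium Immersion is pitching a visual tool against.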

Rather than having developers code specific timing, amplitude, and frequency values, TouchSense Force (launching initially for UE4) provides a ‘clip’-based timeline interface that will be instantly familiar to anyone who has edited audio or video files. Developers can pull an animation into the timeline, easily design haptic effects that are finely tuned to it, and play those effects back alongside the animation on the fly for testing and tweaking.
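A clip-based timeline can be modeled as a list of clips, each with its own start time and strength envelope; at playback, the engine samples whichever clips are active at the current moment. The sketch below is our own illustration, not Immersion’s data model. The `Clip` class, the linear attack/decay envelope, the rule that the strongest overlapping clip wins, and the 100 Hz sample rate are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    start: float         # position on the timeline, seconds
    length: float        # seconds
    peak: float          # peak amplitude, 0.0 to 1.0
    attack: float = 0.0  # ramp-up time at the beginning, seconds
    decay: float = 0.0   # ramp-down time at the end, seconds

    def amplitude_at(self, t: float) -> float:
        """Amplitude of this clip at timeline time t (0 outside the clip)."""
        local = t - self.start
        if local < 0 or local > self.length:
            return 0.0
        gain = 1.0
        if self.attack > 0 and local < self.attack:
            gain = local / self.attack  # linear fade-in
        remaining = self.length - local
        if self.decay > 0 and remaining < self.decay:
            gain = min(gain, remaining / self.decay)  # linear fade-out
        return self.peak * gain


def sample_timeline(clips, t):
    """Where clips overlap, play the strongest one (an assumed mixing rule)."""
    return max((c.amplitude_at(t) for c in clips), default=0.0)


def render(clips, rate_hz=100):
    """Sample the whole timeline at rate_hz, e.g. for a preview or a controller buffer."""
    end = max((c.start + c.length for c in clips), default=0.0)
    steps = int(round(end * rate_hz)) + 1
    return [sample_timeline(clips, i / rate_hz) for i in range(steps)]
```

Moving a clip on the timeline only changes its `start`, so a designer can drag effects around and immediately re-render the preview instead of rewriting numbers in code.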

So instead of a gun-reloading animation triggering a simple rumble for a second or two, a developer using TouchSense Force could create a complex series of haptic clips that closely match each part of the animation for added realism.

Immersion Corp. demonstrated TouchSense Force at SVVR 2017 this week using an animation of a robotic glove enclosing the hand of a VR user (definitely not inspired by Iron Man’s suit-up scenes), full of intricate detail and moving parts.

According to the company, it took roughly 10 minutes to use the TouchSense Force plugin to design a series of varied effects carefully synced to the glove’s movements, work that traditionally could have taken hours of careful tweaking. When I tried the result for myself with a Rift headset and Touch controller, it was indeed very impressive, far beyond the level of haptic detail I’ve seen in any VR game to date.

Actually creating these effects with the plugin is essentially code-free. I have a basic understanding of audio editing and waveforms, and I quickly understood the process of creating each effect; I’m confident I could create my own effects with the plugin, which is pretty cool considering I have no game development experience. That intuitiveness makes creating such effects easier and faster while giving developers a great deal of control. The hope is that this will lead developers to pay much closer attention to detail in their use of haptics in VR games.

Immersion Corp. is also building a few other useful features into the tool. For one, if an animation gets changed, its haptic effects can change with it automatically. This works by associating specific haptic clips with specific moments in the animation using UE4’s animation Notifies system. Because the animation and the haptic clips are linked, changing the animation also changes the playback timing of the clips, so developers can tweak the haptics and the animation independently without redoing their work if they make changes after the fact.
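The benefit of anchoring clips to animation events rather than to absolute times can be shown with a small sketch. This is not UE4’s Notify API or Immersion’s implementation; the marker names, the frame-based `markers` dictionary, the 30 fps frame rate, and the `schedule` helper are all hypothetical. Each haptic clip stores the name of the animation event it belongs to plus an optional offset, and its start time is recomputed from wherever that event currently sits.

```python
def schedule(bindings, markers, fps):
    """Resolve each haptic clip to a start time in seconds.

    bindings: list of (clip_name, marker_name, offset_seconds)
    markers:  dict mapping marker_name -> frame number in the current animation
    fps:      animation frame rate
    """
    times = {}
    for clip_name, marker_name, offset in bindings:
        # The clip follows its marker, so retiming the animation retimes the clip.
        times[clip_name] = markers[marker_name] / fps + offset
    return times


# Hypothetical bindings for a glove-style animation like the one in the demo.
bindings = [
    ("finger_plates_lock", "FingersClose", 0.0),
    ("wrist_servo_whir", "WristRotate", -0.05),  # start slightly before the event
]

original = {"FingersClose": 30, "WristRotate": 60}
# An animator later slows the animation down: the same events now land on later frames.
edited = {"FingersClose": 45, "WristRotate": 90}
```

Calling `schedule(bindings, edited, 30)` moves both clips later without anyone touching the haptic data itself, which is the property the linked approach is meant to provide.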

The plugin also offers cross-platform support, so that developers can author their haptic effects once, and have those effects play back as closely as possible on other controllers (which could even have different haptic technologies in them) without re-authoring for that controller’s own haptic API.
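One way to picture “author once, play back as closely as possible” is a translation layer that maps a device-neutral effect onto each controller’s capabilities. Immersion hasn’t described how its mapping works, so the `DeviceProfile` fields, the two example devices, and the clamping and quantization rules below are purely illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class DeviceProfile:
    name: str
    min_hz: float          # lowest frequency the actuator can reproduce
    max_hz: float          # highest frequency the actuator can reproduce
    amplitude_levels: int  # number of discrete strength steps the device API accepts


@dataclass
class Pulse:
    duration: float   # seconds
    amplitude: float  # 0.0 to 1.0
    frequency: float  # Hz


def adapt(pulse: Pulse, device: DeviceProfile) -> Pulse:
    """Approximate an authored pulse on a specific device."""
    # Clamp the frequency into the range the actuator can actually play.
    freq = min(max(pulse.frequency, device.min_hz), device.max_hz)
    # Snap the amplitude to the nearest level the device accepts
    # (at least two levels, i.e. off and full, are assumed).
    steps = max(device.amplitude_levels - 1, 1)
    amp = round(pulse.amplitude * steps) / steps
    return Pulse(pulse.duration, amp, freq)


# Hypothetical devices: a simple fixed-frequency rumble motor and a wideband actuator.
rumble = DeviceProfile("fixed-frequency rumble", 160.0, 160.0, 2)
wideband = DeviceProfile("wideband actuator", 50.0, 500.0, 256)
```

The same authored `Pulse(0.1, 0.6, 80.0)` plays at full strength and 160 Hz on the rumble motor but close to its original values on the wideband actuator; a production system would likely use far more sophisticated perceptual mapping than simple clamping.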

TouchSense Force is now available to select developers in early access as a UE4 plugin (you can sign up for access here). Pricing has not yet been announced. The company says it also plans to release a Unity plugin in Q3, along with an API that will allow the same feature set to be integrated into custom game engines. For now the system supports Oculus Touch, Nintendo Switch, and unspecified “TouchSense Force-compatible hardware,” though we imagine the company is working to get Vive controllers integrated ASAP.