Road to VR speaks to Mike Nichols, SoftKinetic’s VP of Content & Applications, about how they’re helping bridge the gap between virtual reality and human interaction.

Bridging the Gap Between Virtual Reality and Interaction

SoftKinetic are a new breed of technology company built on the back of increased interest in solving the problems of human interaction with digital interfaces and worlds. The trend for natural human interactivity was arguably kickstarted by the advent and success of the Nintendo Wii and its Wii-Mote, then the Microsoft Kinect, both allowing consumers previously untapped ways to reach into their games and expanding the appeal of gaming to other markets.

SoftKinetic refer to themselves as a 3D vision company, who create depth sensors, tracking middleware, and content. They offer a variety of white label depth cameras, and recently worked with both Creative Labs and Intel to release the first desktop depth webcam, Senz3D, as well as Intel’s Perceptual Computing and Real Sense SDK.

The Senz3D webcam and iisu middleware are designed to work around a desktop or laptop. The advertised applications are facial tracking and recognition, hand & finger tracking, 3D scanning, as well as gaming, but the reason Mike is here today is to show how the camera can be used in a virtual reality environment. Unlike devices such as the Razer Hydra, which can be used to control an avatar’s hands, the Senz3D can be used to track your hand and finger movements so you can see and use your actual hands to interact with objects in VR.

If you have an Oculus Rift and access to a 3D printer, you can print a mount from the free file available on Thingiverse (we printed ours on our MakerBot). There are also a couple of simple Unity3D demos which Oculus Rift owners can try out.

Road to VR readers might remember Ben Lang trying the application himself at CES 2014, wearing an Oculus Rift with a Creative Labs Senz3D depth camera secured to the front by a 3D printed bracket. A simple Unity demo allowed Ben to manipulate basic shapes in VR space, seeing his real hands rather than avatars.

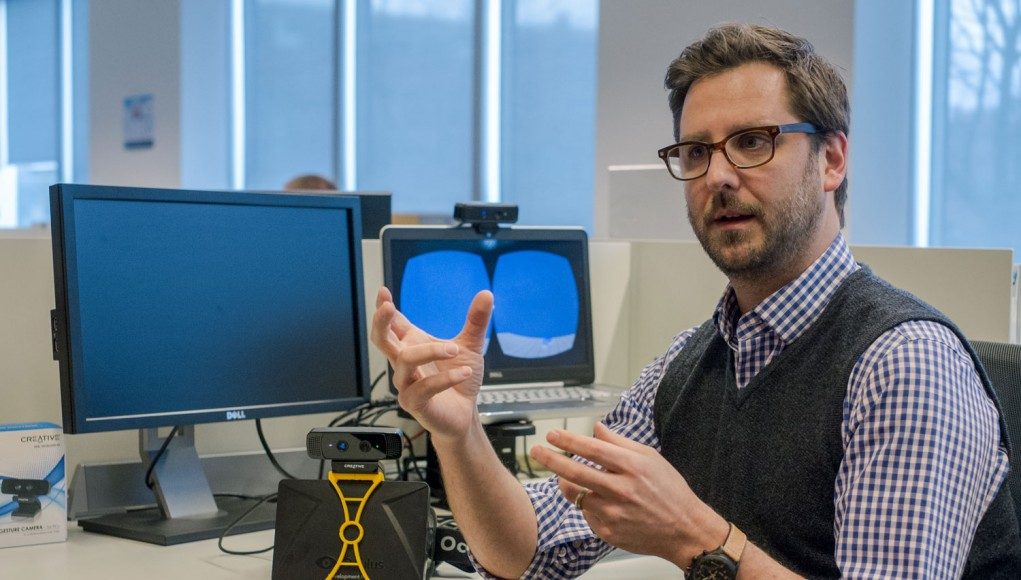

Mike Nichols is SoftKinetic’s VP of Content & Applications. He joined the company in 2010 and previously headed up Microsoft’s Project ‘Natal’, which later became known as Kinect. Road to VR’s Jonathan Tustain caught up with Mike at the VR Meetup in London to discover more about the company’s ambitions to enhance virtual reality applications.

—

Road to VR: Who are SoftKinetic?

Mike: SoftKinetic is a 3D vision company who create depth sensors, tracking middleware and content. We manufacture a variety of white label depth cameras and recently collaborated with Intel and Creative Labs to release the world’s first desktop depth webcam – the Senz3D, as well as Intel’s Perceptual Computing and Real Sense SDK. It is the Senz3D camera we have been using for our recent VR demos.

Road to VR: Would it be fair to compare the Creative Senz3D camera with Leap Motion, which has also been used to demo VR interaction? If so, how do they compare?

Mike: The Creative Senz3D is very different from the Leap, in both form and function. The Senz3D includes a Time-of-Flight depth sensor that provides an interactive volume encompassing the user. It’s able to track the hands and fingertips from any position or orientation, which provides a more comfortable way of interacting with the screen. The Senz3D is designed to be placed like a traditional webcam on a laptop or desktop monitor, so it doesn’t add clutter to your workspace, and makes the technology disappear when you’re using it.

Road to VR: Have your recent demos been an indication that SoftKinetic is seriously looking at VR applications utilizing depth camera technology?

Mike: Sure, SoftKinetic is interested in VR. It’s an experience unlike any other and it has great potential to transform the way we interact and view our media. What we showed at CES and the VR Meetup in London was really just a proof-of-concept that we wanted to share and get feedback on. Like a lot of developers who played with the Oculus DK1, we were immediately impressed, but felt there was more that SoftKinetic’s expertise in depth recognition could bring to the VR experience: creating a real sense of presence by tracking your own body movements in VR. We’ve been really encouraged by the response so far.

Road to VR: Will ‘Time of Flight’ sensors ever be accurate enough compared to inertial tracking?

Mike: Without a doubt. You can already get a glimpse of this capability when you see the quality of your hands tracked in front of the Senz3D camera with our middleware. Technology has a way of improving over time.

Road to VR: When Ben tried the technology at CES he commented, “The latency of the Creative Depth Camera was relatively impressive, but there’s still room for some improvement. There is some jumpiness, and there’s the issue of the camera’s field of view not matching that of the Oculus Rift” – how are you addressing these issues?

Mike: Those are great observations. The Creative Senz3D is extremely responsive and tracks both hands and fingers (and more) with ease in any position or orientation – that’s exactly what it is designed for, for use on a laptop or desktop. When we start something new, like VR, it’s important that we understand what is needed to provide the best user-experience. Using the Senz3D allows us to rapidly prototype ideas and learn from the results. The goal of our prototype wasn’t to create a robust demo, it was to rapidly prototype what it would be like to use our hands to interact with objects in VR. FOV and latency are just a few of the areas we’re looking at.

Road to VR: How would you respond to Ben’s conclusion about the future of this technology? – “While this type of natural hand input will work great for casual VR experiences where training-less intuitive input is important, controllers are unlikely to go away anytime soon. The type of responsiveness and accuracy that serious gamers crave can only be handled by controllers for now, and on that front, Sixense’s STEM system is confidently paving the way.”

Mike: We don’t see natural gesture as an ‘all or nothing’ solution. I think what gamers crave in controlling their games is the right tool to do the job, and certainly responsiveness and accuracy are not exclusive to physical controllers. There are still a lot of hurdles to overcome when it comes to control and navigation in VR. What we’ve discovered with hand tracking in VR was an early ah-ha moment based on a simple prototype. It’s clear to us, and those who have tried our demo, that there is something inherently intuitive about using your hands to manipulate objects in VR. It simply works the way you think it should and also has the added benefit of providing a sense of spatial awareness for your position in VR.

As I hinted at previously, creating Presence is going to be very important for many VR experiences, and we think using depth and tracking to transform your actual movements (not an interpretation of your movements through a joystick) into VR is key to creating the feeling that you’re actually someplace else – no physical controller can do that.

Road to VR: Could your technology offer positional tracking for VR?

Mike: Yes, our sensors can provide positional tracking, which is key to translating a user’s head movements into VR. They can also provide real-time room analysis that can bring additional benefits to the VR experience.

Road to VR: One of our readers, eyeandeye, commented, “I’m curious how their camera avoids the occlusion issues that the Leap apparently has. How well would this work in various lighting conditions?” How would you respond to that?

Mike: SoftKinetic’s Time-of-Flight depth sensor and tracking middleware are very different from Leap. Our depth camera creates a large interactive volume around the user, so you’re able to move your hands, fingers and head in any direction or position in front of the camera. Because our ToF camera uses infrared light, it works just as well in bright or dim lighting conditions – even total darkness won’t affect performance. The infrared light emitted from the camera is only affected by direct sunlight.

Road to VR: Could SoftKinetic increase the FoV, even matching that of the Rift?

Mike: The Creative Senz3D camera has a 90° diagonal field of view. This makes it perfect for what it was designed for as a desktop solution. We’re prototyping a variety of use cases to determine what the ideal FOV will be for VR.

Road to VR: Is SoftKinetic talking to VR manufacturers about the integration of sensors?

Mike: Yes, we’re actively working with several companies active in Augmented and Virtual Reality such as Meta and others. We are working to find the answers we need to design the correct solutions for VR/AR. It’s important to us that we take the time to prototype a variety of use cases, and not rush to any predetermined conclusions.

Road to VR: What tools would a VR developer need to create their own Unity demos that utilize this functionality?

Mike: Simple. If you don’t already have a Senz3D camera, you can purchase it on Amazon. We also provide a 3D model that can be used to attach the camera to an Oculus DK1. The model is freely available for download on MakerBot Thingiverse.

SoftKinetic’s iisu middleware is also free to download and comes with all the tools and Unity support you’ll need to start making your own demos. Find out more about SoftKinetic at their website.