Valve co-founder Gabe Newell previously revealed Valve was working on a brain-computer interface (BCI) with OpenBCI, the minds behind open-source BCI software and hardware solutions. Now Tobii, the eye-tracking firm, has announced it’s also a partner on the project, and that developer kits incorporating eye-tracking and “design elements” of Valve Index are expected to first ship sometime early next year.

Update (February 5th, 2021): Newell’s interview, which is referenced below in the original article, didn’t reveal whether OpenBCI project ‘Galea’ was the subject of the partnership. Tobii has now confirmed that this is indeed the case, along with a few other details.

Tobii will be lending its eye-tracking technology to Galea, which it says will incorporate design elements from Valve Index. Developer kits for early beta access partners will ship in early 2022, the companies say.

Reading between the lines somewhat, it appears Galea will not only include the sensor-packed strap, but also an eye-tracking enabled, possibly modified Valve Index headset.

Original Article (January 28th, 2021): Newell hasn’t been secretive about his thoughts on BCI, and how it could be an “extinction-level event for every entertainment form.” His message to software developers: start thinking about how to use BCI now, because it’s going to be important to all aspects of the entertainment industry fairly soon.

How soon? Newell says in a talk with 1 News that by 2022, studios should have BCI devices in their test labs “simply because there’s too much useful data.”

Newell speaks about BCI through a patently consumer-tinted lens, which is understandable coming from a prominent mind behind Steam, the largest digital distribution platform for PC gaming, not to mention an ardent pioneer of consumer VR as we know it today.

To Newell, BCI will one day allow developers to create experiences that functionally bypass the traditional “meat-peripherals” of old (eyes, ears, arms and legs), giving users access to richer experiences than today’s reality is capable of providing.

“You’re used to experiencing the world through eyes, but eyes were created by this low-cost bidder that didn’t care about failure rates and RMAs, and if it got broken there was no way to repair anything effectively, which totally makes sense from an evolutionary perspective, but is not at all reflective of consumer preferences. So the visual experience, the visual fidelity we’ll be able to create — the real world will stop being the metric that we apply to the best possible visual fidelity.”

On the road to that more immersive, highly-adaptive future, Newell says Valve is taking some important first steps, namely its newly revealed partnership with OpenBCI, the neurotech company behind a fleet of open-source, non-invasive BCI devices.

Newell says the partnership is working to ensure “everybody can have high-resolution [brain signal] read technologies built into headsets, in a bunch of different modalities.”

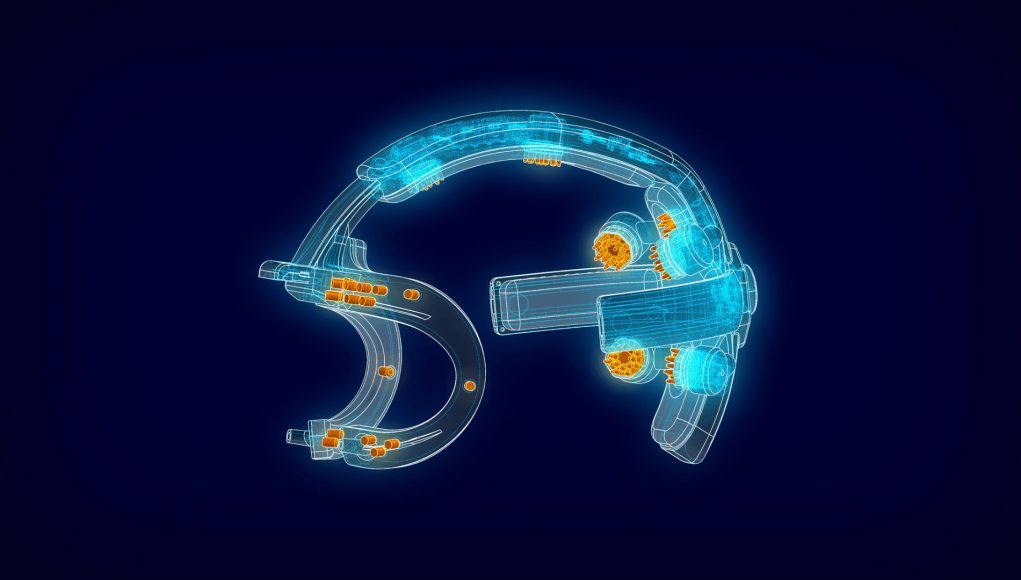

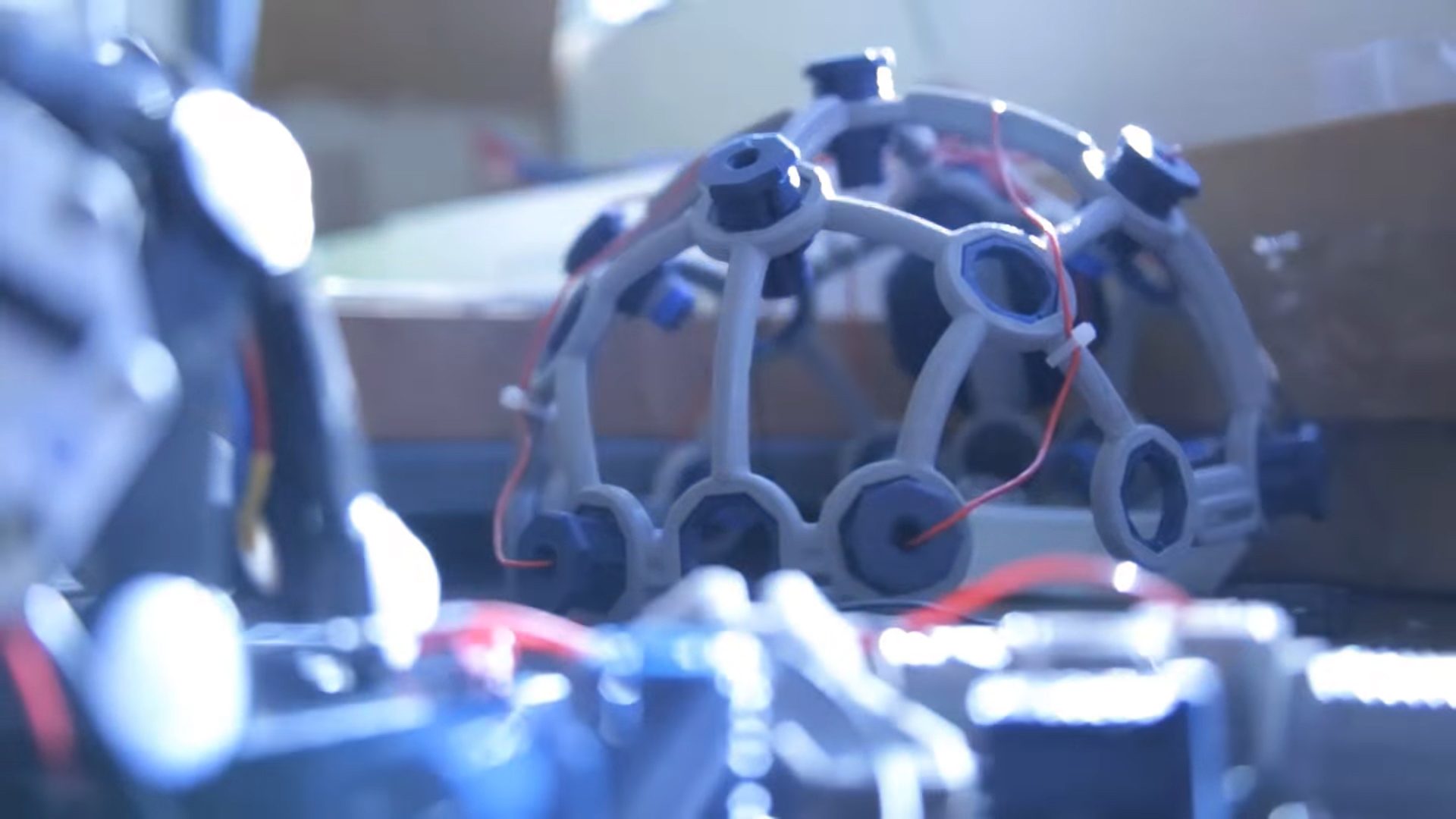

Back in November, OpenBCI announced it was making a BCI specifically for VR/AR headsets, called Galea, which sounded very similar to the vision for VR headsets fitted with electroencephalogram (EEG) devices that Valve’s Principal Experimental Psychologist Dr. Mike Ambinder described at GDC 2019.

Although Newell doesn’t go into detail about the partnership, he says that BCIs are set to play a fundamental role in game design in the very near future.

“If you’re a software developer in 2022 who doesn’t have one of these in your test lab, you’re making a silly mistake,” Newell tells 1 News. “Software developers for interactive experiences — you’ll be absolutely using one of these modified VR head straps to be doing that routinely — simply because there’s too much useful data.”

There’s a veritable laundry list of things BCI could do in the future by giving software developers access to the brain, and letting them ‘edit’ the human experience. Newell has already talked about this at length; outside of the hypotheticals, Newell says near-term research in the field is so fast-paced that he’s hesitant to commercialize anything for fear of slowing it down.

“The rate at which we’re learning stuff is so fast that you don’t want to prematurely say, ‘OK, let’s just lock everything down and build a product and go through all the approval processes,’ when six months from now, we’ll have something that would have enabled a bunch of other features.”

It’s not certain whether Galea is the subject of the partnership, but its purported capabilities seem to line up fairly well with what Newell says is coming down the road. Galea is reportedly packed with sensors, which include not only EEG, but also sensors capable of electrooculography (EOG), electromyography (EMG), electrodermal activity (EDA), and photoplethysmography (PPG).

OpenBCI says Galea gives researchers and developers a way to measure “human emotions and facial expressions,” including happiness, anxiety, depression, attention span, and interest level. Many of these are data points that could inform game developers on how to create better, more immersive games.

Provided such a high-tech VR headstrap could non-invasively ‘read’ emotional states, it would represent a big step in a new direction for gaming. And it’s one Valve clearly intends to leverage as it continues to create (and sell) the most immersive gaming experiences possible.

Interested in watching the whole interview? Catch the video directly on 1 News.