Microsoft has released a public preview of Azure Remote Rendering support for Meta Quest 2 and Quest Pro, which promises to let developers render complex 3D content in the cloud and stream it to those VR headsets in real time.

Azure Remote Rendering, which already supports desktop and the company’s AR headset HoloLens 2, notably uses a hybrid rendering approach to combine remotely rendered content with locally rendered content.

With Quest 2 and Quest Pro now supported, developers can integrate Microsoft’s Azure cloud rendering capabilities to do things like view large and complex models on Quest.

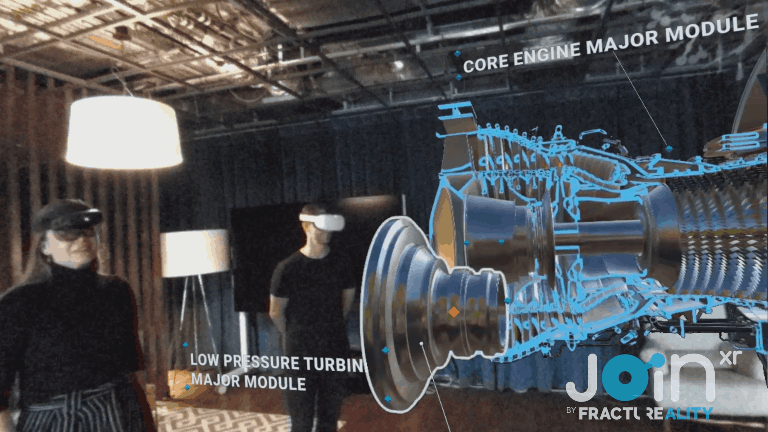

Microsoft says in a developer blog post that one such developer, Fracture Reality, has already integrated Azure Remote Rendering into its JoinXR platform, enhancing its CAD review and workflows for engineering clients.

The JoinXR model above is said to have taken 3.5 minutes to upload, and contains 12.6 million polygons and 8K images.

While streaming XR content from the cloud isn’t a new phenomenon—Nvidia initially released its own CloudXR integration for AWS, Microsoft Azure, and Google Cloud in 2021—Microsoft offering direct integration is a hopeful sign that the company hasn’t given up on VR, and is actively looking to bring enterprise deeper into the fold.

If you’re looking to integrate Azure’s cloud rendering tech into your project, check out Microsoft’s step-by-step guide here.