Meta Quest 3 brings with it new ‘Touch Plus’ controllers that do away with the tracking ring that’s been part of the company’s 6DOF consumer VR controllers ever since the original Rift. But that’s not the only change.

Editor’s Note: for some clarity in this article (and comments), let’s give some names to all the different 6DOF VR controllers the company has shipped over the years.

- Rift CV1 controller: Touch v1

- Rift S controller: Touch v2

- Quest 1 controller: Touch v2

- Quest 2 controller: Touch v3

- Quest Pro controller: Touch Pro

- Quest 3 controller: Touch Plus

6DOF consumer VR controllers from Meta have always had a ‘tracking ring’ as part of their design. The ring houses an array of infrared LEDs that cameras can detect, giving the system the ability to track the controllers in 3D space.

Quest 3 will be the first 6DOF consumer headset from the company to ship with controllers that lack a tracking ring; the company is calling the new controllers ‘Touch Plus’.

Tracking Coverage

In a session at Meta Connect 2023, the company explained that it has moved the IR LEDs from the tracking ring into the faceplate of the controller and added a single IR LED at the bottom of the handle. This means the system has fewer consistently visible markers to track, but Meta believes its improved tracking algorithms can track Touch Plus just as well as Quest 2’s controllers.

Note that Touch Plus is different from the company’s Touch Pro controllers, which also lack a tracking ring but instead use on-board cameras to track their own position in space. Meta confirmed that Touch Pro controllers are compatible with Quest 3, just as they are with Quest 2.

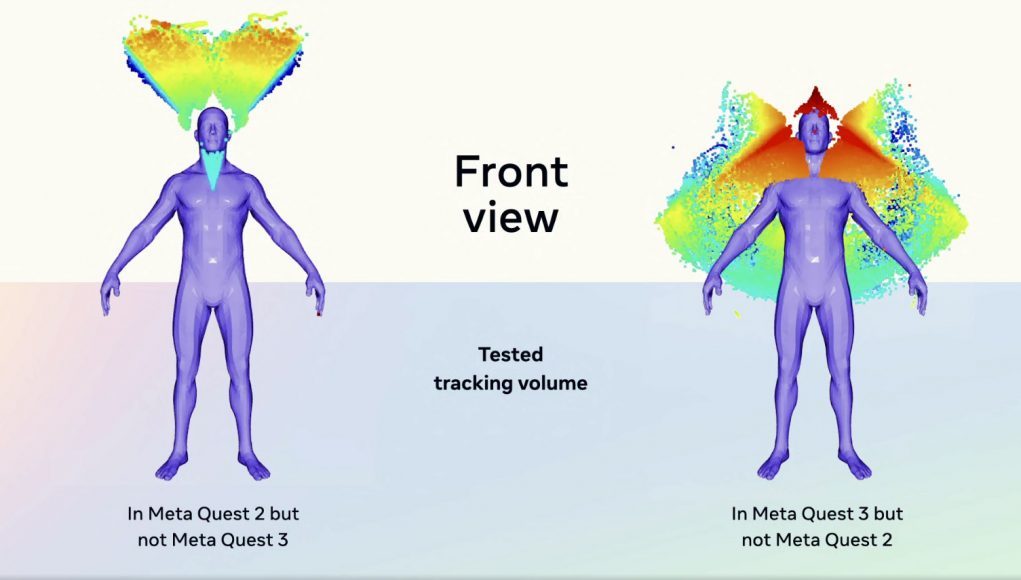

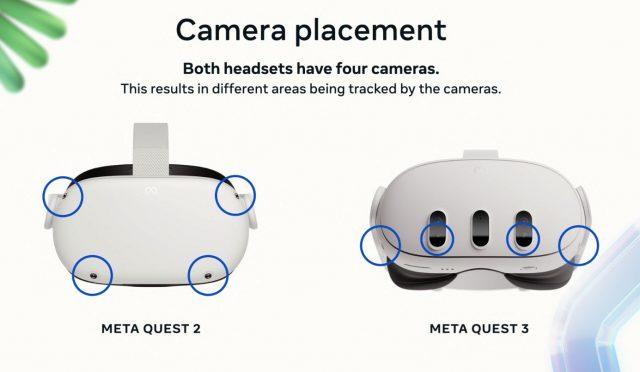

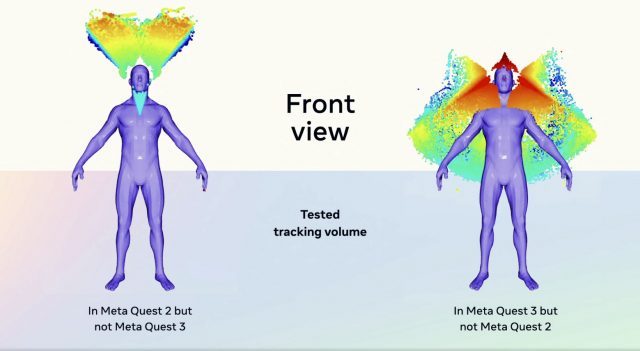

Meta was careful to point out that the change in camera placement on Quest 3 means the controller tracking volume will be notably different from Quest 2’s.

The company said Quest 3 has about the same amount of tracking volume, but it has strategically changed the shape of the tracking volume.

Notably, Quest 3’s cameras don’t capture the area above the user’s head nearly as well as Quest 2’s do. The tradeoff is that Quest 3 has more tracking coverage around the user’s torso (especially behind them) and around their shoulders.

Meta believes this is a worthwhile tradeoff because players don’t often hold their hands above their head for long periods of time, and because the headset can effectively estimate the position of the controllers for short periods when they are outside the tracking volume.
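
Meta didn’t describe how Quest 3 estimates controller position outside the cameras’ view. To illustrate the general idea, here is a minimal dead-reckoning sketch: trust the optical fix when the cameras see the controller, briefly extrapolate from motion data when they don’t, and give up after a short timeout. The `ControllerState` type, the `update` function, the 0.5-second `max_coast_s` limit, and the assumption that the controller reports gravity-compensated acceleration from an onboard IMU are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class ControllerState:
    position: Vec3  # metres, in headset space (hypothetical frame)
    velocity: Vec3  # metres per second
    seconds_untracked: float = 0.0


def update(state: ControllerState, optical_position: Vec3 | None, accel: Vec3,
           dt: float, max_coast_s: float = 0.5) -> tuple[ControllerState, str]:
    """Advance the controller estimate by one frame of length dt seconds.

    optical_position: camera-derived position, or None when out of view (assumed input).
    accel: gravity-compensated IMU acceleration (assumed input).
    """
    if optical_position is not None:
        # Cameras see the controller: use the optical fix and derive velocity
        # from the change since the previous frame.
        velocity = tuple((p - q) / dt for p, q in zip(optical_position, state.position))
        return ControllerState(optical_position, velocity, 0.0), "tracked"

    untracked = state.seconds_untracked + dt
    if untracked > max_coast_s:
        # Out of view too long: integration drift would dominate, so stop extrapolating.
        return ControllerState(state.position, (0.0, 0.0, 0.0), untracked), "lost"

    # Briefly out of view: integrate acceleration into velocity, then position.
    velocity = tuple(v + a * dt for v, a in zip(state.velocity, accel))
    position = tuple(p + v * dt for p, v in zip(state.position, velocity))
    return ControllerState(position, velocity, untracked), "estimated"
```

Raw integration like this drifts quickly, which is why the sketch only coasts for a fraction of a second; a production tracker would more likely fuse camera and IMU data with a filter (for example a Kalman filter).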

Haptics

As for haptic feedback, the company said that “haptics on the Touch Plus controller are certainly improved, but not quite to the level of Touch Pro.” It explained that Touch Plus has a single haptic motor (a voice coil modulator), whereas Touch Pro controllers have additional haptic motors in both the trigger and thumbstick.

The company also reminded developers about its Meta Haptics Studio tool, which aims to make it easy to develop haptic effects that work across all of the company’s controllers, rather than needing to design the effects for the haptic hardware in each controller individually.
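
The article doesn’t describe how Meta Haptics Studio works internally. The sketch below only illustrates the general idea of authoring one device-agnostic effect and rendering it onto whatever actuators a controller actually has. The `HapticEffect` type, the actuator names, the emphasis parameters, and the `CONTROLLER_ACTUATORS` table are hypothetical; the only grounded detail is the motor count (one motor on Touch Plus, extra trigger and thumbstick motors on Touch Pro).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class HapticEffect:
    """A device-agnostic effect: an amplitude envelope sampled at fixed steps (0.0-1.0)."""
    amplitudes: list[float]
    # Optional extra weight for localized actuators, used only if a controller has them.
    trigger_emphasis: float = 0.0
    thumbstick_emphasis: float = 0.0


# Hypothetical actuator layouts, based only on the motor counts described above.
CONTROLLER_ACTUATORS = {
    "touch_plus": ["main"],
    "touch_pro": ["main", "trigger", "thumbstick"],
}


def render(effect: HapticEffect, controller: str) -> dict[str, list[float]]:
    """Map one effect onto the actuators a given controller actually has."""
    actuators = CONTROLLER_ACTUATORS[controller]
    # Every controller plays the main envelope on its primary motor.
    tracks = {"main": list(effect.amplitudes)}
    # Localized detail is added only where matching hardware exists.
    if "trigger" in actuators:
        tracks["trigger"] = [a * effect.trigger_emphasis for a in effect.amplitudes]
    if "thumbstick" in actuators:
        tracks["thumbstick"] = [a * effect.thumbstick_emphasis for a in effect.amplitudes]
    return tracks
```

For example, `render(HapticEffect([0.0, 1.0, 0.5, 0.0], trigger_emphasis=0.8), "touch_plus")` yields only a `"main"` track, while the same effect on `"touch_pro"` also drives the trigger motor. Dropping the localized parts on simpler hardware keeps one authored effect usable everywhere, at the cost of some detail.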

Trigger Force

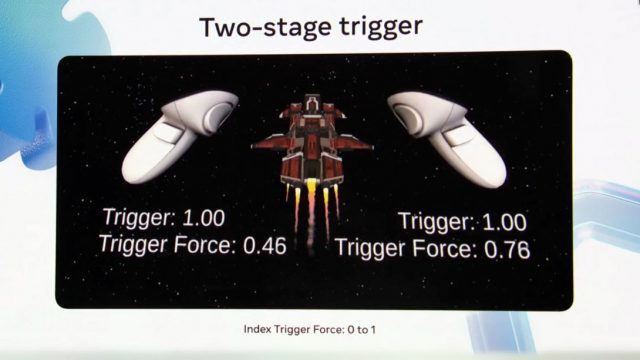

Touch Plus also brings “one more little secret” that no other Touch controller has to date: a two-stage index trigger.

Meta explained that once a user fully pulls the trigger, any additional force can be read as a separate value—essentially a measure of how hard the trigger is being squeezed after being fully depressed.
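
As a rough illustration of how an app might use that second stage, the sketch below folds the normal trigger travel and the post-pull force reading into a single ‘squeeze’ value, for example to tell a light grab apart from a hard crush. The 0.0 to 1.0 input ranges, the function names, and the `crush_threshold` value are assumptions for illustration, not Meta’s SDK.

```python
def squeeze_level(trigger: float, force: float) -> float:
    """Combine trigger travel and second-stage force into one value in [0.0, 2.0].

    trigger: 0.0 (released) to 1.0 (fully pulled). Assumed range.
    force: extra squeeze after a full pull, 0.0 to 1.0. Assumed range.
    """
    trigger = min(max(trigger, 0.0), 1.0)
    force = min(max(force, 0.0), 1.0)
    # The second stage only contributes once the trigger is fully depressed.
    return trigger + (force if trigger >= 1.0 else 0.0)


def grip_state(trigger: float, force: float, crush_threshold: float = 0.6) -> str:
    """Classify the combined value into coarse, hypothetical gameplay states."""
    level = squeeze_level(trigger, force)
    if level < 0.1:
        return "released"
    if level <= 1.0:
        return "holding"
    if level < 1.0 + crush_threshold:
        return "squeezing"
    return "crushing"
```

With these assumptions, `grip_state(1.0, 0.9)` returns `"crushing"`, while a half-pulled trigger stays in `"holding"` no matter what the force reading says.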

What’s Missing From Touch Pro

Meta also said that Touch Plus won’t include some of Touch Pro’s more niche features, namely the ‘pinch’ sensor on the thumbpad and the pressure-sensitive stylus nub that can be attached to the bottom of the controller and used to ‘draw’ on real surfaces.