At Oculus Connect in September, Oculus brought together experts and developers from around the world to talk VR. Among them were developers from Epic Games, creators of Unreal Engine, who shared some of the challenges they faced, and the solutions they found, in getting the visually stunning Showdown demo to run at 90 FPS on the Oculus Rift Crescent Bay prototype.

Nick Whiting, lead programmer on VR and visual scripting for UE4, and Nick Donaldson, senior designer at Epic Games, took the stage at Oculus Connect to talk with an audience of developers about Unreal Engine 4 (UE4) and its integration with the Oculus Rift. During the talk, the two detailed the process of optimizing Showdown, a high-fidelity UE4 demo that a five-man team managed to push to 90 FPS on a single Nvidia GTX 980 GPU so that it could run on the Oculus Rift Crescent Bay prototype.


Whiting opened the session by recounting the Unreal Engine demos that have accompanied many of the Oculus Rift’s biggest hardware milestones. First was a VR version of Epic’s Elemental tech demo for the Oculus Rift HD prototype at E3 2013. At CES 2014, Epic combined the Elemental and StrategyGame demos to create StrategyVR, which demonstrated the positional tracking capabilities of the Oculus Rift Crystal Cove prototype. At GDC 2014, Epic and Oculus revealed Couch Knights, a two-player demo shown on the Oculus Rift DK2 in which users battled each other by controlling doll-like characters.


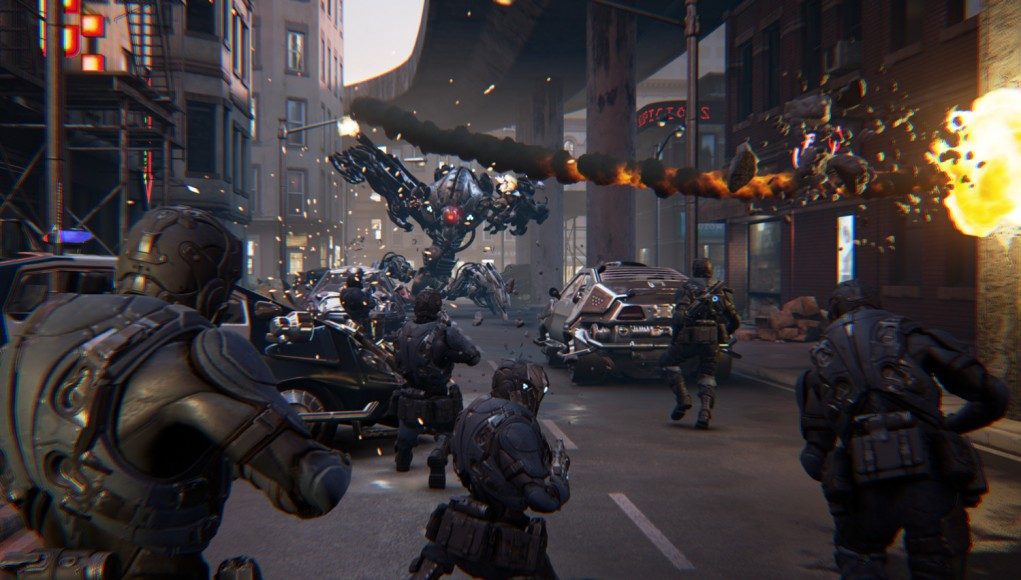

And most recently, Epic used assets from their Infiltrator and Samaritan demos to create Showdown, an impressive high-fidelity experience that the team had to optimize to run at a whopping 90 FPS on the Oculus Rift Crescent Bay prototype. The demo is positively action-packed; I was lucky enough to try it inside the Oculus Rift Crescent Bay prototype at Oculus Connect. Here’s what I wrote of the experience:

…The 10 minute session was capped off with Showdown, made by Epic Games in UE4, which put me in the middle of a city street with a slow motion action scene unfolding around me. Futuristic-looking troops were running alongside me firing their weapons. Rockets were flowing by with detailed trails of fire and smoke. The soldiers were firing at a huge robot that looked to be composed of alien technology. Debris flew by my face as bullets struck the ground. The whole time I’m flying slowly forward through the scene toward the robot. As I get nearer, a rocket blasts a car to my left and sends it flying over my head. Eventually the robot leans in and growls at my face (or whatever the robot equivalent of a growl is). This demo didn’t give me a feeling of presence—of truly being there—but it was fucking cool. And I think that’s the first time I’ve written that word on this site. I can’t wait to see experiences like this turned into rich narratives.

See Also: First Hands-on – Oculus Rift Crescent Bay is Incredible

I asked Epic if they’d release Showdown to the public. They said they’d like to, but don’t have a date at this point.

Optimizing Showdown for 90 FPS

Developing for VR is significantly more demanding than developing a typical flat-screen game. This is not only because the game must be rendered in stereoscopic 3D, but also because comfortable VR requires very high frame rates. For the Oculus Rift DK1, the target was 60 FPS; with the DK2, it rose to 75 FPS; and with the latest Oculus Rift Crescent Bay prototype, the ideal rate is a whopping 90 FPS. Hitting these targets requires careful optimization, often involving creative problem solving to recreate resource-intensive effects with more efficient tricks.
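To put those targets in perspective, a frame rate converts directly into a per-frame time budget: 1000 ms divided by the FPS target. The short Python sketch below does that arithmetic for the three headsets mentioned above. It only computes the budget and does not model stereo rendering, though both eye views have to fit inside that same window.

```python
# Per-frame time budget in milliseconds = 1000 ms / target frame rate.
TARGETS = {"DK1": 60, "DK2": 75, "Crescent Bay": 90}


def frame_budget_ms(fps: float) -> float:
    """Return how many milliseconds each frame may take at the given FPS."""
    return 1000.0 / fps


for headset, fps in TARGETS.items():
    print(f"{headset}: {fps} FPS -> {frame_budget_ms(fps):.2f} ms per frame")
# DK1: 60 FPS -> 16.67 ms per frame
# DK2: 75 FPS -> 13.33 ms per frame
# Crescent Bay: 90 FPS -> 11.11 ms per frame
```

Going from 60 to 90 FPS cuts the time available for each frame by a third, from roughly 16.7 ms to about 11.1 ms.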


Whiting, who jokingly referred to optimization as “dark magic,” turned the stage over to Donaldson around 17:48 into the session.

“Couch Knights was barely running at 75Hz,” Donaldson said, referring to the last demo Epic built for the Oculus Rift DK2, shown during GDC 2014. The task ahead, building an action-packed scene for VR and getting it to run at 90 FPS, was daunting.

Donaldson says that Showdown was put together in just five weeks by a five-person team. Granted, they did borrow assets made for other demos, but Donaldson says that during the optimization process they ended up needing to touch nearly every asset to get the scene to 90 FPS.

When it comes to optimizing, Donaldson advises developers to “rip the band-aid off early.” Find out what’s killing your framerate. The tools built into UE4 can be particularly helpful for this.

“CPU profiling tool… this is a gem, it’s awesome and no one really knows about it. What it lets you do is capture a preview of the scene and then you can actually find out down to the draw-call level exactly what’s costing you what,” Donaldson said. The GPU profiler is also helpful. Above all, it’s important to understand where your bottleneck is, so you don’t spend all your time trimming the GPU’s workload only to discover that the CPU is the main cause of the slowdown.
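A minimal sketch of that bottleneck check, assuming per-frame timings like those UE4’s `stat unit` console command reports for the game thread, the draw (render) thread and the GPU. The `FrameTimings` class, the helper functions and the sample numbers are hypothetical, made up for illustration. The sketch assumes these three stages run roughly in parallel, so the slowest one largely sets the frame time.

```python
from dataclasses import dataclass


@dataclass
class FrameTimings:
    """Hypothetical per-frame costs in milliseconds, loosely mirroring `stat unit`."""
    game_ms: float  # CPU: game thread
    draw_ms: float  # CPU: render (draw) thread
    gpu_ms: float   # GPU work for the frame


def bottleneck(t: FrameTimings) -> str:
    """Return the stage with the largest cost, i.e. the likely bottleneck.

    Assumes the game thread, draw thread and GPU work roughly in parallel,
    so the slowest of the three dominates the overall frame time.
    """
    costs = {
        "CPU (game thread)": t.game_ms,
        "CPU (draw thread)": t.draw_ms,
        "GPU": t.gpu_ms,
    }
    return max(costs, key=costs.get)


def over_budget(t: FrameTimings, target_fps: float = 90.0) -> bool:
    """True if the slowest stage exceeds the per-frame budget (1000 ms / FPS)."""
    return max(t.game_ms, t.draw_ms, t.gpu_ms) > 1000.0 / target_fps


# Made-up sample numbers for illustration only.
sample = FrameTimings(game_ms=6.0, draw_ms=12.5, gpu_ms=9.0)
print(bottleneck(sample))   # CPU (draw thread)
print(over_budget(sample))  # True
```

In that hypothetical frame, trimming GPU work would not help: the draw thread, whose cost in UE4 often tracks the number of draw calls, is what blows the roughly 11.1 ms budget for 90 FPS.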

Some of the old tricks that developers have been using to create low-cost effects don’t necessarily work in VR though.

“There’s this whole visual language of graphics rendering stuff that we’ve kind of used over the last few years that no longer work, so we have to build things out of real geometry,” Donaldson said. He says that “creative problem solving” is key to optimization.

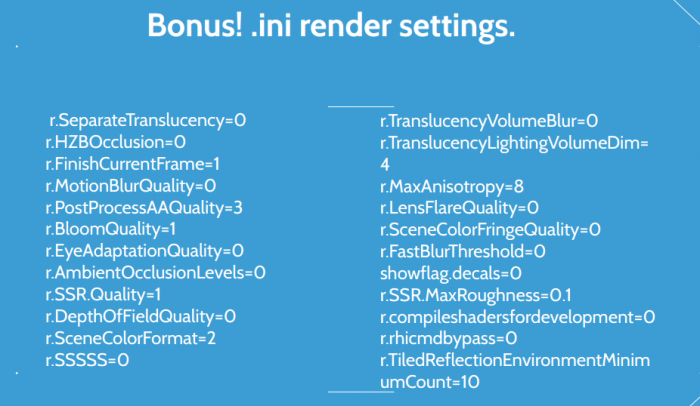

At the end of the segment, Donaldson shared a list of .ini render settings for UE4. He told me that “Unreal tends to default to ‘badass’ kind of settings,” which are not necessarily the ones you want if you’re looking for top performance.

“For any of the r.X settings, you can type r.SettingName ? and it will give you a description of what it does (in game), so it’s worth exploring each of those for better understanding,” Donaldson told me.
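For readers unfamiliar with where such settings live: UE4 can apply console-variable overrides from a `[SystemSettings]` section of a config file such as `DefaultEngine.ini`. The Python sketch below only renders such a section as text. The specific cvars and values are hypothetical examples of post-processing features one might switch off while profiling; they are not the list from Donaldson’s slide, and `render_system_settings` is a made-up helper.

```python
import configparser
import io

# Hypothetical example overrides; NOT the settings Donaldson presented.
VR_PERF_CVARS = {
    "r.MotionBlurQuality": "0",
    "r.DepthOfFieldQuality": "0",
    "r.LensFlareQuality": "0",
    "r.SceneColorFringeQuality": "0",
}


def render_system_settings(cvars: dict) -> str:
    """Render console-variable overrides as a [SystemSettings] ini section."""
    parser = configparser.ConfigParser()
    parser.optionxform = str  # keep cvar capitalization (default lowercases keys)
    parser["SystemSettings"] = cvars
    buf = io.StringIO()
    parser.write(buf, space_around_delimiters=False)  # emit name=value pairs
    return buf.getvalue()


print(render_system_settings(VR_PERF_CVARS))
```

Setting `optionxform` to `str` preserves the cvar names’ capitalization, which `configparser` would otherwise lowercase, and `space_around_delimiters=False` writes compact `name=value` lines. Typing any of these names followed by `?` in the in-game console, as Donaldson describes, shows what each one does before you commit it to a config file.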

Donaldson recommends that developers check out the Performance and Profiling documentation page to get started using UE4’s tools for optimization.

Epic has been aggressively adapting their engine to be friendly for Oculus Rift development, and this inside look at optimizing in UE4 will surely be helpful to VR developers. Learning the “dark magic” of optimization is going to be especially important for those hoping to create games and experiences on Samsung’s Gear VR.