Researchers at Stanford University have unveiled a prototype VR headset which they claim reduces the effects of VR sickness through the use of an innovative ‘stacked’ display that generates naturalistic light fields.

VR display technology continues to evolve rapidly, with each new generation of headset offering better image quality on the road to an ever more immersive experience. Right now, however, development has concentrated on making so-called ‘flat plane’ 3D displays with less latency, blurring and ghosting.

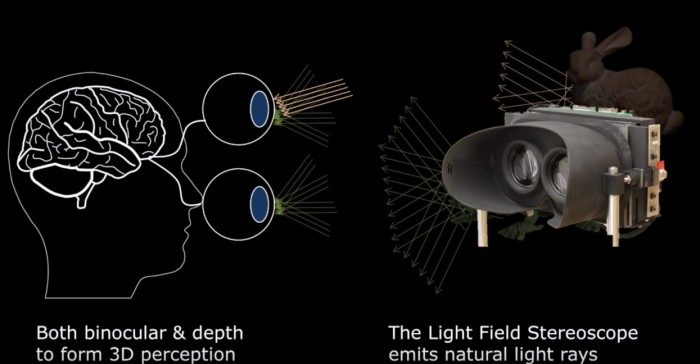

Low-persistence OLED displays, for example, first appeared publicly at CES 2014 in Oculus’ Crystal Cove feature prototype. But these displays still offer an artificially rendered stereoscopic view of virtual worlds, one that has binocular stereoscopic depth but doesn’t allow the user’s eyes to focus on different depth planes. Essentially, it’s 3D on a flat plane.

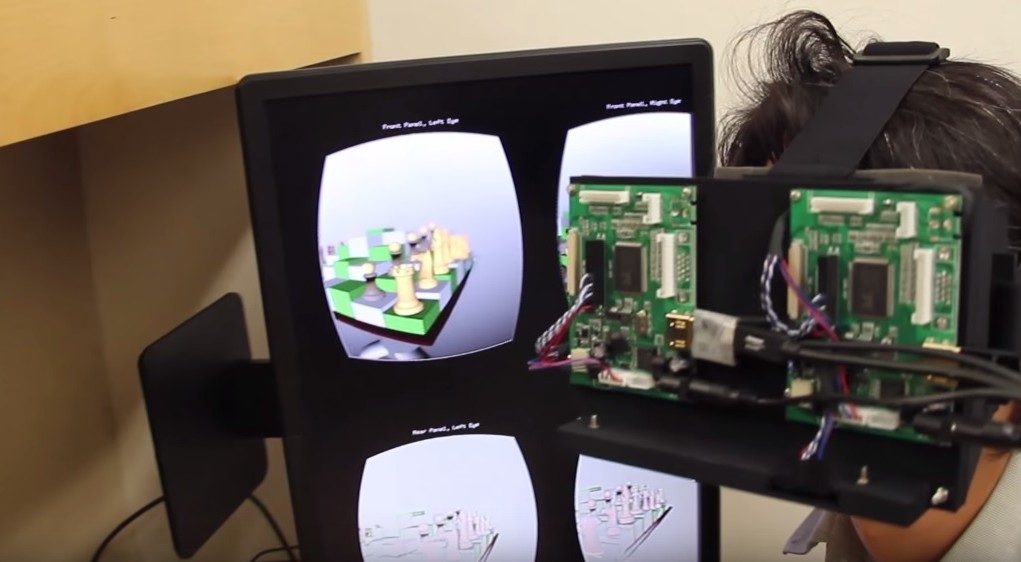

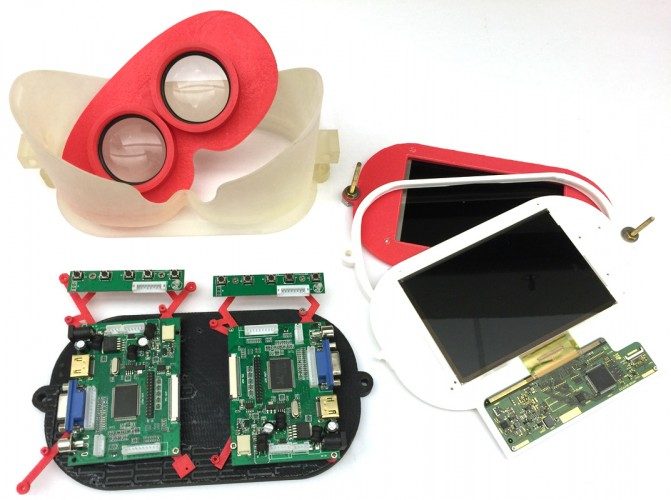

Now, a team of researchers at Stanford University claims to have taken the first step towards a more naturalistic and comfortable virtual reality display. Their system stacks off-the-shelf transparent LCD panels, one in front of the other, to generate a light field for each eye that includes proper depth information. The front and rear panels combine multiplicatively rather than additively: light from the backlight is attenuated by each panel in turn. When the resulting image reaches the user’s eyes, objects at different depths can be brought naturally into focus.
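To make ‘multiplicative rather than additive’ a little more concrete, here is a minimal Python sketch built on simplifying assumptions of our own. It uses a 1D (‘flatland’) light field in which each ray is identified by the rear pixel `a` and the front pixel `b` it passes through, so the light reaching the eye along that ray is `rear[a] * front[b]`. Choosing the two panel patterns then becomes a rank-1 nonnegative factorization of the target light field, solved here with Lee and Seung style multiplicative updates plus clipping to each panel’s [0, 1] transmittance range. The function name `factorize_two_layers`, the `weights` mask (marking which rays enter the pupil) and the solver itself are hypothetical illustrations, not the Stanford team’s published algorithm.

```python
import numpy as np


def factorize_two_layers(target, weights, iters=200, eps=1e-9):
    """Approximate target[a, b] by rear[a] * front[b] (weighted rank-1 NMF).

    Hypothetical illustration, not the researchers' method.
    target  : (n_rear, n_front) desired ray intensities in [0, 1]
    weights : same shape; 1 where ray (a, b) enters the pupil, 0 otherwise
    """
    rng = np.random.default_rng(0)
    n_rear, n_front = target.shape
    # Start both panels at random, strictly positive transmittances.
    rear = rng.uniform(0.5, 1.0, n_rear)
    front = rng.uniform(0.5, 1.0, n_front)
    wt = weights * target
    for _ in range(iters):
        # Multiplicative update for the rear panel, holding the front fixed.
        approx = np.outer(rear, front)
        rear = rear * (wt @ front) / ((weights * approx) @ front + eps)
        rear = np.clip(rear, 0.0, 1.0)  # an LCD cannot transmit more than 100%
        # Multiplicative update for the front panel, holding the rear fixed.
        approx = np.outer(rear, front)
        front = front * (wt.T @ rear) / ((weights * approx).T @ rear + eps)
        front = np.clip(front, 0.0, 1.0)
    return rear, front


if __name__ == "__main__":
    # Hypothetical 4x4 target; only rays with |a - b| <= 1 reach the pupil.
    target = np.array([[0.9, 0.6, 0.0, 0.0],
                       [0.5, 0.4, 0.3, 0.0],
                       [0.0, 0.2, 0.5, 0.4],
                       [0.0, 0.0, 0.3, 0.8]])
    idx = np.arange(4)
    weights = (np.abs(idx[:, None] - idx[None, :]) <= 1).astype(float)
    rear, front = factorize_two_layers(target, weights)
    err = np.abs(weights * (np.outer(rear, front) - target)).max()
    print("rear:", rear.round(3), "front:", front.round(3), "max error:", round(err, 3))
```

Because each ray sees the product of two transmittances, two panels cannot reproduce every possible light field exactly; a solver like this one only finds the closest rank-1 approximation over the rays that actually enter the eye.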

Because the image no longer relies solely on binocular stereoscopic imagery (that is, one image per eye on a single plane), the eyes can respond to and rest at natural focal cues. This should reduce fatigue, as it more closely replicates how we perceive the world in real life. At least that’s the theory. You can see it in practice in the video above, where the team use a camera with a 5mm aperture to simulate focussing at different depths. It’s impressive-looking stuff.

The team behind the research, headed up by Fu-Chung Huang, Kevin Chen and Gordon Wetzstein, claims its new light field stereoscope might allow users to enjoy immersive experiences for much longer periods, opening the door to lengthy virtual reality play sessions.

One open question remains. Although this technology impressively adds natural depth perception to VR displays, it relies on LCD panels, which current-generation headsets avoid because their high persistence leads to motion blur. OLED’s extremely fast pixel switching time allows both minimal motion blur and low persistence, where each frame is lit for only a fraction of the refresh interval to combat smearing during fast movement. It’s not yet clear which technology presents the greater long-term benefit to the consumer VR industry.
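As a rough illustration of why persistence matters, the smear a viewer sees on a world-locked object during a head turn can be approximated as angular head speed multiplied by the time each frame stays lit: the eyes counter-rotate to track the world while the lit image stays fixed on the panel. The Python sketch below assumes a 90 Hz refresh, a 2 ms low-persistence flash and a 100°/s head turn. These figures are illustrative assumptions, not numbers from the Stanford research, and the model ignores the additional blur caused by slow LCD pixel switching.

```python
def smear_degrees(head_speed_deg_per_s, persistence_ms):
    """Approximate angular smear (degrees) of a world-locked object.

    Simplified model: while a frame is lit, the image is fixed on the panel
    but the eyes rotate to track the world, so the image slides across the
    retina by (head speed * lit time). Pixel switching time is ignored.
    """
    return head_speed_deg_per_s * persistence_ms / 1000.0


refresh_hz = 90.0                     # assumed refresh rate
full_ms = 1000.0 / refresh_hz         # full persistence: lit for the whole frame (~11.1 ms)
low_ms = 2.0                          # assumed low-persistence flash
head_speed = 100.0                    # assumed head rotation speed, deg/s

print(f"full persistence: {smear_degrees(head_speed, full_ms):.2f} deg")  # 1.11 deg
print(f"low persistence:  {smear_degrees(head_speed, low_ms):.2f} deg")   # 0.20 deg
```

Under these assumptions, cutting the lit time from a full frame to a brief flash shrinks the smear by roughly a factor of five, which is why headset makers have favoured fast-switching OLED panels.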

The unit is now being developed further at NVIDIA Research and will be demonstrated at SIGGRAPH 2015 in Los Angeles next week. It’ll be interesting to see where the GPU giant takes this groundbreaking research next.

You can read more about this research and download the papers here.