Realities wants to transport viewers to exciting places across the globe in ways that are much more immersive than the single, flat vantage point of a 360 degree photo or video. The company is creating an efficient photogrammetry pipeline to build sometimes frighteningly realistic virtual environments that users can actually walk through.

I recently visited the Realities team to check out their very latest work. After donning a Vive Pre headset and playing around for a solid 10 or 15 minutes, I was thoroughly impressed… and I hadn’t even left the menu.

No One Said Menus in VR Have to Be Boring

Realities wants to capture exciting places around the world and allow anyone to visit. Encapsulating this sense of global teleportation, the developers have created a menu which takes the form of a detailed model of the Earth floating amidst a sea of stars and our nearby Sun. I could have clicked on one of the nodes on the globe to teleport to the corresponding scene, but I was far too fascinated with the planet itself.

Starting at about the size of a beach ball, the Earth in front of me looked like one of those amazing NASA photos showing the entire sphere of the planet within the frame. There’s a good reason it looked that way: it was constructed using assets from NASA, including real cloud imagery and accurate elevation data for the Earth’s continents (which was exaggerated to allow users to make out the detailed terrain from their satellite vantage point). The dark side of the globe also accurately lights up with a brilliant, warm, grid-like glow of city lights scattered across the planet’s surface.

With the Vive’s controllers I was able to reach out and manipulate the globe by spinning it, moving it, and doing a pinch-zoom gesture to make it bigger or smaller. I grabbed the Earth and lifted it over my head to look at it from below; for the first time in my life, I truly got a sense of the way in which South America really curves down under the Earth as it reaches toward Antarctica. I’d never seen—or I suppose ‘felt’—the shape of the continent in that way before, not even when playing with one of those elementary school globes in the real world.
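For readers curious how a two-handed pinch-zoom like this might work, here is a minimal sketch. It is not Realities' implementation; it simply assumes the globe's scale follows the ratio of the current distance between the two controllers to the distance when the gesture began. The function name `pinch_scale` and the `min_scale`/`max_scale` limits are hypothetical.

```python
import math

def pinch_scale(initial_scale, start_left, start_right,
                cur_left, cur_right, min_scale=0.1, max_scale=10.0):
    """Return a new object scale for a two-controller pinch gesture.

    Positions are (x, y, z) tuples in meters. The clamp limits are
    illustrative assumptions, not values from Realities.
    """
    start_dist = math.dist(start_left, start_right)
    cur_dist = math.dist(cur_left, cur_right)
    if start_dist == 0:
        # Controllers started at the same point; avoid dividing by zero.
        return initial_scale
    scaled = initial_scale * cur_dist / start_dist
    # Keep the globe within a sensible size range.
    return min(max(scaled, min_scale), max_scale)

# Hands start 20 cm apart and move to 40 cm apart: the globe doubles in size.
new_scale = pinch_scale(1.0, (0, 0, 0), (0.2, 0, 0), (0, 0, 0), (0.4, 0, 0))
```

Using a ratio rather than an absolute distance means the globe never jumps in size the moment the gesture starts, whatever its current scale.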

I went looking for Hawaii, where I once lived for a year. After spotting the lonesome island chain in the middle of the Pacific I wanted to zoom right down to street-level to see my stomping grounds. When I couldn’t zoom in much further than the ‘state view’, I had to remind myself that this was, after all, just the menu. At this point I realized I’d been playing around with it for 10 or 15 minutes and hadn’t even stepped inside Realities’ actual product.

So I swung the globe around to find a highlighted node in Europe (where the Realities devs are from, and where many of their early scenes were captured) and clicked to teleport.

Stepping Inside a Virtually Real Virtual Environment

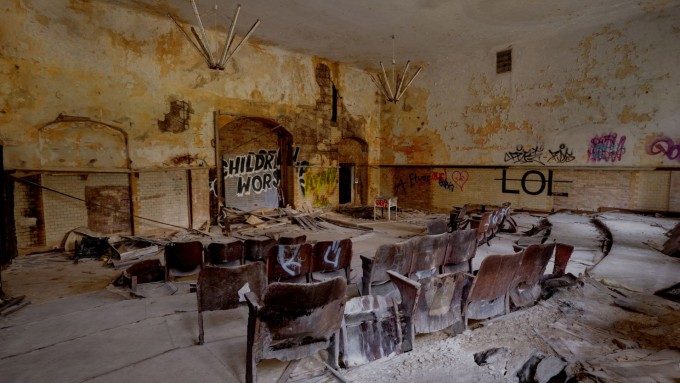

When the scene faded in, I found myself in a small, dilapidated auditorium. Now, this would have been a ‘neat’ place to see in the form of a high resolution 360 photo. But with Realities, the scene around me not only had depth, it was actually made of real 3D geometry and was fully navigable, either by physically walking around the Vive’s room-scale space or by pointing with the controller and clicking to blink from one spot to the next. There’s a massive gulf in immersion between just seeing a space like this in a 360 photo and being inside a detailed recreation where I can walk around and kneel down to see the dust on the floor.

For the most part, the scene around me was more detailed and realistic looking than any other virtual world I’ve been inside. This is thanks to the photogrammetric technique that Realities is using to capture these spaces, which involves the use of high resolution photography to recreate a very accurate model of the real space.

The developers joked that they get to “outsource the lighting engine to the real world,” since the scene is lit as desired in real life before capture. With photogrammetry, the real world also handles the jobs of texture artist and 3D modeler (with a little help from someone like Realities who has to find a way to pull it all into the computer).

The results are only as good as the pipeline though; Realities have made big improvements since the last time we saw their work. Inside the crumbling auditorium I could see the debris scattered across the room with detail that would be simply unfathomable to craft by hand in a 3D modeling program in the time it took Realities to capture the space.

Even the most minute details were captured and shown as fully realized geometry. As I approached the walls I could see the flecks of paint curling and flaking off after decades of neglect. The graffiti-covered walls revealed individual strokes of paint up close, and scattered about the debris I spotted the discarded yellow cap of a can of spray paint. The developers told me people often find tiny details—like a pack of cigarettes and a lighter—that they themselves haven’t spotted before. No joke… a scavenger hunt in a space like this would probably be a lot of fun.

An older capture of the famous Las Vegas sign

In some areas where adequate data wasn’t captured (like in tight nooks where it’s hard to fit a camera) the geometry will look sort of like a janky 3D capture from the original Kinect, with stretched textures and unearthly geometry, especially on very fine edges. Some of this is hidden by the fact that the real space I was looking at is quite derelict, so it’s already a chaotic mess in the first place. Still, the Realities team has shown that wherever they can capture adequate data, they are capable of spitting out richly detailed geometry on both the macro and micro scales.

I should be a bit more clear about the locomotion method employed by Realities. It isn’t a ‘blink’ as much as a ‘zoom’. When you point and click, you traverse from A to B almost, but not quite, instantly. You still see the world move around you, but it’s very fast. So fast, the developers tell me, it’s beyond normal human reaction time. So while you still see it, by the time you can react to it, you have reached your destination. That’s the hypothesis at least, and in my testing it seems to have the benefits of nausea-free blinking while helping keep you better oriented between where you are and where you came from, instead of just teleporting instantly from A to B.
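To make the ‘zoom’ idea concrete, here is a rough sketch of how such a dash could be computed each frame. This is an illustration under stated assumptions, not Realities' code: the function name `dash_position` is hypothetical, the movement is assumed to be a straight line at constant speed, and the 0.1 second duration is a placeholder chosen only to sit below a typical reaction time (the developers did not give me a figure).

```python
# Hypothetical dash length in seconds; an assumption, not a number from Realities.
DASH_DURATION_S = 0.1

def dash_position(start, end, elapsed, duration=DASH_DURATION_S):
    """Return the viewer position `elapsed` seconds into a dash.

    `start` and `end` are (x, y, z) tuples. The viewer moves in a
    straight line and stops exactly at `end` once the dash is over.
    """
    if duration <= 0:
        # Degenerate case: behave like an instant teleport.
        return tuple(float(e) for e in end)
    # Fraction of the dash completed, clamped to the range [0, 1].
    t = min(max(elapsed / duration, 0.0), 1.0)
    return tuple(s + (e - s) * t for s, e in zip(start, end))

# Halfway through the dash, the viewer is halfway between A and B.
midpoint = dash_position((0.0, 0.0, 0.0), (2.0, 0.0, 4.0), elapsed=0.05)
```

Calling this once per rendered frame with the time since the click would move the world past the viewer for a handful of frames, which is the brief glimpse of motion that separates this approach from an instant teleport.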