AMD has announced TrueAudio Next, a “scalable”, physics-based audio rendering engine for generating environmentally accurate, GPU-accelerated audio for virtual reality.

AMD has announced a set of key technologies to bolster GPUOpen, its open source technology initiative, this time in the field of immersive VR audio. TrueAudio Next, AMD claims, provides “real-time dynamic physics-based audio acoustics rendering” and allows any soundscape to be modelled physically, taking reflection and occlusion into account.

With GPUOpen and LiquidVR, AMD continues to pitch its tent in the open source camp. This is a reaction to the approach of its main rival NVIDIA, which focuses largely on proprietary GameWorks VR (now known as VRWorks) initiatives and technologies that are locked to its own GPU hardware and drivers, i.e. things that will only work if you develop for and buy its graphics cards.

“We are excited about the potential of TrueAudio Next,” says Sasa Marinkovic, Head of VR and Software Marketing at AMD. “It enables developers to integrate realistic audio into their VR content in order to achieve their artistic vision, without compromise. Combining this with AMD’s commitment to work with the development community to create rich, immersive content, the next wave of VR content can deliver truly immersive audio – that will sound and feel real.”

AMD’s GPUOpen and LiquidVR are broadly equivalent to NVIDIA’s GameWorks and VRWorks in that both provide frameworks upon which to build VR games. The difference is that with AMD’s offerings, a developer who wants to poke around in the source to work out why something behaves a certain way can download it straight from GitHub.


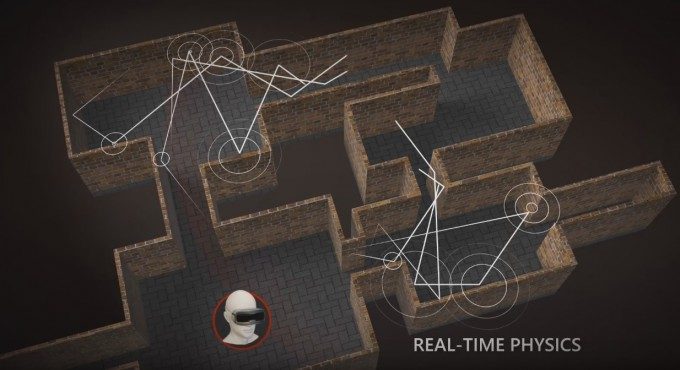

AMD’s TrueAudio Next is built atop Radeon Rays (formerly AMD FireRays), the company’s highly efficient, GPU-accelerated ray tracing software. Although traditionally used for graphics rendering, ray tracing also has important uses in audio, where it can model the physical properties of a virtual environment and the way sound resonates within it. This means more accurate, realistic sound for virtual reality applications and games and, in theory, a better chance of achieving psychological immersion.
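To give a rough idea of what “physics-based” audio means, here is a minimal Python sketch showing how a single wall reflection reaches the listener later and quieter than the direct sound. It is an illustration only, not TrueAudio Next or Radeon Rays code: it uses the image-source method, a simpler deterministic relative of acoustic ray tracing, and assumes a constant speed of sound of 343 m/s, inverse-distance (1/r) amplitude loss and a hypothetical wall absorption coefficient.

```python
import math

# Assumption: speed of sound in air at roughly 20 °C, treated as constant.
SPEED_OF_SOUND = 343.0  # metres per second


def path(source, listener):
    """Return (delay_seconds, gain) for a straight-line path.

    Gain follows a simple 1/r spherical-spreading law (an assumption of this
    sketch); the distance is clamped to avoid dividing by zero.
    """
    distance = math.dist(source, listener)
    return distance / SPEED_OF_SOUND, 1.0 / max(distance, 1e-6)


def reflect_across_plane_x(point, wall_x):
    """Mirror a 3D point across the plane x = wall_x to get its 'image source'."""
    x, y, z = point
    return (2.0 * wall_x - x, y, z)


def first_order_reflection(source, listener, wall_x, absorption=0.3):
    """Delay and gain of one bounce off the wall x = wall_x (image-source method).

    `absorption` is a hypothetical fraction of energy absorbed by the wall, so
    the reflected amplitude is scaled by sqrt(1 - absorption).
    """
    image = reflect_across_plane_x(source, wall_x)
    delay, gain = path(image, listener)
    return delay, gain * math.sqrt(1.0 - absorption)


if __name__ == "__main__":
    src, lis = (1.0, 0.0, 0.0), (4.0, 0.0, 0.0)
    print("direct:    ", path(src, lis))
    print("reflection:", first_order_reflection(src, lis, wall_x=0.0))
```

With a source at (1, 0, 0), a listener at (4, 0, 0) and a wall at x = 0, the direct sound travels 3 m (about 8.7 ms, gain 1/3), while the reflection travels 5 m via the mirrored source (about 14.6 ms) and arrives noticeably quieter. A full acoustics renderer would trace many such paths, including higher-order bounces and occluded ones, and combine them into an impulse response; doing that for complex scenes in real time is the kind of workload a GPU ray tracer is suited to.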