Leap Motion has announced early access to the beta of its Interaction Engine, a set of systems designed to help developers implement compelling, realistic and hopefully frustration-free input using just the hands.

If you’ve ever spent time with a physics-sandbox title, you’ll know that a large part of the appeal is the satisfaction and freedom of playing within a virtual world that behaves somewhat like reality – with none of the real-world restrictions applied. But this presents myriad problems, not least of which is that physics modelled on the real world break down when physicality is removed. Without physical boundaries in place, objects on the virtual plane will behave according to the digital physics model, right up to the point you accidentally put your digital self through said objects – at which point things kinda break down.

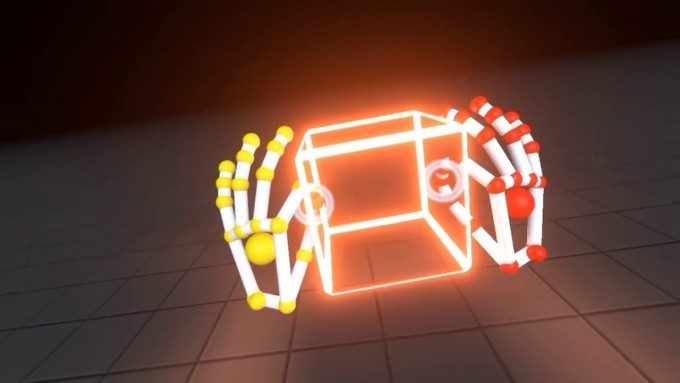

These issues are particularly acute when it comes to integrating naturalistic hand interaction with a digital space and its objects, for example in VR. Bridging the “gray area” between accuracy and what ‘feels good’ to a human being is part of that elusive magic you encounter when an input interface just works. More specifically, in the case of VR, that bridging involves implementing an alternative set of rules when a player connects with and grasps a virtual object in 3D space, bending reality’s rules in favour of a visual experience that more closely matches our expectations of what should happen.

[gfycat data_id=”AdventurousAncientBrahmanbull” data_autoplay=true data_controls=false]

These are all issues that Leap Motion, the company best known for its depth-sensing peripheral of the same name, have been grappling with for many months now, and their Interaction Engine aims to remove a lot of the pain for developers by providing a framework that “exists between the Unity game engine and real-world hand physics.”

[gfycat data_id=”UnripeFancyAndeancockoftherock” data_autoplay=true data_controls=false]

The last time we encountered Leap Motion, they showed us the first glimpses of their work to boil down such an enormously complex set of problems into something that developers can interface with easily. At CES in January, the Leap Motion team let us get our hands on Orion, along with an early version of their Interaction Engine. Orion was a significant milestone for the company’s overall tracking framework, with impressive reductions in tracking latency and improvements in the system’s ability to handle hand-tracking issues.

Leap Motion’s release of the Interaction Engine beta completes another piece of the peripheral-free VR input puzzle that the company has dedicated itself to over the last couple of years.

“The Interaction Engine is designed to handle object behaviors as well as detect whether an object is being grasped,” reads a recent blog post introducing the Interaction Engine. “This makes it possible to pick things up and hold them in a way that feels truly solid. It also uses a secondary real-time physics representation of the hands, opening up more subtle interactions.”

Leap Motion have always had a knack for presenting the complex ideas involved in their work in a visual way that is immediately graspable by the viewer. These latest demos illustrate the user-friendly fuzzy logic that Leap Motion believe strikes a neat balance between believable virtual reality and frustration-free human-digital interaction.

The Interaction Engine represents another milestone for Leap Motion on its quest to achieve hardware-free, truly human input. And if you’re a developer, it’s something you can get your hands on right now, as the beta is available for download here. You can also read all about it here and chat with others doing the same.