It turns out that rendering stereoscopic 3D images is not as simple as slapping two slightly different views side by side, one for each eye. A lot of nuance goes into rendering a 3D view that properly mimics real-world vision, and a lot can go wrong if you aren’t careful. Oliver Kreylos, a VR researcher at UC Davis, emphasizes the importance of proper stereoscopic rendering and has a great introduction to 3D rendering for Oculus Rift developers.

The dangers of poor stereoscopic 3D rendering range from eyestrain and headaches to users not feeling right in the virtual world.

The latter is the “biggest danger VR is facing now,” Kreylos told me. The effects of improper 3D rendering are subtle enough that the everyday first-time VR user won’t think, “this is obviously wrong, let me see how to fix it.” They’ll say instead, “I guess 3D isn’t so great after all, I’ll pass,” says Kreylos. This could be a major hurdle to widespread consumer adoption of virtual reality.

Oliver Kreylos is a PhD virtual reality researcher who works at the Institute for Data Analysis and Visualization, and the W.M. Keck Center for Active Visualization at the University of California, Davis. He maintains a blog on his VR research at Doc-Ok.org, where a few months back he showed us what it’s like to be inside of a CAVE.

Kreylos has a great introductory article about the ins and outs of proper stereoscopic 3D rendering: Good Stereo vs. Bad Stereo.

He also has an illuminating video that’s great for anyone not already versed in 3D:

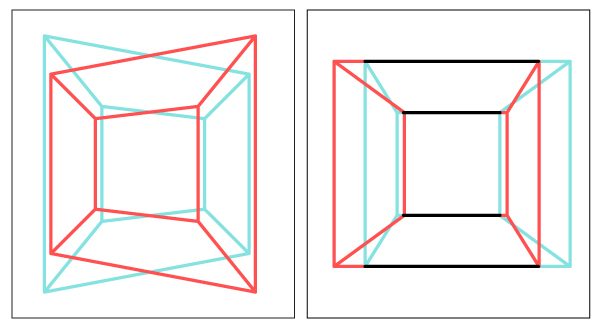

“…here’s the bottom line: Toe-in stereo is only a rough approximation of correct stereo, and it should not be used. If you find yourself wondering how to specify the toe-in angle in your favorite graphics software, hold it right there, you’re doing it wrong,” Kreylos wrote in Good Stereo vs. Bad Stereo.

“The fact that toe-in stereo is still used — and seemingly widely used — could explain the eye strain and discomfort large numbers of people report with 3D movies and stereoscopic 3D graphics. Real 3D movie cameras should use lens shift, and virtual stereoscopic cameras should use skewed frusta, aka off-axis projection. While the standard 3D graphics camera model can be generalized to support skewed frusta, why not just replace it with a model that can do it without additional thought, and is more flexible and more generally applicable to boot?” he concludes.
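To make the idea of skewed frusta concrete, here is a minimal Python sketch of how off-axis frustum bounds could be computed for each eye. It assumes a flat screen centered in front of the viewer and perpendicular to the view direction, with the eyes displaced only horizontally. The names `Frustum`, `off_axis_frustum`, and `stereo_frusta`, along with all the numbers, are hypothetical illustrations and are not taken from Kreylos’ article or any SDK.

```python
from dataclasses import dataclass


@dataclass
class Frustum:
    """Frustum bounds measured on the near plane (glFrustum-style)."""
    left: float
    right: float
    bottom: float
    top: float
    near: float
    far: float


def off_axis_frustum(eye_x, screen_width, screen_height,
                     screen_distance, near, far):
    """Bounds for an eye shifted horizontally by eye_x from the screen's
    center line. The eye keeps looking straight ahead; only the frustum
    is skewed so that its edges pass through the screen's edges."""
    # Similar triangles: scale screen-plane extents down to the near plane.
    scale = near / screen_distance
    left = (-screen_width / 2.0 - eye_x) * scale
    right = (screen_width / 2.0 - eye_x) * scale
    bottom = (-screen_height / 2.0) * scale
    top = (screen_height / 2.0) * scale
    return Frustum(left, right, bottom, top, near, far)


def stereo_frusta(eye_separation, screen_width, screen_height,
                  screen_distance, near, far):
    """Return (left_eye, right_eye) frusta for a centered viewer."""
    half = eye_separation / 2.0
    left_eye = off_axis_frustum(-half, screen_width, screen_height,
                                screen_distance, near, far)
    right_eye = off_axis_frustum(+half, screen_width, screen_height,
                                 screen_distance, near, far)
    return left_eye, right_eye


# Hypothetical setup: 0.6 m x 0.4 m screen, 1 m away, 64 mm eye separation.
left_eye, right_eye = stereo_frusta(0.064, 0.6, 0.4, 1.0, 0.1, 100.0)
print(left_eye)
print(right_eye)
```

Each eye’s frustum is asymmetric and the two are mirror images of each other. A toe-in setup would instead keep a symmetric frustum and rotate each camera toward a convergence point, which is the approach the quote above warns against.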

Oculus Rift SDK and Unity Have the Basics, but There’s More That Can Go Wrong

Kreylos, who has had some time to play with the Oculus Rift, tells me that Oculus and Unity have laid a great foundation for proper stereoscopic 3D thanks to the SDK.

“At the most basic, someone has to set up the proper camera parameters, i.e., projection matrices, to get the virtual world to show up on the screens just right. On the Rift, someone also has to do the lens distortion correction. Both these things are taken care of by the Rift SDK, by both the low-level C++ framework and the Unity3D binding. And as far as I can tell, both bindings do it correctly. It’s a bit more tricky in the Unity3D binding due to having to work around Unity’s camera model, but apparently they pulled it off.”
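As a rough illustration of what lens distortion correction involves, the sketch below applies a generic radial polynomial model, in which each point is scaled by a polynomial in its squared distance from the lens center. This is an assumption about the general shape of such a correction, not a description of the Rift SDK’s actual code. The function name `radial_distort` and the coefficient values are illustrative placeholders, not real calibration data.

```python
def radial_distort(x, y, coeffs=(1.0, 0.22, 0.24, 0.0)):
    """Scale a point (x, y), given relative to the lens center, by a
    polynomial in r^2: k0 + k1*r^2 + k2*r^4 + k3*r^6.

    The coefficients are placeholders chosen for illustration only."""
    k0, k1, k2, k3 = coeffs
    r2 = x * x + y * y
    # Horner form of the polynomial in r^2.
    scale = k0 + r2 * (k1 + r2 * (k2 + r2 * k3))
    return x * scale, y * scale


# Points farther from the center are pushed outward more strongly.
for radius in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(radius, radial_distort(radius, 0.0))
```

In a real renderer this kind of mapping would typically run per pixel in a shader to pre-warp the image so that it looks undistorted when viewed through the lens.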

Kreylos checked his initial impressions against the SDK source code.

“For the Rift SDK, I went the source code route. I found the bits of code that set up the projection matrices, and while they’re scattered all over the place, I did find the lines that set up a skewed-frustum projection using calibration parameters read from the Rift’s non-volatile RAM during initialization. That was very strong evidence that the Rift SDK uses a proper stereo model. I then compared the native SDK display to the Unity display, and they looked as much the same as I could tell, so I’m confident about the Unity binding as well.”

“Any software based either on the low-level SDK or the Unity binding should therefore have the basics right,” he added.

But there’s more that can go wrong, so developer vigilance is required.

“A lot of 3D graphics software does things to the virtual camera that they can only get away with because normal screens are 2D, such as squashing the 3D model in the projection direction for special effects, rendering HUD or other UI elements to the front- or backplane, using oblique frusta to cheaply implement clipping planes, etc. All those shortcuts stop working in stereo or in an HMD. And those tricks are done deeply inside game engines, or even by applications themselves. Meaning there are additional pitfalls beyond the basic stereo setup,” he said.
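One way to see why a HUD drawn on the front plane stops working in stereo is to compute its on-screen parallax, the horizontal offset between where the left and right eyes see the same point on the screen plane. The sketch below uses the similar-triangles relation p = e · (d − s) / d for eye separation e, screen distance s, and object distance d. It assumes a simplified flat-screen viewing geometry, and the function name and numbers are hypothetical.

```python
def screen_parallax(eye_separation, screen_distance, object_distance):
    """Horizontal offset on the screen plane between the right-eye and
    left-eye images of a point straight ahead at object_distance.

    Zero means the point appears on the screen plane, positive means
    behind it, and negative means in front of it (crossed parallax)."""
    if object_distance <= 0:
        raise ValueError("object_distance must be positive")
    return eye_separation * (object_distance - screen_distance) / object_distance


# Hypothetical numbers: 64 mm eye separation, screen plane 1 m away.
eye_sep, screen = 0.064, 1.0
for name, depth in (("HUD on near plane", 0.1),
                    ("object at screen depth", 1.0),
                    ("distant scenery", 50.0)):
    print(name, screen_parallax(eye_sep, screen, depth))
```

Under these assumptions a UI element placed on a near plane 10 cm away has a crossed parallax several times larger than the eye separation itself, whereas on a 2D screen the same element would simply look flat and cause no trouble.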

Kreylos gives the thumbs up to Oculus’ SDK documentation on correct stereoscopic 3D rendering, noting that it contains a “very detailed discussion” of the matter. You can find the latest Oculus Rift SDK documentation here (after logging in). Anyone building an Oculus Rift game from the ground up should absolutely consult this document to start understanding proper 3D rendering.