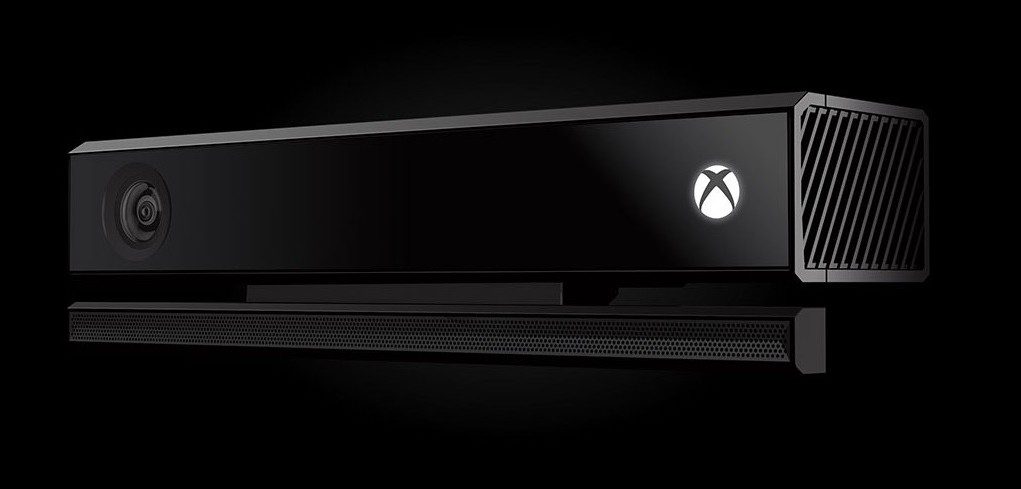

Sadly, there were no VR-related surprises from the big console manufacturers at E3 this year. However, Microsoft’s Kinect 2.0 could become a key virtual reality input device thanks to a rich set of features and substantially improved performance.

Back when Kinect launched in 2010, Microsoft positioned it as a high-tech input device that would revolutionize the way we play games. Unfortunately, it fell well short of that lofty goal. Part of the problem was that the original Kinect didn’t have the technical chops to deliver the experience that Microsoft was touting.

James Iliff, producer of Project Holodeck, told me back in 2012 that his team was attempting to use a quad-Kinect setup for skeletal avatar tracking, but had to abandon it for performance reasons.

“…the Kinect hardware is extremely lacking in fidelity. Every point the Kinect tracks is filled with unmanageable jitter, rendering the data useless for anything other than the most simple of interactions. We tried very hard to get around this with several software algorithms we wrote, to get multiple Kinects to communicate with each other, however this did not really make anything more accurate unfortunately.”

While the original Kinect wasn’t up to the task, the Kinect 2.0 might finally deliver on the technical promise that Microsoft made.

Wired has a great preview of the Kinect 2.0 showing off its impressive capabilities:

Thanks to greatly increased fidelity, the Kinect 2.0 can do much more than its predecessor. Here’s a short list of some of the things that the unit can sense, and tools that developers can make use of:

- 1080p color camera

- Active IR for light filtering

- Improved skeletal tracking

- Joint rotation

- Muscle/force

- Expressions Platform:

  - Heart rate estimation

  - Expression

  - Engagement

  - Looking Away (yes/no)

  - Talking

  - Mouth Moved

  - Mouth (open/closed)

  - Glasses (yes/no)

  - Independent right/left eye (open/closed)

Limitations of Original Kinect for Virtual Reality

For virtual reality, putting the player inside the game (known as ‘avatar embodiment’) is key. Avatar embodiment can’t be achieved in full without proper skeletal tracking.

Skeletal tracking is difficult with the original Kinect because of its low fidelity.

Oliver Kreylos is a PhD virtual reality researcher who works at the Institute for Data Analysis and Visualization, and the W.M. Keck Center for Active Visualization, at the University of California, Davis. He maintains a blog on his VR research at Doc-Ok.org.

Kreylos, who claims to own six Kinects (and has done his fair share of hacking), talks about the limitations of the original Kinect in a recent post on his blog.

“The bottom line is that in Kinect1, the depth camera’s nominal resolution is a poor indicator of its effective resolution. Roughly estimating, only around 1 in every 20 pixels has a real depth measurement in typical situations. This is the reason Kinect1 has trouble detecting small objects, such as finger tips pointing directly at the camera. There’s a good chance a small object will fall entirely between light dots, and therefore not contribute anything to the final depth image.”

Other systems for skeletal tracking are cost-prohibitive. Currently, many Oculus Rift/VR developers track hands with the Razer Hydra, but that’s a long way from full skeletal tracking.

Kinect 2.0 for Virtual Reality Input

From what we’ve seen so far, the Kinect 2.0 has the fidelity to provide proper skeletal tracking. In the Wired video (embedded above) the Kinect 2.0 was able to easily see the wrinkles on the player’s shirt (and even the buttons if close enough). Microsoft has been quiet about many of the technical specifications of the Kinect 2.0, but they claim to be using “proprietary Time-of-Flight technology, which measures the time it takes individual photons to rebound off an object or person to create unprecedented accuracy and precision.”
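To give a sense of the timescales involved in the time-of-flight claim, here is a minimal Python sketch of the idealized relationship between round-trip time and distance. It only illustrates the basic physics (light travels to the surface and back, so distance is half the total path); Microsoft has not described how the Kinect 2.0 actually performs the measurement, and the function names below are made up for illustration.

```python
# Idealized time-of-flight arithmetic. This is NOT Microsoft's implementation,
# only the textbook relationship: distance = speed_of_light * round_trip_time / 2.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by definition of the metre


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the distance (metres) to a surface given the light's round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The light travels out and back, so halve the total path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_from_distance(distance_m: float) -> float:
    """Return the round-trip time (seconds) for a surface at the given distance."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # Hypothetical living-room distances, chosen only for illustration.
    for d in (0.5, 2.0, 4.0):
        t = round_trip_from_distance(d)
        print(f"{d:.1f} m -> {t * 1e9:.2f} ns round trip")
```

A player standing two metres from the sensor corresponds to a round trip of roughly 13 nanoseconds, which hints at why this kind of sensing demands specialized hardware.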

Kreylos considers the implications:

“In a time-of-flight depth camera, the depth camera is a real camera (with a single real lens), with every pixel containing a real depth measurement. This means that, while the nominal resolution of Kinect2’s depth camera is lower than Kinect1’s, its effective resolution is likely much higher, potentially by a factor of ten or so. Time-of-flight depth cameras have their own set of issues, so I’ll have to hold off on making an absolute statement until I can test a Kinect2, but I am expecting much more detailed depth images…”

The ‘Expressions Platform’, which analyzes each player in the scene, should be very useful for developers. Heart rate estimation is particularly intriguing: imagine knowing the heart rate of the player in a horror game and changing the tempo of the music accordingly. The possibilities are plentiful!
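As a rough idea of what the horror-game example could look like in code, here is a minimal Python sketch that maps an estimated heart rate to a music tempo. Everything in it is an assumption made for illustration: the function names, the resting and peak heart rates, and the tempo range are all hypothetical, and nothing here reflects how the Kinect SDK will actually expose heart rate data.

```python
# Hypothetical mapping from a player's estimated heart rate to music tempo.
# All names and default values are illustrative assumptions, not a Kinect API.


def tempo_for_heart_rate(
    heart_rate_bpm: float,
    resting_bpm: float = 60.0,   # assumed calm heart rate
    peak_bpm: float = 160.0,     # assumed "terrified" heart rate
    min_tempo: float = 70.0,     # slowest music tempo, beats per minute
    max_tempo: float = 150.0,    # fastest music tempo, beats per minute
) -> float:
    """Linearly map heart rate onto a tempo range, clamping at both ends."""
    if peak_bpm <= resting_bpm:
        raise ValueError("peak_bpm must be greater than resting_bpm")
    fraction = (heart_rate_bpm - resting_bpm) / (peak_bpm - resting_bpm)
    fraction = max(0.0, min(1.0, fraction))  # clamp to [0, 1]
    return min_tempo + fraction * (max_tempo - min_tempo)


def smooth(previous: float, target: float, alpha: float = 0.1) -> float:
    """Move a fraction of the way toward the target so the tempo never jumps abruptly."""
    return previous + alpha * (target - previous)


if __name__ == "__main__":
    tempo = 70.0
    # Made-up heart rate readings standing in for sensor estimates.
    for reading in (62, 75, 98, 130, 155):
        tempo = smooth(tempo, tempo_for_heart_rate(reading))
        print(f"heart rate {reading} bpm -> music tempo {tempo:.1f} bpm")
```

The smoothing step is a design choice rather than a requirement: an estimated heart rate is likely to be noisy, and easing toward the target keeps the music from lurching with every reading.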

One unfortunate road bump for the Expressions Platform is that it won’t work if the player’s face is obscured. Much of the information comes from the player’s facial expressions (even the heart rate estimation is done by looking at micro-fluctuations of the skin on the player’s face). This means it’ll work well for CAVEs and some other systems, but not for head-mounted displays like the Oculus Rift.

Even without the Expressions Platform, high-fidelity, lag-free skeletal tracking (at commodity pricing) will allow players to fully embody their avatars, raising the bar of immersion that much more.

Kinect 2.0 for Windows Developer Kit Program Accepting Applications

For the developers among you, Microsoft has announced a developer kit program that lets you get your hands on the Kinect 2.0 for Windows and the upcoming SDK. You have until July 31st to apply to the program. $399 will get you pre-release Kinect 2.0 hardware in November, along with a final version of the hardware once it’s released.