Manipulating objects with bare hands lets us leverage a lifetime of physical experience, minimizing the learning curve for users. But there are times when virtual objects will be farther away than arm’s reach, beyond the user’s range of direct manipulation. As part of its interactive design sprints, Leap Motion, creators of the hand-tracking peripheral of the same name, prototyped three ways of effectively interacting with distant objects in VR.

Guest Article by Barrett Fox & Martin Schubert

Barrett is the Lead VR Interactive Engineer for Leap Motion. Through a mix of prototyping, tools and workflow building with a user-driven feedback loop, Barrett has been pushing, prodding, lunging, and poking at the boundaries of computer interaction.

Martin is Lead Virtual Reality Designer and Evangelist for Leap Motion. He has created multiple experiences such as Weightless, Geometric, and Mirrors, and is currently exploring how to make the virtual feel more tangible.

Barrett and Martin are part of the elite Leap Motion team presenting substantive work in VR/AR UX in innovative and engaging ways.

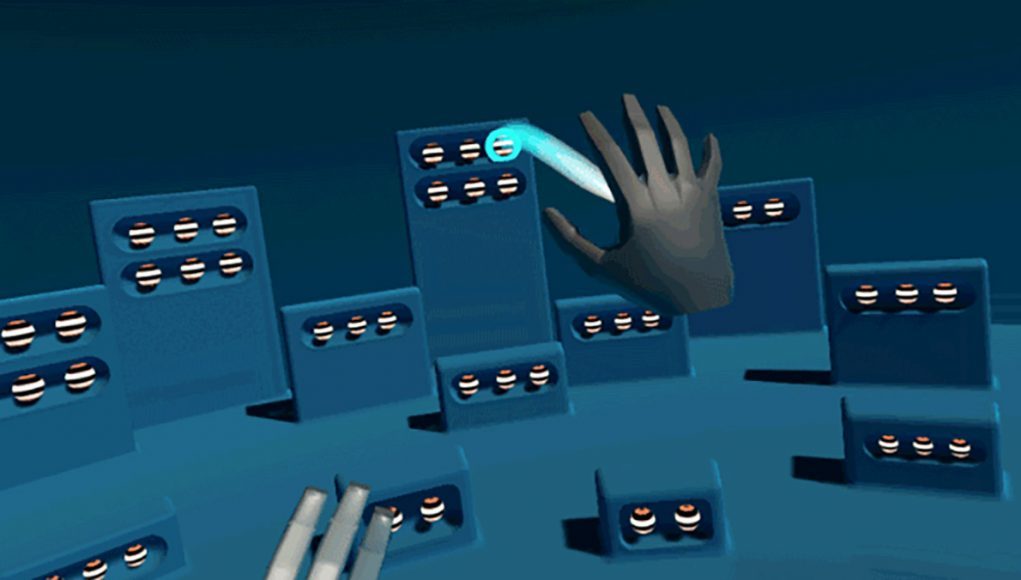

Experiment #1: Animated Summoning

The first experiment looked at creating an efficient way to select a single static distant object and then summon it directly into the user’s hand. After inspecting or interacting with it, the user can dismiss the object, sending it back to its original position. A typical use case would be selecting and summoning an object from a shelf and then having it return automatically, which is useful for gaming, data visualization, and educational simulations.

This approach involves four distinct stages of interaction: selection, summoning, holding/interacting, and returning.
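The four stages can be pictured as a small state machine. The sketch below is an illustration under stated assumptions, not Leap Motion’s implementation: the `Stage` names and event strings such as `"grab_confirmed"` are hypothetical, and it omits the case where the object is released within arm’s reach and simply placed in the world.

```python
from enum import Enum, auto


class Stage(Enum):
    IDLE = auto()       # nothing selected; distant objects can be targeted
    SELECTED = auto()   # a distant object was confirmed with a grab pose
    SUMMONING = auto()  # finger curl is pulling the object toward the hand
    HELD = auto()       # object is in hand; summoning logic is inactive
    RETURNING = auto()  # released at arm's length, animating back to its anchor


# Hypothetical event names: (current stage, event) -> next stage.
TRANSITIONS = {
    (Stage.IDLE, "grab_confirmed"): Stage.SELECTED,
    (Stage.SELECTED, "palm_up"): Stage.SUMMONING,
    (Stage.SUMMONING, "fully_curled"): Stage.HELD,
    (Stage.HELD, "released_beyond_radius"): Stage.RETURNING,
    (Stage.RETURNING, "reached_anchor"): Stage.IDLE,
}


def step(stage, event):
    """Advance to the next stage, or stay put if the event is irrelevant here."""
    return TRANSITIONS.get((stage, event), stage)


# Walk one object through the full select -> summon -> hold -> return cycle.
stage = Stage.IDLE
for event in ["grab_confirmed", "palm_up", "fully_curled",
              "released_beyond_radius", "reached_anchor"]:
    stage = step(stage, event)
    print(event, "->", stage.name)
```

Keeping the transitions in a table makes it easy to see which gesture is "live" at each stage, which mirrors the article’s point that summoning logic switches off once the object is in hand.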

1. Selection

One of the pitfalls that many VR developers fall into is thinking of hands as analogous to controllers, and designing interactions that way. Selecting an object at a distance is a pointing task and well suited to raycasting. However, holding a finger or even a whole hand steady in midair to point accurately at distant objects is quite difficult, especially if a trigger action needs to be introduced.

To increase accuracy, we used the head (headset) position as a reference transform, added an offset to approximate the shoulder position, and then projected a ray from the shoulder through the palm and out toward a target (veteran developers will recognize this as the experimental approach first tried with the UI Input Module). This produces a much more stable projective raycast.
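A minimal sketch of the shoulder-to-palm ray follows, assuming a y-up coordinate system in metres. The offset values (`drop`, `lateral`) and function names are hypothetical and would need tuning. A plausible reason this is steadier than pointing with a finger is that the ray’s direction is defined by two points roughly an arm’s length apart, so small wobbles of the hand change its angle less.

```python
import math


def _add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _scale(v, s):
    return tuple(x * s for x in v)


def _normalize(v):
    length = math.sqrt(sum(x * x for x in v))
    return _scale(v, 1.0 / length)


def estimate_shoulder(head_pos, head_right, right_hand=True,
                      drop=0.15, lateral=0.20):
    """Approximate a shoulder from the head pose (y-up, metres; offsets are guesses).

    head_right should be a horizontal unit vector (the head's yaw only), so that
    nodding or tilting the head does not move the estimated shoulder.
    """
    side = lateral if right_hand else -lateral
    return _add(_add(head_pos, _scale(head_right, side)), (0.0, -drop, 0.0))


def projective_ray(head_pos, head_right, palm_pos, right_hand=True):
    """Ray from the estimated shoulder through the palm: (origin, unit direction)."""
    shoulder = estimate_shoulder(head_pos, head_right, right_hand)
    return shoulder, _normalize(_sub(palm_pos, shoulder))


# Palm held straight out in front of the right shoulder: the ray points along +z.
origin, direction = projective_ray((0.0, 1.6, 0.0), (1.0, 0.0, 0.0), (0.2, 1.45, 0.5))
print(origin, direction)
```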

In addition to this stabilization, the distant objects were given larger proxy colliders, making them bigger targets that are easier to hit. The team added logic so that if the targeting raycast hits a distant object’s proxy collider, the line renderer bends to end at that object’s center point. The result is that the line snaps between zones around each target object, which again makes the objects much easier to select accurately.
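The snapping behaviour can be sketched with enlarged spheres standing in for the proxy colliders. Spheres, the `proxy_radius` value, and the `snap_line` helper are assumptions for illustration; the article does not say what collider shapes were used.

```python
import math


def ray_sphere_distance(origin, direction, center, radius):
    """Distance along a unit-length ray to a sphere's surface, or None on a miss."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = sum(d * x for d, x in zip(direction, oc))
    c = sum(x * x for x in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # near hit first, far hit if origin is inside
        if t >= 0:
            return t
    return None


def snap_line(origin, direction, targets, proxy_radius=0.5, max_length=10.0):
    """Pick the nearest target whose enlarged proxy sphere the ray hits.

    Returns (target_name, line_end). On a hit, the line end snaps to the target's
    center rather than the raw hit point; on a miss it extends max_length.
    """
    best = None
    for name, center in targets.items():
        t = ray_sphere_distance(origin, direction, center, proxy_radius)
        if t is not None and (best is None or t < best[0]):
            best = (t, name, center)
    if best is None:
        return None, tuple(o + d * max_length for o, d in zip(origin, direction))
    return best[1], best[2]


shelf = {"mug": (0.3, 0.0, 5.0), "book": (3.0, 0.0, 5.0)}
print(snap_line((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), shelf))  # ('mug', (0.3, 0.0, 5.0))
```

The ray passes 0.3 m from the mug’s center, which would miss a small collider but falls inside the enlarged proxy, so the line end jumps to the mug’s center.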

After deciding how selection would work, the next step was to determine when ‘selection mode’ should be active, since once the object was brought within reach, users would want to switch out of selection mode and return to regular direct manipulation.

Since shooting a ray out of one’s hand to target something out of reach is quite an abstract interaction, the team thought about related physical metaphors or biases that could anchor this gesture. When a child wants something out of their immediate vicinity, their natural instinct is to reach out for it, extending their open hands with outstretched fingers.

This action was used as a basis for activating the selection mode: When the hand is outstretched beyond a certain distance from the head, and the fingers are extended, we begin raycasting for potential selection targets.
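The activation rule translates almost directly into a predicate. In this sketch the threshold values and the per-finger `finger_extension` scale (0 to 1) are hypothetical; a real tracker reports finger state in its own format.

```python
import math


def selection_mode_active(head_pos, palm_pos, finger_extension,
                          reach_threshold=0.45, extension_threshold=0.8):
    """True when the hand is held out past reach_threshold with fingers extended.

    finger_extension holds one value per finger in [0, 1], where 1 means fully
    straight (a hypothetical normalisation); thresholds are in metres and
    would need tuning.
    """
    outstretched = math.dist(head_pos, palm_pos) > reach_threshold
    fingers_open = all(e >= extension_threshold for e in finger_extension)
    return outstretched and fingers_open


head = (0.0, 1.6, 0.0)
print(selection_mode_active(head, (0.0, 1.4, 0.6), [1.0] * 5))  # True: reaching out
print(selection_mode_active(head, (0.0, 1.4, 0.2), [1.0] * 5))  # False: hand too close
```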

To complete the selection interaction, a confirmation action was needed: something to mark the hovered object as the one we want to select. Curling the fingers into a grab pose while hovering over an object therefore selects it. As the fingers curl, the hovered object and the highlight circle around it scale down slightly, mimicking a squeeze. Once the fingers are fully curled, the object pops back to its original scale and the highlight circle changes color to signal a confirmed selection.
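The squeeze feedback can be sketched as a mapping from finger curl to visual state. The linear shrink, the `min_scale` value, and the string colour labels are assumptions; the article only says the object scales down "slightly" and the highlight changes colour on confirmation.

```python
def squeeze_feedback(curl, hovered, min_scale=0.85):
    """Map grab curl in [0, 1] to (object scale, highlight state, selected?)."""
    curl = max(0.0, min(1.0, curl))
    if not hovered:
        return 1.0, "none", False
    if curl >= 1.0:
        # Fully curled: pop back to the original scale and confirm the selection.
        return 1.0, "confirmed", True
    # Partially curled: shrink linearly toward min_scale, mimicking a squeeze.
    return 1.0 - (1.0 - min_scale) * curl, "hover", False


for curl in (0.0, 0.5, 0.9, 1.0):
    print(curl, squeeze_feedback(curl, hovered=True))
```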

2. Summoning

To summon the selected object into direct manipulation range, we drew on real-world gestures. A common way to bring something closer begins with a flat, upward-facing palm, followed by a quick curl of the fingers.

At the end of the selection action, the arm is extended, the palm faces away toward the distant object, and the fingers are curled into a grasp pose. Our summon heuristic first checks that the palm is facing upward, within a tolerance. Once it is, we check the curl of the fingers and use how far they are curled to drive the object’s animation along a path toward the hand. When the fingers are fully curled, the object has animated all the way into the hand and becomes grasped.
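Here is a sketch of the two-part summon heuristic, under several assumptions: `palm_normal` is a unit vector pointing out of the palm, "facing up" means within a hypothetical 40° of world up, and the path is a straight line (the article only says "a path"). Once the palm-up check passes, the summon stays armed and finger curl alone drives the position.

```python
import math

UP = (0.0, 1.0, 0.0)


def palm_facing_up(palm_normal, max_angle_deg=40.0):
    """True if the (unit) palm normal is within max_angle_deg of world up."""
    cos_angle = sum(a * b for a, b in zip(palm_normal, UP))
    return cos_angle >= math.cos(math.radians(max_angle_deg))


def lerp(a, b, t):
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def update_summon(palm_normal, curl, start, hand, armed):
    """One frame of summoning. Returns (armed, object_position, grasped)."""
    armed = armed or palm_facing_up(palm_normal)
    if not armed:
        return False, start, False
    t = max(0.0, min(1.0, curl))  # finger curl drives progress along the path
    return True, lerp(start, hand, t), t >= 1.0


start, hand = (0.0, 0.0, 4.0), (0.0, 0.0, 0.0)
print(update_summon((0.0, 0.0, 1.0), 1.0, start, hand, armed=False))  # palm not up yet
print(update_summon((0.0, 1.0, 0.0), 0.5, start, hand, armed=False))  # halfway there
```

If the fingers are still curled when the palm turns up, `curl` is already 1.0 and the object reaches the hand on the first armed frame, which is consistent with the wrist-flick behaviour described next.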

During testing, we found that after selecting an object (arm extended, palm facing the distant object, fingers curled into a grasp pose), many users simply flicked their wrists and turned their closed hands toward themselves, as if yanking the object closer. Given our summoning heuristics (palm facing up, then degree of finger curl driving the animation), this motion summoned the object all the way into the user’s hand immediately.

This single-motion select-and-summon was more efficient than two discrete motions, though the discrete motions offered more control. Since our heuristics were flexible enough to allow both approaches, we left them unchanged and let users choose how they wanted to interact.

3. Holding and Interacting

Once the object arrives in the hand, all of the extra summoning-specific logic deactivates. The object can be passed from hand to hand, placed in the world, and interacted with. As long as it remains within arm’s reach of the user, it cannot be selected for summoning.

4. Returning

You’re done with this thing. Now what? If the object is grabbed and held out at arm’s length (beyond a set radius from the head position), a line renderer appears showing the path the object will take back to its start position. If the object is released while this path is visible, it automatically animates back to its anchor position.
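The return behaviour reduces to a distance check plus an animation back to the anchor. In this sketch the `return_radius`, duration, frame step, and smoothstep easing are all hypothetical choices; the article does not specify how the return animation is timed.

```python
import math


def return_path_visible(head_pos, held_pos, return_radius=0.5):
    """True while a held object is farther than return_radius from the head."""
    return math.dist(head_pos, held_pos) > return_radius


def return_animation(release_pos, anchor_pos, duration=0.6, dt=1 / 30):
    """Yield per-frame positions easing from the release point to the anchor."""
    steps = max(1, round(duration / dt))
    for i in range(1, steps + 1):
        t = i / steps
        eased = t * t * (3 - 2 * t)  # smoothstep: slow start, slow finish
        yield tuple(r * (1 - eased) + a * eased
                    for r, a in zip(release_pos, anchor_pos))


head, held, anchor = (0.0, 1.6, 0.0), (0.0, 1.4, 0.7), (2.0, 1.0, 3.0)
if return_path_visible(head, held):  # released while the path is showing
    frames = list(return_animation(held, anchor))
    print(len(frames), frames[-1])
```

Writing the interpolation as `r * (1 - eased) + a * eased` means the final frame lands exactly on the anchor position.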

Overall, this execution felt accurate and low-effort. It easily enables the simplest version of summoning: selecting, summoning, and returning a single static object from an anchor position. However, it doesn’t feel very physical, since it relies heavily on gestures and objects animate along predetermined paths between two defined positions.

For this reason, it may be best suited to summoning non-physical objects such as UI, or to applications where the user is seated with limited physical mobility and accurate point-to-point summoning is preferred.