Researchers at Stanford and Samsung Electronics have developed a display capable of packing in more than 10,000 pixels per inch (ppi), a technology intended for the VR/AR headsets and contact lenses of the future.

Over the years, research and design firms like JDI and INT have been racing to pave the way for ever higher pixel densities for VR/AR displays, astounding convention-goers with prototypes boasting pixel densities in the low thousands. The main idea is to reduce the perception of the dreaded “Screen Door Effect”, which feels like viewing an image in VR through a fine grid.

Last week, however, researchers at Stanford University and Samsung’s South Korea-based R&D wing, the Samsung Advanced Institute of Technology (SAIT), said they had developed an organic light-emitting diode (OLED) display capable of delivering more than 10,000 ppi.

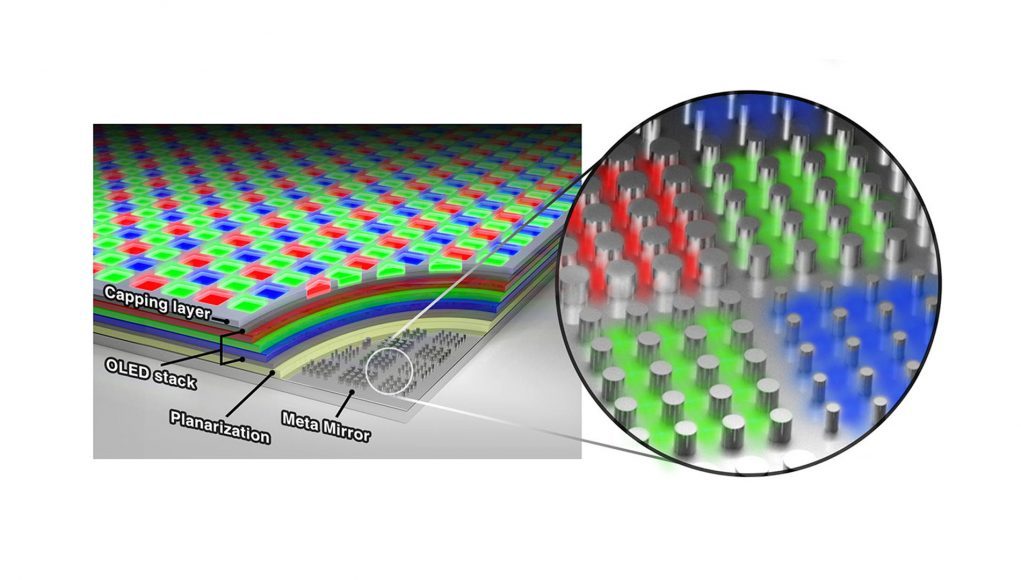

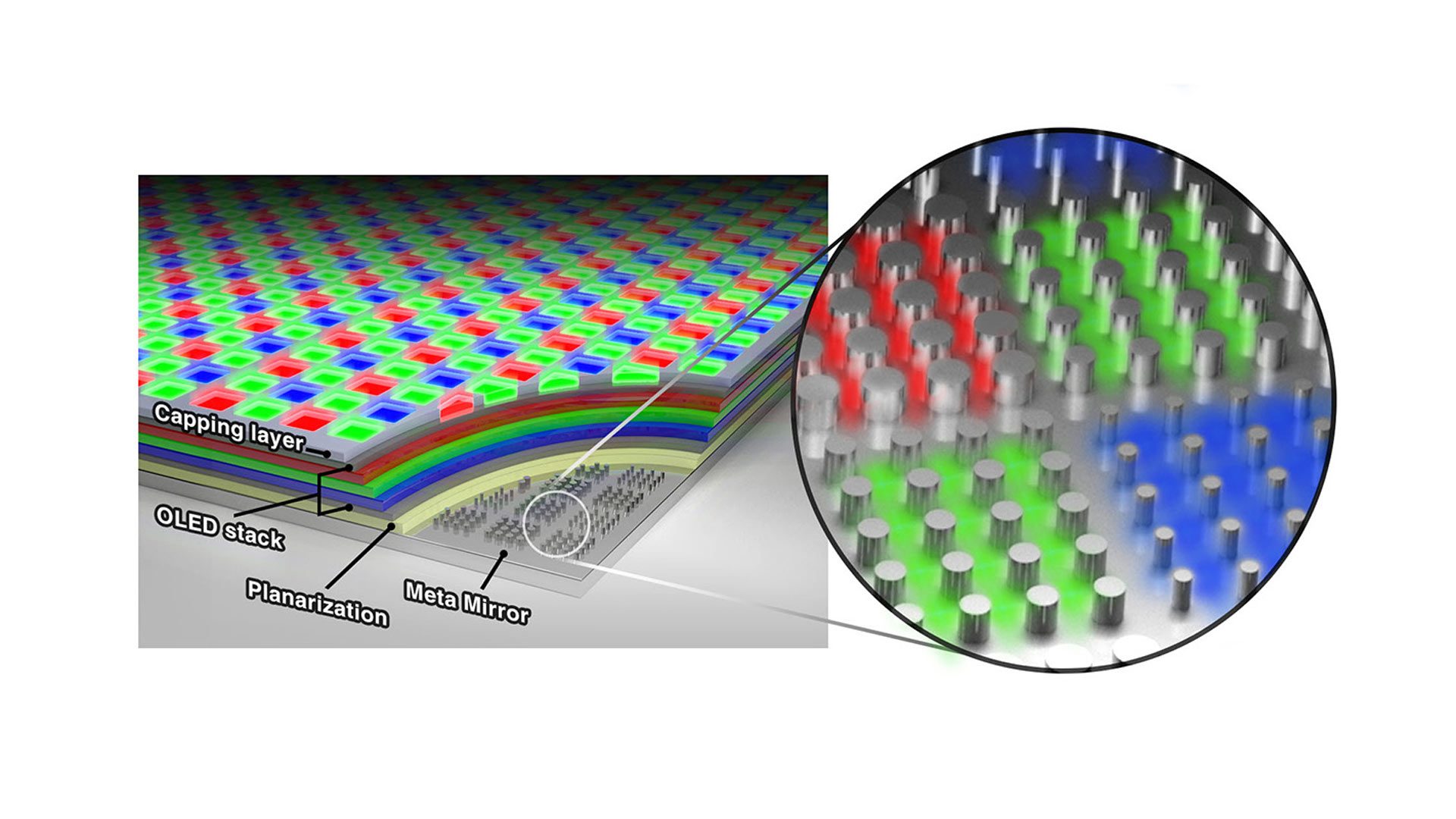

In the paper (via Stanford News), the researchers outline an RGB OLED design that is “completely reenvisioned through the introduction of nanopatterned metasurface mirrors,” taking cues from previous research done to develop an ultra-thin solar panel.

By integrating a base layer of reflective metal with nanoscale corrugations, called an optical metasurface, into the OLED, the team was able to produce miniature proof-of-concept pixels with “a higher color purity and a twofold increase in luminescence efficiency,” qualities that make the design well suited to head-worn displays.

Furthermore, the team estimates that their design could even be used to create displays upwards of 20,000 pixels per inch, although they note that there’s a trade-off in brightness when a single pixel goes below one micrometer in size.
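To put those densities in perspective, here is a rough back-of-the-envelope sketch of the center-to-center pixel spacing (pitch) they imply. It assumes that ppi is a linear count of full pixels along one inch and that pixels are evenly spaced; the function name `pixel_pitch_um` is made up for illustration and does not come from the paper.

```python
# Rough sketch: convert a linear pixel density (ppi) into pixel pitch.
# Assumption: "ppi" counts evenly spaced full pixels along one inch.

MICROMETERS_PER_INCH = 25_400.0  # 1 inch = 25.4 mm = 25,400 micrometers


def pixel_pitch_um(ppi: float) -> float:
    """Return the center-to-center pixel spacing in micrometers."""
    if ppi <= 0:
        raise ValueError("ppi must be positive")
    return MICROMETERS_PER_INCH / ppi


if __name__ == "__main__":
    # Densities mentioned in the article: low thousands, 10,000 and 20,000 ppi.
    for ppi in (1_000, 10_000, 20_000):
        print(f"{ppi:>6} ppi -> {pixel_pitch_um(ppi):.2f} micrometers per pixel")
```

Under those assumptions, 10,000 ppi works out to a pitch of about 2.54 micrometers and 20,000 ppi to about 1.27 micrometers, which is close to the one-micrometer scale at which the researchers note a trade-off in brightness.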

Stanford materials scientist and senior author of the paper Mark Brongersma says the next steps will include integrating the tech into a full-size display, which will fall on the shoulders of Samsung to realize.

It’s doubtful we’ll see any such ultra-high resolution displays in VR/AR headsets in the near term—even with the world’s leading display manufacturer on the job. Samsung is excellent at producing displays thanks to its wide reach (and economies of scale), but there’s still no immediate need to tool mass manufacturing lines for consumer products.

That said, the next generation of VR/AR devices will need a host of other complementary technologies to make good use of such an ultra-high resolution display, including reliable eye-tracking for foveated rendering as well as greater compute power to render ever more complex and photorealistic scenes. Those things are certainly coming, although they aren’t here yet.