With a new technique, Adobe wants to turn normal monoscopic 360-degree video into a more immersive 3D experience, complete with a level of depth information that promises to give the user the ability to shift their physical vantage point in a way currently only possible with special volumetric cameras—and all with your off-the-shelf 360 camera rig.

Announced by Adobe’s head of research Gavin Miller at the National Association of Broadcasters Show (NAB) in Las Vegas this week and originally reported as a Variety exclusive, the new software technique aims to bring six degrees of freedom (6-DoF) to your bog-standard monoscopic 360-degree video, meaning you’ll not only be able to look left, right, up and down, but also move your head naturally from side to side and forward and backward, just as if you were actually there.

According to a group of Adobe researchers, positional tracking is added to the video via a novel warping algorithm that synthesizes new views from the monoscopic footage, and does so on the fly while critically maintaining 120 fps. As a consequence, the technique can also be used to stabilize the video, making it a useful tool for anyone looking to smooth out the jerky hand-held captures that are generally unattractive to VR users. All of this comes with one major drawback though: in order to recover 3D geometry from the monoscopic 360 video, the camera has to be moving.
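Why the camera has to move comes down to parallax: depth can only be triangulated when the same point is seen from two different positions. The Python snippet below is a minimal illustration of that general principle, not Adobe's algorithm; the `triangulate` helper and the example coordinates are made up for this sketch.

```python
import numpy as np

def triangulate(c1, b1, c2, b2):
    """Locate a 3D point from two camera centers (c1, c2) and the unit
    viewing directions (b1, b2) toward it. Returns None when the two
    rays carry no parallax, i.e. the camera has not moved."""
    # Solve c1 + s*b1 = c2 + t*b2 for the ray lengths s and t.
    A = np.stack([b1, -b2], axis=1)          # 3x2 system matrix
    if np.linalg.matrix_rank(A) < 2:         # parallel rays: depth is ambiguous
        return None
    (s, t), *_ = np.linalg.lstsq(A, c2 - c1, rcond=None)
    # Midpoint of the closest points on the two rays.
    return 0.5 * ((c1 + s * b1) + (c2 + t * b2))

def bearing(center, point):
    """Unit direction from a camera center to a scene point."""
    d = point - center
    return d / np.linalg.norm(d)

point = np.array([1.0, 2.0, 5.0])            # hypothetical scene point
cam_a = np.zeros(3)
cam_b = np.array([0.2, 0.0, 0.0])            # camera moved 20 cm sideways

moving = triangulate(cam_a, bearing(cam_a, point), cam_b, bearing(cam_b, point))
static = triangulate(cam_a, bearing(cam_a, point), cam_a, bearing(cam_a, point))
```

With the 20 cm shift the point is recovered; with a stationary camera both rays coincide and the function returns `None`, because every depth along the ray explains the observation equally well.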

Here’s a quick run-down of what’s in the special sauce.

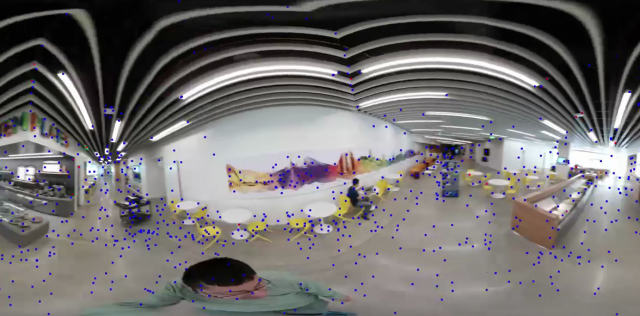

Adobe’s researchers report in their paper that they first employ what’s called a structure-from-motion (SfM) algorithm to compute the camera motion and create a basic 3D reconstruction. After inferring 3D geometry from captured points, they map every frame of the video onto the six planes of a cube map, and then run a standard computer-vision tracker algorithm on each of the six image planes. Some of the inevitable artifacts of mapping each frame to a cube map are handled by using a field-of-view (FOV) greater than 45 degrees to generate overlapping regions. The video heading this article shows the algorithms in action.
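To make the cube-map step concrete, here is a minimal Python sketch, not Adobe's code. It assumes an equirectangular input frame and reads the "45 degrees" above as the half-angle of each face (a standard cube face spans 90 degrees in total); the function names, the axis convention and the nearest-neighbor sampling are illustrative choices.

```python
import numpy as np

def cube_face_rays(face, size, half_fov_deg=45.0):
    """Unit viewing directions for one cube face as a (size, size, 3) array.
    half_fov_deg=45 yields an exact cube face; larger values make the
    face spill over into its neighbors, creating overlapping regions."""
    extent = np.tan(np.radians(half_fov_deg))
    # Pixel centers spread over [-extent, extent] on the face plane.
    coords = ((np.arange(size) + 0.5) / size * 2.0 - 1.0) * extent
    u, v = np.meshgrid(coords, coords)
    one = np.ones_like(u)
    # Assumed convention: x right, y up, z forward.
    faces = {
        "+x": (one, -v, -u), "-x": (-one, -v, u),
        "+y": (u, one, v),   "-y": (u, -one, -v),
        "+z": (u, -v, one),  "-z": (-u, -v, -one),
    }
    d = np.stack(faces[face], axis=-1)
    return d / np.linalg.norm(d, axis=-1, keepdims=True)

def sample_equirect(frame, rays):
    """Nearest-neighbor lookup of an equirectangular frame (H, W, C)
    along the given ray directions."""
    h, w = frame.shape[:2]
    lon = np.arctan2(rays[..., 0], rays[..., 2])          # -pi .. pi
    lat = np.arcsin(np.clip(rays[..., 1], -1.0, 1.0))     # -pi/2 .. pi/2
    x = ((lon / (2 * np.pi) + 0.5) * w).astype(int) % w
    y = np.clip(((0.5 - lat / np.pi) * h).astype(int), 0, h - 1)
    return frame[y, x]

frame = np.random.rand(256, 512, 3)                       # stand-in 360 frame
front = sample_equirect(frame, cube_face_rays("+z", 64, half_fov_deg=50.0))
```

Running a feature tracker on six such slightly oversized faces means a point near a face edge is still visible in the neighboring face, which is the overlap the researchers describe.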

The technique isn’t a 3D panacea for your monoscopic woes just yet. Besides only working with a moving camera, the quality of the 3D reconstruction depends on how far the synthetically created view is from the original one. Going too far, too fast can risk a wonky end result. Problems also arise when natural phenomena like large textureless regions, occlusions, and illumination changes come into play, which can create severe noise in the reconstructed point cloud and ‘holes’ in the 3D effect. In the fixed-viewpoint demonstration, you’ll also see some warping artifacts around non-static objects as the algorithm tries to blend synthetic frames with original frames.

Other techniques to achieve 6-DoF VR video usually require light-field cameras like HypeVR’s crazy 6K/60 FPS LiDAR rig or Lytro’s giant Immerge camera. While these will undoubtedly produce a higher quality 3D effect, they’re also custom-built and ungodly expensive. Even though it might be a while until we see the technique come to an Adobe product, the thought of being able to produce what you might call ‘true’ 3D VR video from a consumer-grade 360 camera is exciting to say the least.