AMD’s Radeon RX Vega 64 is finally out after a long wait, promising stiff competition for what’s become the new standard in high-end VR: NVIDIA’s GTX 1080, built on the 16nm Pascal architecture.

Created as a replacement for the Radeon Fury X and Radeon Fury, the RX Vega 64 is built on a 14nm FinFET process and packs 64 compute units and 4096 stream processors, with a base clock of 1247 MHz and a boost clock of 1546 MHz (air-cooled version). Critically, AMD’s new card doubles the Fury’s 4GB of memory to 8GB of second-generation High Bandwidth Memory (HBM2).

We previously stacked up the GTX 1080 against the GTX 980 Ti in a head-to-head VR benchmark, which is worth a look if you’re not familiar with NVIDIA’s consumer-grade graphics card. The GTX 1080 has become the high-end go-to for VR systems for good reason: it can chew through nearly anything current games throw at it on high settings at a reliable 90 fps.

While we’ve thoroughly tested the GTX 1080, we haven’t yet had a chance to test the RX Vega 64 ourselves. Our friends over at Eurogamer, however, say AMD’s newest GPU is competitive enough with NVIDIA’s but, critically, “offers no knockout blow – in the here and now, at least.”

Similarly, a review by TechAdvisor contends that the RX Vega 64 performs well out of the box for VR and 4K gaming, coming within a handful of frames per second of the GTX 1080 depending on the game and quality settings, but that it has greater power and cooling requirements than the GTX 1080.

Available in both liquid-cooled and air-cooled versions, the Vega 64 gulps down power, with typical board power rated at 345W for the liquid-cooled card and 295W for the air-cooled card. That’s roughly 1.9x and 1.6x the GTX 1080’s 180W rating, respectively.

Announced at a starting MSRP of $500 for the air-cooled card, the RX Vega 64 was meant to undercut the GTX 1080, which launched in May 2016 at a $599 MSRP. In practice, though, online retailers are currently selling the cards at a heavy markup, at $650 to $700. You can probably thank cryptocurrency miners for that.

Given the high introductory price, it remains to be seen whether the GPU can eventually justify its price tag once developers get a chance to optimize their software for the card’s new features. Only time will tell, though, as developers have had more than a year to work out the best ways to squeeze every last drop of performance out of the GTX 1080.

What about VR?

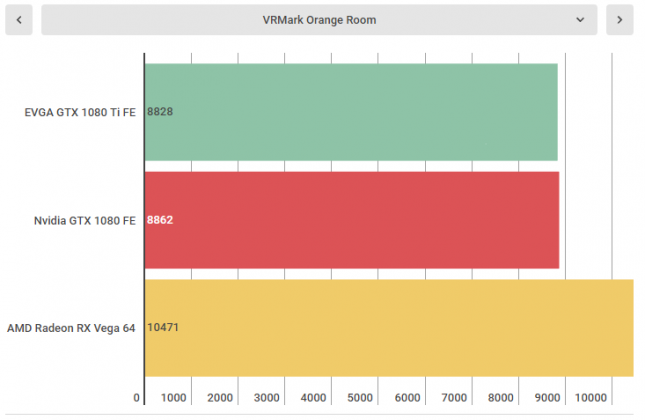

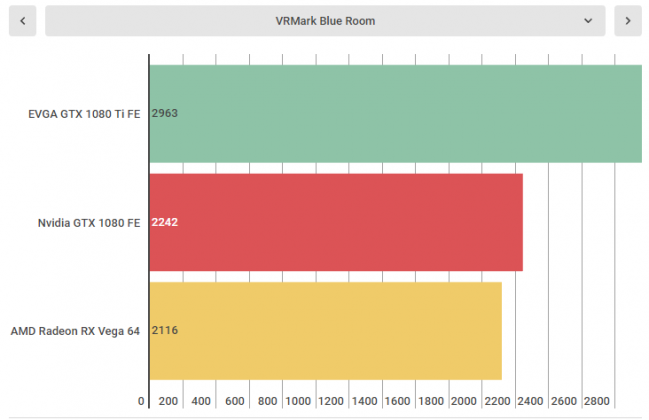

VRMark, Futuremark’s VR benchmarking software, offers several tests of GPU performance, including the Orange Room and Blue Room benchmarks.

According to Futuremark, the Orange Room benchmark tests whether your system meets the strenuous requirements of the HTC Vive and Oculus Rift. The Blue Room, however, is a more demanding test designed to benchmark the latest graphics cards, rendering at 5K resolution with volumetric lighting effects.

Here’s how the RX Vega 64 compares with both the GTX 1080 and the GTX 1080 Ti in these tests.

The RX Vega 64 also supports the Vulkan API and DirectX 12 (feature level 12_1), and is a ‘Radeon VR Ready Premium’ GPU, a class of hardware that AMD contends “meet[s] or exceed[s] the Oculus Rift or HTC Vive recommended specifications for graphics cards.”

At this point, you probably shouldn’t pull out the big bucks for a card that hasn’t yet proven itself against the GTX 1080. If you’re looking to boost your rendering power without overpaying, we’d wait until the cards sell at (or below) the originally advertised MSRP before making any hasty purchases. Alternatively, AMD offers a number of cards in its ‘Radeon VR Ready Premium’ class that are much easier on the wallet, including the RX 480 (4GB), which launched at $199. If you’re determined to build an AMD-only system, check out the list of the company’s GPUs here.