A video posted by a Vision Pro developer appears to show the level of hand-tracking and occlusion performance that Apple’s new headset is currently capable of.

Apple Vision Pro, expected to launch in the next few months, will use hand-tracking as its primary input method. While system-level interactions combine hand-tracking with eye-tracking for a ‘look and tap’ modality, developers can also build apps that let users interact with content directly using their hands.

Hand-tracking on Vision Pro can be broken into two distinct capabilities: ‘hand-tracking’ and ‘hand-occlusion’.

Hand-tracking produces an estimated 3D model of the hand, including its joints and the positions of the fingertips. This model is used to determine when objects are touched, grasped, and interacted with.
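To make the idea concrete, here is a minimal sketch of how an app might turn a tracked hand model into interactions. It is not Vision Pro’s API: the `Point` type, `SphereTarget`, `is_touching`, `is_pinching`, and the 5 mm and 15 mm thresholds are all hypothetical, and the sketch assumes fingertip positions already arrive as 3D coordinates in meters in a shared world space.

```python
import math
from dataclasses import dataclass

# Hypothetical: a 3D position in meters, in the same space as the virtual content.
Point = tuple[float, float, float]


@dataclass
class SphereTarget:
    """A hypothetical virtual object approximated as a sphere."""
    center: Point
    radius: float  # meters


def is_touching(fingertip: Point, target: SphereTarget, tolerance: float = 0.005) -> bool:
    """Treat the target as touched when the tracked fingertip lies within
    radius + tolerance of the sphere's center (tolerance is an assumed 5 mm)."""
    return math.dist(fingertip, target.center) <= target.radius + tolerance


def is_pinching(thumb_tip: Point, index_tip: Point, threshold: float = 0.015) -> bool:
    """Treat a pinch as closed when thumb and index fingertips are closer
    than the threshold (an assumed 15 mm)."""
    return math.dist(thumb_tip, index_tip) < threshold


button = SphereTarget(center=(0.0, 1.2, -0.5), radius=0.03)
print(is_touching((0.0, 1.2, -0.47), button))              # fingertip on the surface
print(is_pinching((0.0, 0.0, 0.0), (0.01, 0.0, 0.0)))      # tips 1 cm apart
```

Because every check depends on where the model *thinks* the fingertip is, any lag in the tracked position translates directly into late or missed touches.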

Hand-occlusion deals with how the system overlays your real hands onto virtual content. Rather than drawing a 3D model of a virtual hand into the scene, Vision Pro cuts out the camera image of your real hands and shows them in the scene instead. This adds another layer of realism to the virtual content because you see your own, unique hands.
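Conceptually, this kind of cut-out amounts to per-pixel compositing with a hand mask. The sketch below is a simplified illustration under that assumption, not Apple’s implementation: `composite` and its inputs (equal-sized RGB frames and a boolean mask marking hand pixels) are hypothetical.

```python
# Hypothetical RGB pixel type.
Pixel = tuple[int, int, int]


def composite(passthrough: list[list[Pixel]],
              virtual: list[list[Pixel]],
              hand_mask: list[list[bool]]) -> list[list[Pixel]]:
    """Where the (hypothetical) hand mask is set, show the real camera pixel;
    everywhere else, show the rendered virtual pixel."""
    if not (len(passthrough) == len(virtual) == len(hand_mask)):
        raise ValueError("frames and mask must have the same height")
    out = []
    for cam_row, virt_row, mask_row in zip(passthrough, virtual, hand_mask):
        if not (len(cam_row) == len(virt_row) == len(mask_row)):
            raise ValueError("frames and mask must have the same width")
        out.append([cam if is_hand else virt
                    for cam, virt, is_hand in zip(cam_row, virt_row, mask_row)])
    return out


skin, blue = (255, 200, 180), (0, 0, 255)
print(composite([[skin, skin]], [[blue, blue]], [[True, False]]))
```

In this framing, the quality of the effect depends on how closely the mask follows the hand’s true outline from frame to frame.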

A developer building for Vision Pro using Unity posted a video that gives a clear look at both hand-tracking and occlusion.

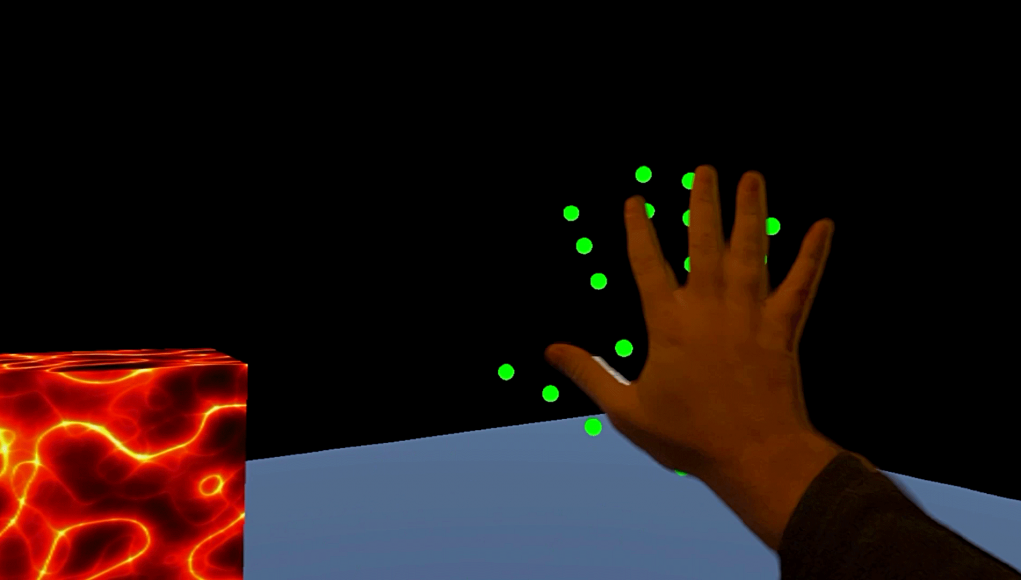

In the video above, the real hands show the occlusion system in action, while the green dots show the estimated 3D position of the hand.

We can see that hand-occlusion is very good but not perfect; when the hand is surrounded entirely by virtual content and moving quickly, some clipping is visible around its edges.

Meanwhile, the hand-tracking position lags noticeably behind the occluded hands, by somewhere around 100–200ms. We can’t determine the true latency of Vision Pro’s hand-tracking, however, because the only reference we have is the occluded hands, which themselves have some latency relative to the real hands.
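The reasoning can be written out as simple arithmetic. The sketch below assumes the two delays add: the green dots trail the occluded hands by the observed lag, and the occluded hands trail the real hands by an unknown occlusion latency. The 30 fps frame rate and the 3- and 6-frame offsets are hypothetical values chosen only to show how a 100–200ms range could arise from counting video frames; the article does not say how its estimate was made.

```python
def frames_to_ms(frame_offset: int, fps: float) -> float:
    """Convert a lag counted in video frames into milliseconds."""
    return frame_offset * 1000.0 / fps


def tracking_latency_ms(observed_lag_ms: float, occlusion_latency_ms: float) -> float:
    """Hand-tracking latency relative to the real hand, assuming the delays add:
    real hand -> occluded hand (occlusion latency) -> tracked dots (observed lag)."""
    return occlusion_latency_ms + observed_lag_ms


# Hypothetical: at 30 fps, a 3-frame offset is 100 ms and a 6-frame offset is 200 ms.
print(frames_to_ms(3, 30), frames_to_ms(6, 30))

# With the occlusion latency unknown (but never negative), the observed lag
# is only a lower bound on the true hand-tracking latency.
print(tracking_latency_ms(150.0, 0.0))
```

Since the occlusion latency cannot be negative, the observed 100–200ms is best read as a floor rather than the full latency.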

For context, the video was shared in a conversation among Unity developers, in which one developer said their early experiments with Vision Pro showed very high hand-tracking latency, apparently compared with the latest Quest hand-tracking capabilities. Other Unity developers said they were seeing similar latency on their own Vision Pro devices.

However, the video in question shows a hand-tracking integration inside a Unity app, which means its hand-tracking performance and latency may be affected by Unity-specific factors that aren’t present when using Apple’s first-party Vision Pro development tools. And because the headset hasn’t launched yet and these tools are still in development, performance may improve by the time the headset actually reaches stores.