How does it work?

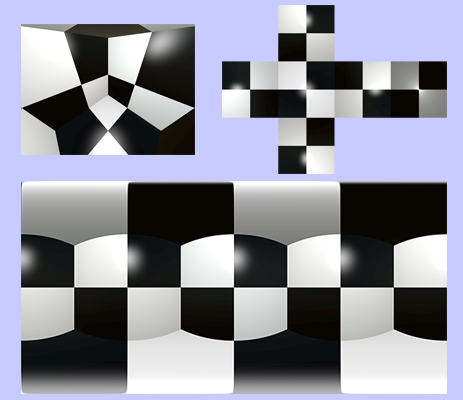

The basic concept of 360 capture in Unity is similar to 360 capture in the real world: we point the (virtual) camera in many different directions to capture different parts of the scene, and then combine the results into a single 360-degree image. Monoscopic capture is particularly simple: we point the camera in six directions (up, down, left, right, forward, and backward), with each view forming one face of a cube (the cubemap format). The camera stays in exactly the same position for all six. The image below shows a checkered box being captured by a camera placed inside the box.

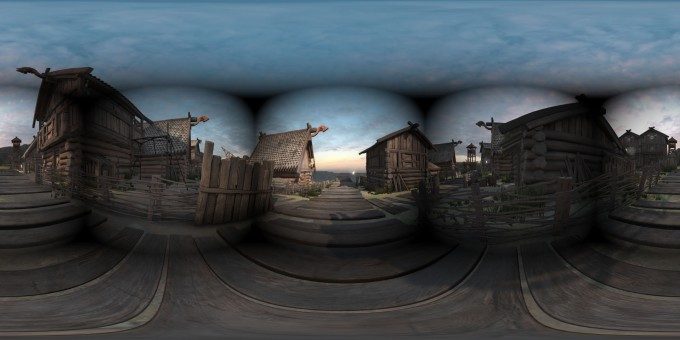

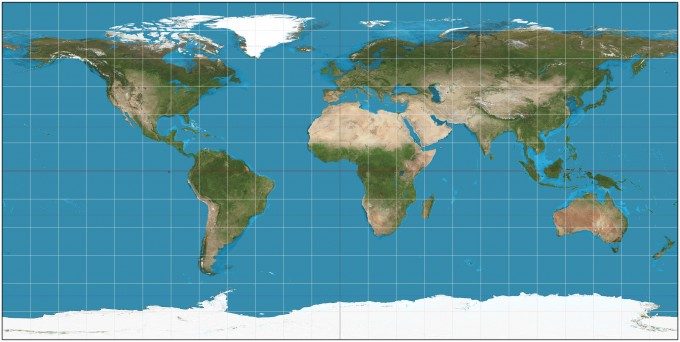
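The six-direction capture described above can be sketched as follows. This is an illustrative Python model, not Unity's implementation. It assumes a Unity-style frame (+x right, +y up, +z forward), and the names `CAPTURE_DIRECTIONS` and `camera_for_direction` are hypothetical. Each camera uses a square 90-degree field of view, so neighbouring frusta meet exactly, and the camera that sees a given direction is the one whose forward axis dominates that direction.

```python
import math

# Hypothetical table of the six capture directions for a monoscopic cubemap,
# assuming a Unity-style frame: +x right, +y up, +z forward.
CAPTURE_DIRECTIONS = {
    "right":    (1.0, 0.0, 0.0),
    "left":     (-1.0, 0.0, 0.0),
    "up":       (0.0, 1.0, 0.0),
    "down":     (0.0, -1.0, 0.0),
    "forward":  (0.0, 0.0, 1.0),
    "backward": (0.0, 0.0, -1.0),
}

# Each camera renders a square image with a 90-degree field of view.
FIELD_OF_VIEW_DEGREES = 90.0

# tan(FOV / 2) == 1: the image plane at distance 1 spans [-1, 1] on both axes,
# so the edges of adjacent faces coincide and the six views tile the sphere.
HALF_EXTENT = math.tan(math.radians(FIELD_OF_VIEW_DEGREES / 2.0))


def camera_for_direction(direction):
    """Return the name of the capture camera whose frustum contains `direction`.

    A direction lies inside a square 90-degree frustum exactly when its component
    along that camera's forward axis is the largest in absolute value.
    """
    x, y, z = direction
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:
        return "right" if x > 0 else "left"
    if ay >= az:
        return "up" if y > 0 else "down"
    return "forward" if z > 0 else "backward"
```

For example, `camera_for_direction((0.1, 0.9, 0.2))` selects the "up" camera, because the y component dominates.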

Representing a complete 360-degree environment in a single image faces the same challenge as representing the surface of the Earth on a flat map. Most tools today use the equirectangular projection, in which vertical (y) position gives latitude and horizontal (x) position gives longitude. Mapmakers generally avoid this projection because it exaggerates the regions near the poles, making them appear much larger than they actually are. It is convenient for 360-degree panoramas, however, because an efficient viewer is easy to build: simply project the image onto the inside of a large sphere and place the viewer at its center.
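A minimal sketch of the equirectangular mapping, in Python. The conventions are assumptions chosen for illustration: longitude runs from -π to π left to right, latitude runs from +π/2 at the top row to -π/2 at the bottom row, and directions use a +x right, +y up, +z forward frame. All function names are hypothetical. The `horizontal_stretch` helper shows where the polar distortion comes from: every row has the same number of pixels, but the circle of latitude it represents shrinks by cos(latitude).

```python
import math


def pixel_to_lon_lat(px, py, width, height):
    """Map the centre of pixel (px, py) to (longitude, latitude) in radians.

    Assumed convention: x spans longitude -pi..pi left to right,
    y spans latitude +pi/2..-pi/2 top to bottom.
    """
    u = (px + 0.5) / width
    v = (py + 0.5) / height
    longitude = (u - 0.5) * 2.0 * math.pi
    latitude = (0.5 - v) * math.pi
    return longitude, latitude


def lon_lat_to_direction(longitude, latitude):
    """Unit view direction in an assumed +x right, +y up, +z forward frame."""
    return (
        math.cos(latitude) * math.sin(longitude),
        math.sin(latitude),
        math.cos(latitude) * math.cos(longitude),
    )


def horizontal_stretch(latitude):
    """Factor by which a pixel row is wider than the circle of latitude it covers.

    Each row has the same pixel count, but the real circle shrinks by
    cos(latitude), so rows near the poles are stretched the most.
    """
    return 1.0 / math.cos(latitude)


lon, lat = pixel_to_lon_lat(2, 0, 4, 2)   # both equal pi/4
direction = lon_lat_to_direction(lon, lat)
```

At 60 degrees of latitude, `horizontal_stretch` returns about 2, so features there appear twice as wide as they should; the factor grows without bound toward the poles.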

Note that 360 captures must be very high-resolution, because at any given moment the viewer sees only a small portion of the frame (typically 10-20%), effectively "zooming in" on that region. To roughly match the angular resolution of an Oculus Rift or HTC Vive, 360 captures must be at least 4K pixels wide.
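The 4K figure can be checked with a back-of-the-envelope calculation. The headset numbers below are approximate assumptions (roughly 1080 horizontal pixels per eye spread over roughly 100 degrees of horizontal field of view), not official specifications. Since an equirectangular image spreads its full width over 360 degrees of longitude, matching the headset's pixels per degree means scaling that density up to 360 degrees.

```python
def panorama_width_for_headset(pixels_per_eye, horizontal_fov_degrees):
    """Equirectangular width whose pixels-per-degree matches a headset's.

    The panorama's width covers 360 degrees of longitude, so the headset's
    per-degree pixel density is multiplied by 360.
    """
    pixels_per_degree = pixels_per_eye / horizontal_fov_degrees
    return pixels_per_degree * 360.0


# Approximate, assumed figures: ~1080 horizontal pixels per eye over ~100 degrees.
width = panorama_width_for_headset(1080, 100)
print(round(width))  # 3888
```

About 10.8 pixels per degree times 360 degrees gives roughly 3,900 pixels, which is why a panorama narrower than about 4K looks noticeably softer than the headset's native display.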

Converting the captured camera views into the final equirectangular image requires a re-projection operation, which copies pixels from the source images to their correct locations in the target image. This can be done very quickly on the GPU using a compute shader; once it is done, the final image is transferred to the CPU to be saved.
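The re-projection described above can be sketched on the CPU. This Python version is a minimal illustration, not Unity's compute shader: it assumes nearest-neighbour sampling, a +x right, +y up, +z forward frame, and an illustrative face orientation table (`FACES`), none of which come from the original text. All names are hypothetical. For every output pixel it computes the viewing direction, picks the cube face that sees it, performs a perspective divide onto that face's image plane, and copies the matching pixel. A compute shader would run the loop body once per output pixel, in parallel.

```python
import math

# Assumed frame: +x right, +y up, +z forward. Each face is given as the capture
# camera's (forward, right, up) vectors; this orientation table is illustrative.
FACES = {
    "right":    ((1, 0, 0), (0, 0, -1), (0, 1, 0)),
    "left":     ((-1, 0, 0), (0, 0, 1), (0, 1, 0)),
    "up":       ((0, 1, 0), (1, 0, 0), (0, 0, -1)),
    "down":     ((0, -1, 0), (1, 0, 0), (0, 0, 1)),
    "forward":  ((0, 0, 1), (1, 0, 0), (0, 1, 0)),
    "backward": ((0, 0, -1), (-1, 0, 0), (0, 1, 0)),
}


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def direction_for_pixel(px, py, width, height):
    """Unit direction for the centre of an equirectangular output pixel."""
    longitude = ((px + 0.5) / width - 0.5) * 2.0 * math.pi
    latitude = (0.5 - (py + 0.5) / height) * math.pi
    return (math.cos(latitude) * math.sin(longitude),
            math.sin(latitude),
            math.cos(latitude) * math.cos(longitude))


def lookup_cube(direction, face_size):
    """Return (face name, column, row) of the cube pixel seen along `direction`."""
    # The face whose forward axis is most aligned with the direction sees it.
    name = max(FACES, key=lambda n: dot(direction, FACES[n][0]))
    forward, right, up = FACES[name]
    depth = dot(direction, forward)
    # Perspective divide onto the image plane at distance 1: s and t lie in [-1, 1].
    s = dot(direction, right) / depth
    t = dot(direction, up) / depth
    col = max(0, min(int((s + 1.0) * 0.5 * face_size), face_size - 1))
    row = max(0, min(int((1.0 - t) * 0.5 * face_size), face_size - 1))
    return name, col, row


def cubemap_to_equirect(faces, face_size, width, height):
    """Nearest-neighbour re-projection; `faces` maps face name -> 2D list of pixels."""
    output = []
    for py in range(height):
        row_pixels = []
        for px in range(width):
            direction = direction_for_pixel(px, py, width, height)
            name, col, row = lookup_cube(direction, face_size)
            row_pixels.append(faces[name][row][col])
        output.append(row_pixels)
    return output


# Usage: fill each face with its own name and re-project into an 8x4 panorama.
N = 4
faces = {name: [[name] * N for _ in range(N)] for name in FACES}
pano = cubemap_to_equirect(faces, N, 8, 4)
```

In the resulting panorama the top row comes entirely from the "up" face and the bottom row from the "down" face, while the middle rows cycle through the four side faces. A production version would typically sample bilinearly rather than pick the nearest pixel.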

Note that in some cases this simple approach can produce visual artifacts. For example, a vignette filter that dims the edges of the view will dim the edges of each of the six views, making the edges of the cube visually evident, as shown below. To reproduce such an effect in the panorama, it would be necessary to disable it during capture and then reapply it while viewing the panorama. Screen-space effects that cause issues like this are most common in sky and water rendering. Other screen-space effects, such as bloom or antialiasing, affect the entire view uniformly and so produce no noticeable artifacts.
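The vignette problem can be modelled numerically. The sketch below assumes a hypothetical radial falloff (`vignette`) and a uniformly white scene, then measures brightness along the horizon after each of the four side faces has been vignetted separately. Brightness peaks at each face centre (0, 90, 180 and 270 degrees) and dips at every face boundary (45, 135, ... degrees), producing the periodic dark seams along the cube edges. The falloff curve and all names are illustrative assumptions, not Unity's post-processing code.

```python
import math


def vignette(s, t, strength=0.5):
    """Hypothetical radial vignette: 1.0 at the image centre, dimmer toward the edges.

    s and t are image-plane coordinates in [-1, 1]; the falloff is illustrative.
    """
    r2 = (s * s + t * t) / 2.0  # 0 at the centre, 1 at the corners
    return 1.0 - strength * r2


def captured_brightness_on_horizon(longitude_degrees):
    """Brightness of a uniform white scene on the horizon after per-face vignetting.

    The horizon is split across the four side faces, each covering 90 degrees,
    with face centres at 0, 90, 180 and 270 degrees of longitude.
    """
    # Angle from the centre of whichever side face sees this longitude.
    offset = (longitude_degrees + 45.0) % 90.0 - 45.0
    s = math.tan(math.radians(offset))  # horizontal image-plane coordinate
    return vignette(s, 0.0)


profile = [round(captured_brightness_on_horizon(lon), 3) for lon in range(0, 360, 15)]
```

Because the dips line up with the cube edges rather than with the viewer's current gaze, the dimming looks like fixed dark bands in the panorama instead of a vignette.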