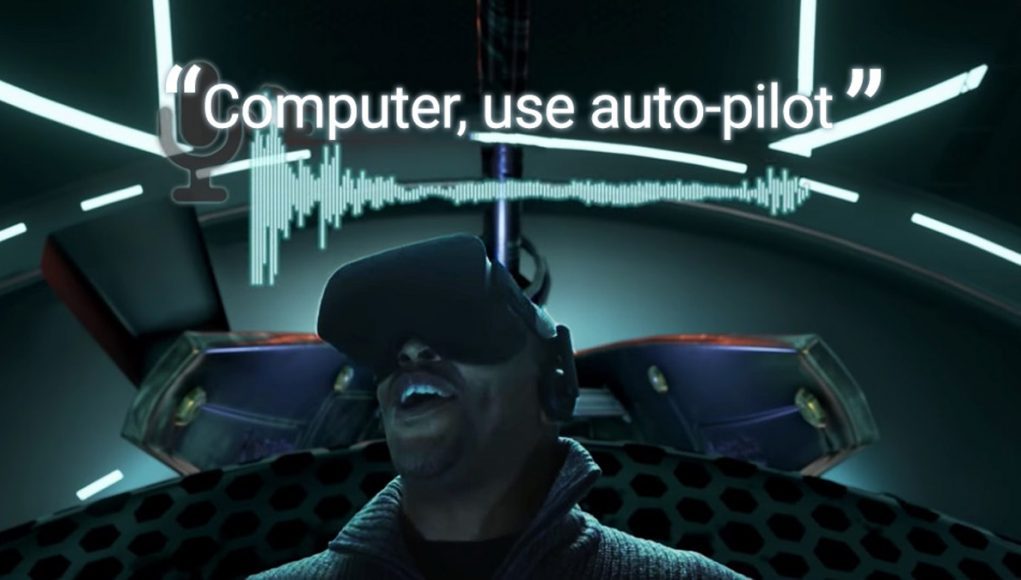

Developer Human Interact announced this past week that it is collaborating with Microsoft’s Cognitive Services to power the conversational interface behind its choose-your-own-adventure interactive narrative title, Starship Commander. The studio is using Microsoft’s Custom Recognition Intelligent Service (CRIS) as the speech recognition engine, and Microsoft’s Language Understanding Intelligent Service (LUIS) to translate spoken phrases into a set of discrete intents that are fed back into Unreal Engine to drive the interactive narrative.
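
To make that last step concrete, here is a minimal sketch of how a recognized intent might be turned into a story action. It is an illustration under stated assumptions, not Human Interact’s implementation: the intent names, the action names, the `IntentResult` type, and the 0.6 confidence threshold are all hypothetical, and no real CRIS, LUIS, or Unreal Engine calls are made.

```python
from dataclasses import dataclass

# Hypothetical intent and action names; none of these come from Starship Commander.
INTENT_TO_ACTION = {
    "EngageWarp": "play_warp_sequence",
    "AskStatus": "play_status_report",
}

# Action used when the player's phrase is not understood well enough.
FALLBACK_ACTION = "play_clarification_line"


@dataclass
class IntentResult:
    """Simplified stand-in for what a language understanding service returns."""
    intent: str   # name of the best-matching intent
    score: float  # confidence between 0.0 and 1.0


def choose_action(result: IntentResult, threshold: float = 0.6) -> str:
    """Map a recognized intent to a narrative action, falling back when unsure."""
    if result.score < threshold:
        return FALLBACK_ACTION
    # Unknown intents also fall back rather than raising an error.
    return INTENT_TO_ACTION.get(result.intent, FALLBACK_ACTION)


if __name__ == "__main__":
    print(choose_action(IntentResult("EngageWarp", 0.92)))
    print(choose_action(IntentResult("EngageWarp", 0.31)))
```

The fallback branch is the design choice worth noticing: when the spoken phrase is unclear, a character can ask the player to repeat themselves instead of the story branching on a bad guess.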

LISTEN TO THE VOICES OF VR PODCAST

I caught up with Human Interact founder and creative director Alexander Mejia six months ago to talk about the early stages of creating an interactive narrative using a cloud-based, machine-learning-powered natural language processing engine. We talk about the mechanics of using conversational interfaces as a gameplay element, accounting for differences in gender, race, and regional dialect, the funneling structure of accumulating a series of smaller decisions into a larger fork in the story, the dynamics between multiple morally ambiguous characters, and the role of a character artist who sets the bounds of an AI character and defines its personality, core belief system, and complex set of motivations.

Here’s a Trailer for Starship Commander:

Here’s Human Interact’s Developer Story as Told by Microsoft Research:

Support Voices of VR

- Subscribe on iTunes

- Donate to the Voices of VR Podcast Patreon

Music: Fatality & Summer Trip