CREAL, a company building light-field display technology for AR and VR headsets, has revealed a new through-the-lens video showing off the performance of its latest VR headset prototype. The new video clearly demonstrates the ability to focus at arbitrary distances, as well as the high resolution of the foveated region. The company also says the rendering tech that powers the headset is “approaching the equivalent of [contemporary] VR headsets.”

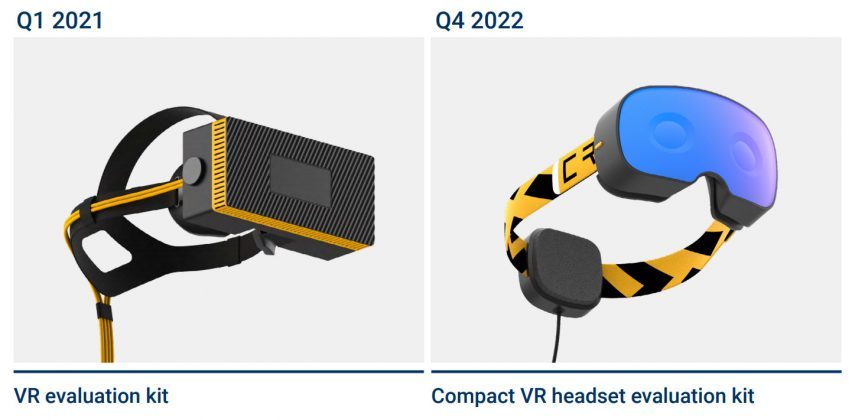

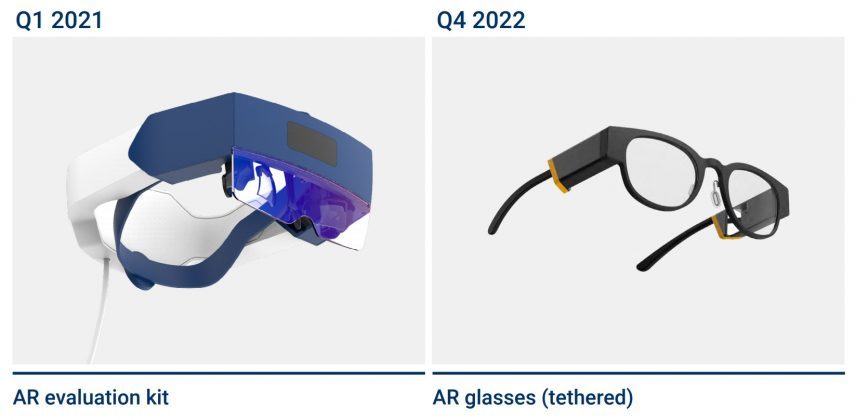

Earlier this year Creal offered the first glimpse of AR and VR headset prototypes that are based on the company’s light-field displays.

Unlike the displays used in VR and AR headsets today, light-field displays generate an image that accurately represents how we see light from the real world. Specifically, light-field displays support both vergence and accommodation, the two focus mechanisms of the human visual system. Most headsets on the market today support only vergence (stereo overlap) but not accommodation (individual eye focus), which means the imagery is technically stuck at a fixed focal depth. With a light-field display you can focus at any depth, just like in the real world.
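To make the vergence/accommodation distinction a bit more concrete, here is a minimal Python sketch of the standard geometry: the vergence angle follows from the interpupillary distance (IPD) and the fixation distance, while accommodation demand is measured in diopters (1 divided by the distance in metres). The 63 mm IPD and the 1.5 m fixed focal distance are assumptions chosen purely for illustration, not figures from Creal or any specific headset. An idealized light-field display would let the eye accommodate to the virtual distance itself, so the mismatch computed here applies only to the fixed-focus case.

```python
import math

# Assumed values for illustration only (not Creal or headset specifications).
IPD_M = 0.063          # typical adult interpupillary distance, in metres
FIXED_FOCUS_M = 1.5    # hypothetical fixed focal distance of a conventional headset


def vergence_deg(distance_m: float, ipd_m: float = IPD_M) -> float:
    """Angle between the two eyes' lines of sight when fixating a point at distance_m."""
    return math.degrees(2 * math.atan(ipd_m / (2 * distance_m)))


def accommodation_diopters(distance_m: float) -> float:
    """Focusing power the eye needs for a point at distance_m, in diopters (1/m)."""
    return 1.0 / distance_m


def mismatch_diopters(virtual_distance_m: float,
                      focal_distance_m: float = FIXED_FOCUS_M) -> float:
    """Gap between the focus demand implied by vergence and the display's fixed focus."""
    return abs(accommodation_diopters(virtual_distance_m)
               - accommodation_diopters(focal_distance_m))


if __name__ == "__main__":
    # Vergence changes with virtual distance, but a fixed-focus display keeps
    # accommodation pinned at FIXED_FOCUS_M, producing a mismatch.
    for d in (0.3, 0.5, 1.5, 5.0):
        print(f"{d:>4} m: vergence {vergence_deg(d):5.2f} deg, "
              f"fixed-focus mismatch {mismatch_diopters(d):.2f} D")
```

Under these assumptions, objects rendered much closer than the fixed focal distance produce the largest mismatch (about 2.67 D at 0.3 m), which is the conflict a light-field display is meant to remove.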

While Creal doesn’t plan to build its own headsets, the company has created prototypes to showcase its technology in the hope that other companies will opt to incorporate it into their headsets.

We’ve seen demonstrations of Creal’s tech before, but the newly published through-the-lens video really highlights the light-field display’s continuous focus and its foveated arrangement.

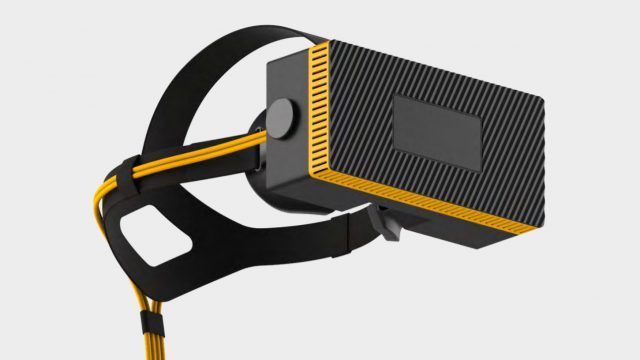

Creal’s prototype VR headset uses a foveated architecture (two overlapping displays per eye): a ‘near retina resolution’ light-field display, which covers the central 30° of the field of view, and a larger, lower-resolution display (1,600 × 1,440, non-light-field), which fills the peripheral field of view out to 100°.

In the through-the-lens video we can clearly see the focus shifting from one part of the scene to another. Creal says the change in focus is happening entirely in the camera that’s capturing the scene. While some approaches to varifocal displays use eye-tracking to continuously adjust the display’s focal depth based on where the user is looking, a light field has the depth of the scene ‘baked in’, which means the camera (just like your eye) is able to focus at any arbitrary depth without any eye-tracking trickery.

In the video we can also see that the central part of the display (the light-field portion) is quite sharp compared to the rest. Creal says this portion of the display is “now approaching retinal resolution,” and is also running at 240 Hz.

And while you might expect that rendering the views needed to power the headset’s displays would be very costly (largely due to the need to generate the light field), the company says its rendering tech is steadily improving and “approaching the equivalent of classical stereo rendering of other VR headsets,” though we’re awaiting more specifics.

While Creal’s current VR headset prototype is very bulky, the company expects it will be able to further shrink its light-field display tech into something more reasonable by the end of 2022. The company is also adapting the tech for AR and believes it can be miniaturized to fit into compact AR glasses.