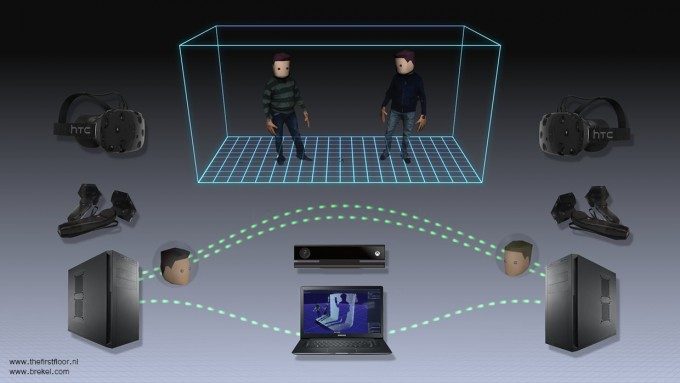

After featuring their new experiment, which used the HTC Vive and Microsoft Kinect to project the physical image of 2 players into virtual worlds remotely, we wanted to know more about how developers Jasper Brekelmans and Jeroen de Mooij had pulled it off. So, in an interview they conducted with each other, the duo share their development tips, woes and joys of working in VR with the HTC Vive and Microsoft’s Kinect camera.

When Jasper Brekelmans and collaborator Jeroen de Mooij got access to a set of HTC Vive gear, they wanted to work towards an application that addressed their belief that “the Virtual Reality revolution needs a social element in order to really take off”. So, they decided to build a multiplayer, cooperative virtual reality game which not only allowed physical space to be shared and mapped into VR, but also projected the players’ bodies into it. The result is a cool-looking shared experience, only possible in VR.

We asked the duo to share their experiences. What follows is an interview conducted between Jasper and Jeroen, which digs into why and how the project came to be and how it was eventually executed.

Jasper Brekelmans (website)

Jasper (@brekelj) started as a Motion Capture professional and 3D character rigger about 15 years ago, working on commercials, TV shows, films and interactive characters. You may also know him as the creator of the Brekel tool set, which offers affordable, simple-to-use markerless motion capture using Kinect sensors.

Jeroen de Mooij (website)

Jeroen (@jeroenduhmooij) started out as a 2D interaction designer, but over the years his focus shifted to creating 3D content: first for animation and now mainly for interactive purposes. He has worked on commercials, interactive installations, large video projection mappings, custom software and tools.

What were your goals when starting the project?

Jasper: The first thing we wanted to do with the two loaner Valve/HTC Vive headsets was have some fun over the holiday break.

We quickly devised a little hackathon plan to see if we could do a multi-user setup. Since we only had a single set of Lighthouses, we opted for two users in a shared physical & virtual space.

We wanted to see if we could devise a virtual scene in which users collaborate on building/manipulating something while being able to see a representation of the other user as well as his/her contribution to the virtual scene. We also wanted to make sure all data would be shared over a network connection so that we could potentially expand on this in the future.

Jeroen: Because we had access to the two HTC systems for only a limited time, the approach was to get as far as we could within that period.

So first: can we hook up 2 HMDs within the range of 2 Lighthouses? Check, that worked. So how about a multi-user environment, and how do we set that up? Basically, we set new goals as we went, but we aimed for a multi-user experience from the start.

Who did what?

Jasper: I focussed on the pointcloud visualization, creating code that would compress & stream live data from my Brekel Pro Pointcloud application and render it in a Unity DirectX11 shader.

It was important to have very low latency, acquire and render pointcloud data at 30 fps (the native frame rate of the Kinect), not slow down Unity’s viewport rendering (which typically runs at 60-90 fps for VR) and not consume more bandwidth than a current internet connection can handle.

Luckily all of that was achieved by using multi-threaded C++ code and direct access to the GPU using DirectX11 shaders. Marald Bes also helped with a Unity script that would allow virtual objects to be grabbed and manipulated.
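To put the bandwidth constraint in numbers, here is a rough back-of-envelope sketch of what an uncompressed stream would cost. It assumes the Kinect v2 depth resolution of 512 × 424 with 16-bit samples at the native 30 fps, plus a hypothetical 3 bytes of RGB colour per depth pixel; it does not describe the format Brekel Pro Pointcloud actually streams.

```python
# Back-of-envelope bandwidth for an UNCOMPRESSED Kinect v2 point cloud stream.
# Assumptions: 512 x 424 depth pixels, 16-bit depth, 30 fps, and (hypothetically)
# 3 bytes of RGB colour sampled once per depth pixel. Illustration only.

DEPTH_W, DEPTH_H = 512, 424
FPS = 30
DEPTH_BYTES = 2   # one 16-bit depth sample per pixel
COLOR_BYTES = 3   # hypothetical: RGB at depth resolution


def raw_mbit_per_s(bytes_per_point: int) -> float:
    """Uncompressed stream rate in megabits per second."""
    bytes_per_frame = DEPTH_W * DEPTH_H * bytes_per_point
    return bytes_per_frame * FPS * 8 / 1e6


depth_only = raw_mbit_per_s(DEPTH_BYTES)
with_color = raw_mbit_per_s(DEPTH_BYTES + COLOR_BYTES)
print(f"depth only:  {depth_only:.1f} Mbit/s")   # depth only:  104.2 Mbit/s
print(f"depth + RGB: {with_color:.1f} Mbit/s")   # depth + RGB: 260.5 Mbit/s
```

Rates in the hundred-megabit range, before any per-frame protocol overhead, illustrate why the data has to be compressed before it can travel over an internet connection.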

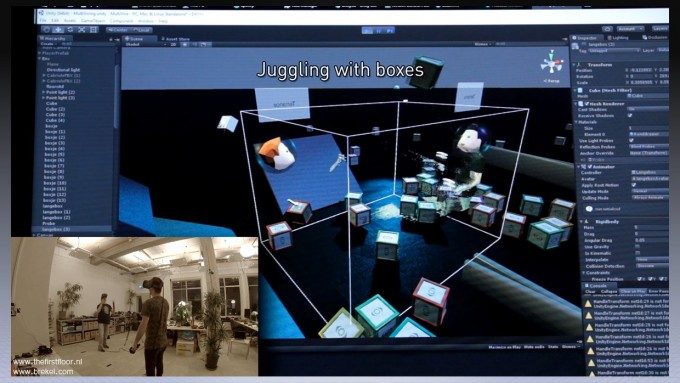
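The multi-threaded C++ code isn’t shown in the interview, but the general idea of keeping a 30 fps capture thread from slowing down a 60–90 fps render loop can be sketched with a single-slot “latest frame” buffer. This is a minimal Python illustration under that assumption; `LatestFrame`, `capture_loop` and `render_loop` are hypothetical names, and the sleeps only stand in for real sensor and frame timing.

```python
import threading
import time
from typing import Optional, Tuple


class LatestFrame:
    """Single-slot mailbox: the producer overwrites, the consumer never waits for new data.

    Hypothetical helper showing how a 30 fps capture thread can feed a faster
    render loop without blocking it; not the actual Brekel C++ code.
    """

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._frame: Optional[bytes] = None
        self._seq = 0  # increments on every publish so readers can spot new data

    def publish(self, frame: bytes) -> None:
        with self._lock:
            self._frame = frame
            self._seq += 1

    def latest(self) -> Tuple[int, Optional[bytes]]:
        with self._lock:
            return self._seq, self._frame


def capture_loop(box: LatestFrame, frames: int, fps: float) -> None:
    # Stand-in for the sensor thread: publishes `frames` fake frames at `fps`.
    for i in range(frames):
        box.publish(f"frame {i}".encode())
        time.sleep(1.0 / fps)


def render_loop(box: LatestFrame, ticks: int, fps: float) -> int:
    # Stand-in for the render thread: polls once per tick and counts new frames.
    last_seq, uploads = 0, 0
    for _ in range(ticks):
        seq, frame = box.latest()
        if frame is not None and seq != last_seq:
            uploads += 1  # a real renderer would upload the new points to the GPU here
            last_seq = seq
        time.sleep(1.0 / fps)
    return uploads


box = LatestFrame()
producer = threading.Thread(target=capture_loop, args=(box, 15, 30.0))
producer.start()
uploads = render_loop(box, 45, 90.0)  # roughly 0.5 s at 90 fps
producer.join()
print(f"45 render ticks, {uploads} new point cloud frames picked up")
```

Because the render loop only reads the most recent frame, a slow capture thread costs it at most a brief lock acquisition; frames the renderer never gets to are simply overwritten rather than queued.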

Jeroen: I have been working with Unity3D for a while and recently worked on a small test involving workstation-to-GearVR networking, so I knew a few basic things about the Unet system.

Basically, I did all the networking stuff with Unet in Unity3D and set up the scene to play in. Marald helped by scripting the ability to grab a box with the Steam controller.

And I used the avatar heads from another R&D project I am working on with Erwin. Jasper did what he’s best at: all the Kinect-related stuff (streaming server, networking, Unity3D client).

What problems did you encounter during implementation?

Jasper: Problems? None of course, everything went perfectly fine the first time we tried and we never cursed once….. yeah right. ;)

Implementing networking code is always hard and frustrating work. Initially I set out to do it all with raw sockets for performance reasons, but after two attempts it turned out to be very difficult to make that robust. In the end I settled on building on top of an existing library, and actually got some unexpected performance benefits from that.
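One of the chores that makes raw sockets hard to get robust is that TCP delivers a byte stream, not messages: a single `recv()` can return half a frame, or parts of two. The sketch below shows one common fix, length-prefixed framing, in Python. The 4-byte header format and the helper names are assumptions for illustration; the interview doesn’t name the library Jasper eventually built on.

```python
import socket
import struct

# Hypothetical wire format: 4-byte big-endian payload length, then the payload.
HEADER = struct.Struct("!I")


def send_frame(sock: socket.socket, payload: bytes) -> None:
    # sendall() keeps writing until every byte has been handed to the OS.
    sock.sendall(HEADER.pack(len(payload)) + payload)


def recv_exact(sock: socket.socket, n: int) -> bytes:
    # recv() may return fewer bytes than requested, so loop until we have n.
    chunks = []
    remaining = n
    while remaining:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("peer closed the connection mid-frame")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def recv_frame(sock: socket.socket) -> bytes:
    (length,) = HEADER.unpack(recv_exact(sock, HEADER.size))
    return recv_exact(sock, length)


# Usage: a connected local socket pair stands in for a client/server link.
a, b = socket.socketpair()
for msg in (b"pointcloud-0", b"", b"x" * 4096):
    send_frame(a, msg)
received = [recv_frame(b) for _ in range(3)]
a.close()
b.close()
print([len(m) for m in received])  # [12, 0, 4096]
```

Framing is only one piece; reconnection, back-pressure and partial writes on non-blocking sockets are further reasons an existing networking library can pay off.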

Luckily, for the Unity DirectX11 shaders I could partly rely on some of my existing code, so that went smoothly. I did extend the pointcloud rendering with a mesh rendering style that was procedurally generated on the GPU.

During implementation we realized the point cloud style was actually nicer to look at, since the meshes occluded too much of the view at times. Aligning the pointcloud with both Vive calibrations also proved tricky, but after some attempts we found some practical tricks that aligned things well enough for our tests.
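The mesh style was generated procedurally in a shader, which the interview doesn’t show. As a CPU-side illustration of one common way to build such a mesh (an assumption, not Jasper’s shader), each 2 × 2 block of neighbouring depth pixels becomes two triangles, and triangles that touch missing depth or span a large depth jump are discarded so separate surfaces aren’t stitched together. `grid_triangles` and `max_jump` are hypothetical names.

```python
from typing import List, Tuple

Triangle = Tuple[int, int, int]


def grid_triangles(depth: List[List[float]], max_jump: float) -> List[Triangle]:
    """Triangulate a depth image into two triangles per 2 x 2 pixel block.

    Vertices are indexed row-major (index = row * width + col). Triangles that
    touch invalid depth (0) or whose depth range exceeds `max_jump` metres are
    dropped. `max_jump` is a hypothetical tuning parameter.
    """
    h, w = len(depth), len(depth[0])
    tris: List[Triangle] = []

    def keep(*idx: int) -> bool:
        zs = [depth[i // w][i % w] for i in idx]
        return all(z > 0 for z in zs) and max(zs) - min(zs) <= max_jump

    for r in range(h - 1):
        for c in range(w - 1):
            i00 = r * w + c   # top-left
            i01 = i00 + 1     # top-right
            i10 = i00 + w     # bottom-left
            i11 = i10 + 1     # bottom-right
            for tri in ((i00, i10, i01), (i01, i10, i11)):
                if keep(*tri):
                    tris.append(tri)
    return tris


# A person at 1.0 m in front of a wall at 2.5 m (depth in metres).
depth = [
    [1.0, 1.0, 2.5],
    [1.0, 1.0, 2.5],
]
print(grid_triangles(depth, max_jump=0.1))  # [(0, 3, 1), (1, 3, 4)]
```

Without the jump test, the two right-hand triangles would stretch from 1 m to 2.5 m, which illustrates how a mesh can cover more of the view than the same points rendered individually.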

Jeroen: Time, time is one huge factor when on a deadline, but on the other hand, it makes you really focused on what to achieve within that time.

Having experience building single-person experiences in Unity3D, but not much with networking or multi-user projects, I really needed time to figure out how Unet is set up and meant to be used. That meant a lot of running in circles and starting over with different approaches. Also, the documentation and tutorials are limited because Unet is a recently added feature of Unity3D, and, as always, the things I wanted to try were not part of the ‘basic’ functionality.

Besides that, I noticed that the controllers sometimes went offline even though they still had enough charge.

The SteamVR compositor sometimes got confused. This seemed to happen when we accidentally dragged a window onto the second screen (the HTC Vive).

What did you learn while playing with the tech, and were there things you didn’t expect beforehand?

Jasper: Well first and foremost it was way more fun than we anticipated :)

Also, many things came naturally that I hadn’t even thought of, like transferring a picked-up object from one hand to the other, throwing it to the other user and having them catch it.

Even non-verbal communication, like simply looking over to see what the other user is doing, or gesturing, worked really intuitively.

Also throwing a block at each other’s faces was pretty fun (although we could’ve expected that) and even triggered genuine reactions of avoiding being hit when done unexpectedly.

Jeroen: I learned to stack boxes :)

Working with the DK2 and GearVR I knew that your brain is pretty easy to fool.

The main thing that stuck with me from this experiment is how natural it all felt. The point cloud gave not only a representation of the other person but also some environmental points, so to me it did not feel disconnected from the real world at all. I did not expect that up front, having done the SteamVR demos where I was really immersed in another world. That naturally has its own charm.

To me, the cable was not really an issue; I got used to it really quickly. Still, it would be a big step forward not to have that coroutine running in the back of my head.

And having the opportunity to physically walk around, and especially walk around a 3D object, gives a big sense of freedom in the virtual world. That really is a big difference with any seated experience I have done.

What are your future plans?

Jasper: We definitely want to extend this with a setup where both users are not sharing the same physical space but are in two different offices using an internet connection.

Unfortunately we had to return the loaner Vives to their owners so will probably have to wait until the retail version is out. :(

We are also eager to adapt this to work with a Mixed Reality device like Microsoft’s HoloLens as we believe this type of collaboration with shared 3D objects can have great uses for productivity.

Jeroen: Hopefully expanding this project or starting similar VR / AR / MR experiments.

For this project I would like to add a few things, e.g. actually doing it over the internet and seeing how that feels. Adding positional voice would be important in that case.

Also, I am really curious how it will be with more users in the same space, sharing and collaborating on something. I see options for games, of course, but I think that there are a lot of opportunities for a variety of purposes. Stacking and throwing boxes was fun, as is any physics sandbox, but I would like to expand the functionalities inside such an environment. Maybe give every user his own set of tools to stimulate collaboration even more.

Streaming more point cloud data into the environment is another thing, as is making sure that the Kinect’s angle and range are set up correctly. Or maybe even having a look at the other project Jasper is working on: multi-Kinect point cloud data.

What was the purpose of using a Kinect device in the setup?

Jasper: We solely used it for point clouds and didn’t use any of its skeleton tracking.

I have experimented with rendering 3D avatars in VR (using the Kinect as well as other motion capture equipment).

It’s nice and certainly has its uses, but since the 3D avatar is always an abstract representation of the user, it doesn’t feel very immersive.

Even though a point cloud representation has artefacts, it does contain texture, lighting and details that you know belong to the user (yourself or another person), and we noticed you can pick up more subtle cues from it than from an abstracted representation.

Jeroen: Jasper was already working on a small project with streaming point clouds, so the timing was perfect to incorporate that into this experiment.

It really added the ‘real’ aspect to the whole experiment. The Vive tracks the controllers and the HMD, so that’s hands and head, and not really a representation of a whole person. By aligning the Kinect’s coordinate system with SteamVR’s, a whole person was standing in VR. And it looked really funny to have a point cloud body with a 3D avatar head.
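Aligning the Kinect’s coordinate system with SteamVR’s amounts to applying a rigid transform (a rotation plus a translation) to every point. The duo don’t say exactly how they calibrated it, so this Python sketch simply assumes a hand-measured yaw angle and offset; `make_yaw_transform`, `to_steamvr` and the numbers in the usage example are hypothetical, and a real setup may also need pitch/roll for a tilted sensor and axis-convention flips.

```python
import math
from typing import Callable, Iterable, List, Tuple

Vec3 = Tuple[float, float, float]


def make_yaw_transform(yaw_deg: float, offset: Vec3) -> Callable[[Vec3], Vec3]:
    """Return a function mapping Kinect-space points (metres) into SteamVR space.

    Hypothetical calibration: rotate about the vertical (y) axis by `yaw_deg`,
    then translate by `offset`. Pitch, roll and handedness fixes are omitted.
    """
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    ox, oy, oz = offset

    def apply(p: Vec3) -> Vec3:
        x, y, z = p
        # Standard rotation about y: [[c, 0, s], [0, 1, 0], [-s, 0, c]], then translate.
        return (c * x + s * z + ox, y + oy, -s * x + c * z + oz)

    return apply


def to_steamvr(points: Iterable[Vec3], transform: Callable[[Vec3], Vec3]) -> List[Vec3]:
    return [transform(p) for p in points]


# Usage: a Kinect turned 180 degrees to face the play area, mounted 0.8 m up
# and 2 m along z from the SteamVR origin (made-up numbers).
kinect_to_vr = make_yaw_transform(180.0, (0.0, 0.8, 2.0))
pts = to_steamvr([(0.0, 0.0, 1.0), (0.5, 0.2, 1.0)], kinect_to_vr)
print([tuple(round(v, 3) for v in p) for p in pts])  # [(0.0, 0.8, 1.0), (-0.5, 1.0, 1.0)]
```

Once such a transform is known, the same function can be applied to every incoming frame, so the point cloud body lines up with the tracked HMD and controllers.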

Why have we used this sandbox? (And what would be a better sandbox for next time?)

Jeroen: Isn’t it fun to throw things around? We thought so too. In addition, the physics made it easy to combine objects and therefore supported working together.

The surrounding objects are just there to throw boxes at, so we could see if the network sync worked as hoped.

Next time I want to move a bit more towards a specific collaborative purpose.

For example placing buildings on a map and running a simulation, or finding a solution to a certain three dimensional puzzle.

Can you share more details about the setup used?

Jeroen: We used 2 workstations, an i5 and an i7, both with NVIDIA GTX 970 cards. Only 2 Lighthouses were used for the tracking. We made the project in Unity3D 5.3.

Jasper: Oh and Kinect v2 sensor :)

How about the development time: how much time went into getting it running?

Jasper: We sat together in the office for about 3 days, and I spent about a week more coding on the networking classes.

Jeroen: I had one complete VR system already set up for another project, so that was functional when we started. The first thing I tested when I got another Vive system was to try out if they both worked in the same space with only 2 lighthouses.

When that was working fine, we started chatting about what we could build. I mainly focused on setting up the Unet side, which took me a few days of trial and error. Jasper had done preparations for the streaming pointcloud part, and we hacked it all together in 2 days, plus another day for fine-tuning and filming.

If we had had more time with the systems, we would probably have added more functionality. But this setup was done in roughly a week.

Is it Vive-only, or are there other VR systems this is applicable to, e.g. mobile (GearVR)?

Jasper: Well, the setup does need hand tracking and the ability to pick things up, as well as positional tracking of the headset, to be somewhat useful.

So, for example, I guess Oculus Touch and HoloLens’ hand gestures will work for that too. Both should also be able to run Unity with the DirectX11 shaders for the pointclouds. Since the GearVR lacks these things, it is a less interesting platform for this particular setup.

Jeroen: It is, in fact, “just” a multiplayer environment where ‘players’ can do things. So whether the input is the Vive + Lighthouses, a Kinect skeleton or an organic motion tracking solution that controls the avatar, it’s all possible.

Even viewing it on a GearVR on Wi-Fi is a possibility if there is external positional tracking. But, we are aiming for the HoloLens :)

Our thanks to Jasper Brekelmans and Jeroen de Mooij for sharing their experiences with us. We will stay in touch and track the duo’s progress to keep you in the loop.