Rich interactions between the user and the virtual world are a key aspect of what makes VR so compelling, but creating and tuning such interactions is a challenging and time-consuming task for developers. Toolsets like UltimateXR can speed up the iteration process for VR interactions, bringing more interactivity and immersion to games and experiences.

Guest Article by Enrique Tromp

Enrique is cofounder and CTO of VRMADA, a tech company providing enterprise VR solutions worldwide. With a strong passion for computer graphics and digital art, his career spans 20 years in simulation, video games, and live interactive experiences. These days he loves taking on challenging VR projects and developing UltimateXR, a free and open-source VR framework. You can follow his latest work on Twitter @entromp.

Three years ago I had the opportunity to discuss the importance of delightful interactions in VR applications in this Road to VR guest article. I showcased some examples developed at VRMADA, where we use VR for enterprise training and simulation. One of the key ideas was that good, natural interactions are essential for trainees to efficiently assimilate the procedures being taught. In VR videogames, great interactions improve the gameplay and can make some of the mechanics really fun and satisfying.

Since around 2016, I have invested a big portion of my life building a framework and toolset that has become the technological foundation of the company. The main goal was to create a scalable system that would help our developers create VR applications for years to come. During these years we had to develop applications with extremely different requirements, interactions, and goals, from serious training to entertainment. These differences were key in making the framework naturally converge into a system prepared to work in very different scenarios.

We recently decided to make the framework and tools available to the public—free and open-source for everyone to use. The result is UltimateXR for Unity. We hope to soon port it to other platforms as well (Unreal, Web…?).

In this article I will discuss some of the features in UltimateXR that have been key to improving our VR interactions while also lowering turnaround times.

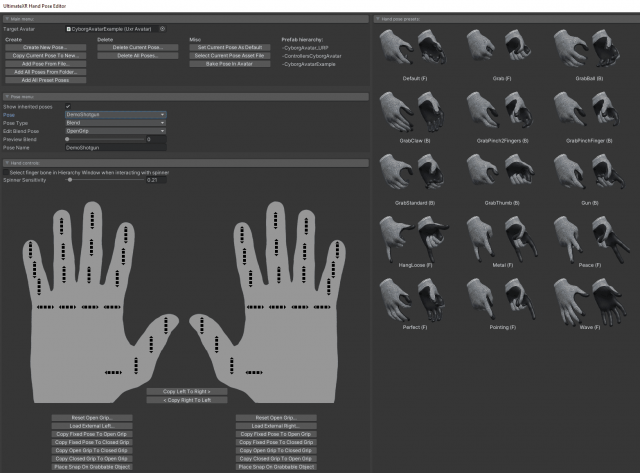

Integrated Hand-pose Editor

Authoring hand-poses is an important process when developing object manipulation and other mechanics that require switching between different poses.

At the beginning we exported hand animations directly from our 3D program of choice (3dsMax, Maya, Blender, etc.), but we soon realized this had become a big bottleneck. We decided to develop a full-fledged hand-pose editor inside Unity that would allow us to adjust hand-poses directly from the world editor.

Besides the standard editing options, some features that I think have been key in lowering turnaround times are:

- Support for custom widgets to quickly rotate finger bones, while still letting the developer use the built-in Unity transform handles. The two can be used interchangeably.

- Support for fixed and blend poses (more on this later).

- Support for hand-pose presets that can be used directly or as a quick starting point for new poses.

- Support for exchanging poses between rigs coming from applications using different coordinate systems.
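Exchanging poses between tools mostly comes down to re-expressing each bone's local position and rotation in the destination's axis convention. The following is a minimal Python sketch of that idea, not UltimateXR's actual code. It assumes the difference between the two conventions can be written as a signed permutation matrix (axes swapped and/or mirrored) and that rotations are unit quaternions stored as (x, y, z, w). The names `convert_pose`, `MIRROR_Z` and `SWAP_YZ` are hypothetical, and real rigs may additionally differ in per-bone local axis orientation, which this sketch does not handle.

```python
import math

# Hypothetical sketch, not UltimateXR's API. Quaternions are (x, y, z, w).
Vec3 = tuple[float, float, float]
Quat = tuple[float, float, float, float]
Mat3 = tuple[Vec3, Vec3, Vec3]

# Example axis maps (each row is a destination axis expressed in source axes).
MIRROR_Z: Mat3 = ((1, 0, 0), (0, 1, 0), (0, 0, -1))  # flips handedness
SWAP_YZ: Mat3 = ((1, 0, 0), (0, 0, 1), (0, 1, 0))    # Z-up <-> Y-up, also flips handedness


def mat_vec(m: Mat3, v: Vec3) -> Vec3:
    """Multiply a 3x3 matrix by a 3D vector."""
    return tuple(sum(m[r][c] * v[c] for c in range(3)) for r in range(3))


def det3(m: Mat3) -> float:
    """Determinant of a 3x3 matrix: +1 keeps handedness, -1 flips it."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def convert_pose(position: Vec3, rotation: Quat, axis_map: Mat3) -> tuple[Vec3, Quat]:
    """Re-express a local position and rotation in another axis convention.

    A rotation matrix R becomes M R M^T. For a quaternion this maps the vector
    part through M and negates it when M is a reflection (det = -1), because a
    mirror reverses the direction of rotation. The w component is unchanged.
    """
    x, y, z, w = rotation
    d = det3(axis_map)
    vx, vy, vz = mat_vec(axis_map, (x, y, z))
    return mat_vec(axis_map, position), (d * vx, d * vy, d * vz, w)


def quat_to_matrix(q: Quat) -> list[list[float]]:
    """Rotation matrix of a unit quaternion, handy for checking conversions."""
    x, y, z, w = q
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ]


# Example: a bone rotated 90 degrees about Z, moved into a Y-up convention.
h = math.sqrt(0.5)
pos, rot = convert_pose((1.0, 2.0, 3.0), (0.0, 0.0, h, h), SWAP_YZ)
# pos is (1.0, 3.0, 2.0); rot is a -90 degree rotation about Y.
```

Working on whole signed permutation matrices keeps one function for every pair of conventions; mirroring twice with the same map returns the original pose, which is a cheap sanity check when round-tripping poses between tools.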

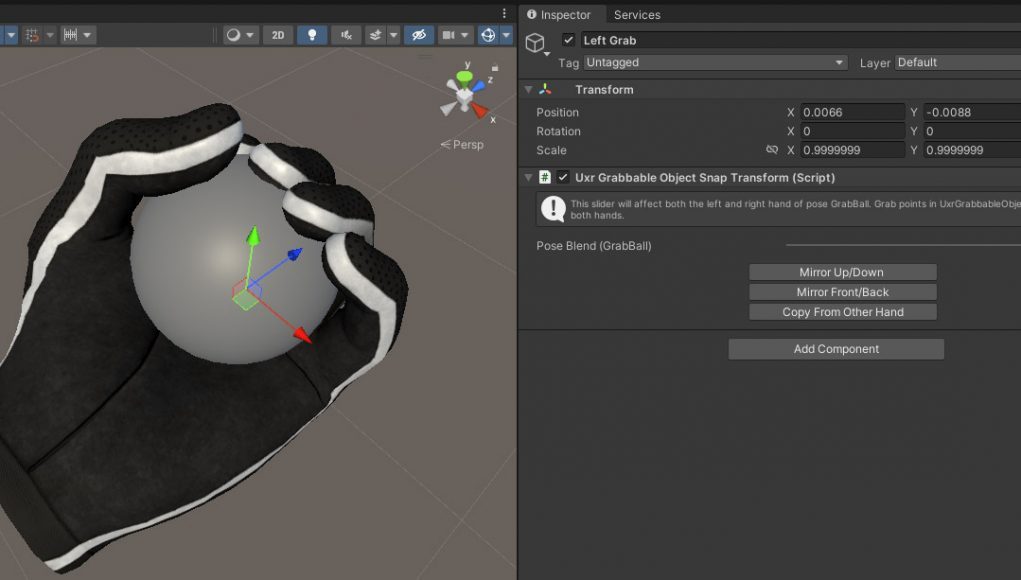

Blend Poses to Author Manipulation Using Common Grab Poses

During development we realized that a lot of objects are grabbed using common poses from a well-known set. The only difference was that the grip was more open or closed depending on the size of the object.

We decided to create a new pose type called the blend pose, which is defined by a start pose and an end pose and can adopt any pose in between. To support different object sizes, the start pose would be a completely open pose, able to grab the biggest possible object, and the end pose would be a completely closed pose, able to grab the smallest possible object. Any object with an intermediate size could use the same pose but with a different blend value controlled by a slider.
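One way to picture a blend pose is as a per-bone interpolation between the open and closed poses. The minimal Python sketch below assumes each pose is stored as a map from bone name to local rotation quaternion (x, y, z, w) and blends them with spherical linear interpolation (slerp). The bone name and the functions `slerp` and `blend_pose` are hypothetical, and UltimateXR's own implementation may store or blend poses differently.

```python
import math

Quat = tuple[float, float, float, float]  # (x, y, z, w), unit length


def slerp(a: Quat, b: Quat, t: float) -> Quat:
    """Spherical linear interpolation between two unit quaternions."""
    dot = sum(p * q for p, q in zip(a, b))
    if dot < 0.0:  # q and -q are the same rotation; take the shorter arc
        b = tuple(-c for c in b)
        dot = -dot
    if dot > 0.9995:  # nearly identical: normalized lerp avoids dividing by ~0
        r = tuple(p + (q - p) * t for p, q in zip(a, b))
        n = math.sqrt(sum(c * c for c in r))
        return tuple(c / n for c in r)
    theta = math.acos(dot)
    s = math.sin(theta)
    wa = math.sin((1.0 - t) * theta) / s
    wb = math.sin(t * theta) / s
    return tuple(wa * p + wb * q for p, q in zip(a, b))


def blend_pose(open_pose: dict[str, Quat], closed_pose: dict[str, Quat],
               blend: float) -> dict[str, Quat]:
    """blend = 0 gives the fully open pose (biggest object),
    blend = 1 the fully closed pose (smallest object)."""
    blend = min(max(blend, 0.0), 1.0)
    return {bone: slerp(open_pose[bone], closed_pose[bone], blend)
            for bone in open_pose}


# Usage: one finger bone, straight when open and bent 90 degrees about X when closed.
h = math.sqrt(0.5)
open_pose = {"index_proximal": (0.0, 0.0, 0.0, 1.0)}
closed_pose = {"index_proximal": (h, 0.0, 0.0, h)}
half = blend_pose(open_pose, closed_pose, 0.5)  # bent 45 degrees about X
```

Slerp advances the rotation at a constant angular rate, so equal slider steps move the fingers evenly; a normalized lerp is a common cheaper alternative when the per-bone angles are small.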

Using the standard cylindrical grab on two different objects

While fixed poses are normally used for hand gestures and ad-hoc grips, blend poses were extremely useful to develop grips that could be re-used by many different objects.

Blend poses have also proven to be useful for objects where the grip can change, such as when pulling the trigger on a gun or pressing a button on an interactive device while holding it.
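For a held object such as a gun, the blend value could be driven by the controller's analog trigger instead of an editor slider. The sketch below is a hypothetical illustration, not UltimateXR code: it assumes the trigger is read every frame as a value in [0, 1], maps it linearly onto a range of blend values, and limits how fast the blend can change so the finger does not snap when the input jumps. `TriggerBlendDriver` and its fields are invented names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TriggerBlendDriver:
    """Hypothetical helper: turns an analog trigger value into a blend-pose value."""
    rest_blend: float       # blend with the finger resting on the trigger
    pressed_blend: float    # blend with the trigger fully pulled
    max_speed: float = 8.0  # maximum change in blend value per second
    current: Optional[float] = None

    def __post_init__(self) -> None:
        if self.current is None:
            self.current = self.rest_blend

    def target(self, trigger: float) -> float:
        """Map trigger input in [0, 1] linearly onto [rest_blend, pressed_blend]."""
        trigger = min(max(trigger, 0.0), 1.0)
        return self.rest_blend + (self.pressed_blend - self.rest_blend) * trigger

    def update(self, trigger: float, dt: float) -> float:
        """Move the current blend toward the target by at most max_speed * dt."""
        goal = self.target(trigger)
        step = self.max_speed * dt
        delta = goal - self.current
        if abs(delta) <= step:
            self.current = goal
        else:
            self.current += step if delta > 0 else -step
        return self.current


# Usage: feed one trigger reading per frame (here, frames at an assumed 90 Hz).
driver = TriggerBlendDriver(rest_blend=0.3, pressed_blend=1.0)
for trigger in (0.0, 0.5, 1.0):
    blend = driver.update(trigger, dt=1 / 90)
```

The resulting value would then feed the same open-to-closed blend used for sizing grips, so one authored pose pair covers both holding the object and squeezing its trigger.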

Customizable Hands

Creating a different set of hands for every application we developed would have been a very time-consuming task. In two entertainment applications we used a custom rig, but for most of our training applications we have always stuck with the same core assets. This has allowed us to reuse poses such as hand gestures or the common blend poses discussed in the previous section.

We still wanted to be able to make hands look different in each application. For that, we created a rig that can switch between bare skin and gloves, with both options customizable. This lets us reuse poses while still giving newer projects a different-looking set of hands.

The visual side is also often driven by the client requirements or a videogame design document. In certain training applications you start with bare hands, and one of the first steps is to put on safety items such as gloves. This alone means you either show skin features or hide them, using a ghost shader, for example.

A VR application may also let you customize your skin color and hand size. Supporting more than one hand size can be very important: in scenarios where you're representing yourself, such as a realistic VR collaboration app or a training application, having hands of a noticeably different size from your own can break immersion.

Different skin shader variations: big hands on top, small hands at the bottom

To avoid supporting only a single size (which in many applications tends to be a large male hand), we opted to support two hand sizes: big hands and small hands. Most adult hands fit reasonably well into one of these two groups.

In the future we might add the possibility to procedurally change hand sizes, but for now I find this the best tradeoff between inclusiveness, flexibility, and required effort.