A new paper from researchers at Disney Research and several universities describes an approach to procedural speech animation based on deep learning. The system samples audio recordings of human speech and uses them to automatically generate matching mouth animation. The method has applications ranging from increased efficiency in animation pipelines to more convincing social VR interactions, where it could animate the speech of avatars in real time.

Researchers from Disney Research, University of East Anglia, California Institute of Technology, and Carnegie Mellon University have authored a paper titled “A Deep Learning Approach for Generalized Speech Animation.” The paper describes a system trained with a deep learning (neural network) approach, using eight hours of reference footage (2,543 sentences) from a single speaker to teach the system the shape the mouth should make during various units of speech (called phonemes) and combinations thereof.

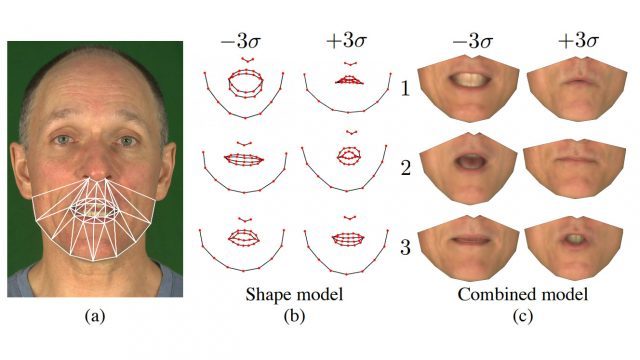

Below: The face on the right is the reference footage. The left face is overlaid with a mouth generated from the system based only on the audio input, after training with the video.

The trained system can then be used to analyze audio from any speaker and automatically generate the corresponding mouth shapes, which can then be applied to a face model for automated speech animation. The researchers say the system is speaker-independent and can “approximate other languages.”

> We introduce a simple and effective deep learning approach to automatically generate natural looking speech animation that synchronizes to input speech. Our approach uses a sliding window predictor that learns arbitrary nonlinear mappings from phoneme label input sequences to mouth movements in a way that accurately captures natural motion and visual coarticulation effects. Our deep learning approach enjoys several attractive properties: it runs in real-time, requires minimal parameter tuning, generalizes well to novel input speech sequences, is easily edited to create stylized and emotional speech, and is compatible with existing animation retargeting approaches.
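
To make the "sliding window predictor" idea a little more concrete, here is a minimal Python sketch. It is not the researchers' implementation: the names `TOY_OPENNESS`, `toy_predictor`, and `predict_sequence` are hypothetical, the lookup table stands in for a trained neural network, the single "mouth openness" value stands in for a full set of face parameters, and averaging the overlapping window outputs is an assumption about how per-window predictions could be merged into one curve.

```python
from typing import Callable, List, Sequence

# Hypothetical stand-in for a trained network: one "mouth openness" value per phoneme.
TOY_OPENNESS = {"sil": 0.0, "m": 0.0, "aa": 1.0, "iy": 0.4}


def toy_predictor(chunk: Sequence[str]) -> List[float]:
    """Map a window of phoneme labels to a window of mouth-openness values.

    Each frame is pulled halfway toward the window mean, a crude imitation of
    neighbouring sounds influencing each other (coarticulation).
    """
    targets = [TOY_OPENNESS[p] for p in chunk]
    mean = sum(targets) / len(targets)
    return [0.5 * t + 0.5 * mean for t in targets]


def predict_sequence(
    labels: Sequence[str],
    predictor: Callable[[Sequence[str]], List[float]],
    window: int = 5,
) -> List[float]:
    """Slide a fixed-size window over per-frame phoneme labels.

    Every window yields one prediction per frame it covers; predictions that
    land on the same frame are averaged (an assumed merging strategy).
    """
    pad = window // 2
    padded = ["sil"] * pad + list(labels) + ["sil"] * pad  # silence at both ends
    sums = [0.0] * len(padded)
    counts = [0] * len(padded)
    for start in range(len(padded) - window + 1):
        outputs = predictor(padded[start:start + window])
        for offset, value in enumerate(outputs):
            sums[start + offset] += value
            counts[start + offset] += 1
    averaged = [s / c for s, c in zip(sums, counts)]
    return averaged[pad:len(padded) - pad]  # drop the padding frames


if __name__ == "__main__":
    frames = ["m", "m", "aa", "aa", "aa", "iy", "iy"]
    print(predict_sequence(frames, toy_predictor))
```

In a real system the predictor would be a learned model and the output would drive many face parameters per frame, but the windowing and merging steps would follow the same general pattern.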

Creating speech animation that matches an audio recording for a CGI character is typically done by hand by a skilled animator. And while this system falls short of the sort of high-fidelity speech animation you’d expect from major CGI productions, it could certainly be used as an automated first pass in such productions, or to add passable speech animation in places where it might otherwise be impractical, such as NPC dialogue in a large RPG, or for low-budget projects that would benefit from speech animation but don’t have the means to hire an animator (instructional/training videos, academic projects, etc.).

In the case of VR, the system could be used to make social VR avatars more realistic by animating the avatar’s mouth in real time as the user speaks. True mouth tracking (optical or otherwise) would be the most accurate method for animating an avatar’s speech, but a procedural speech animation system like this one could be a practical stopgap if or until mouth tracking hardware becomes widespread.

Some social VR apps are already using various systems for animating mouths; Oculus also provides a lip sync plugin for Unity which aims to animate avatar mouths based on audio input. However, this new system based on deep learning appears to provide significantly higher detail and accuracy in speech animation than other approaches we’ve seen thus far.