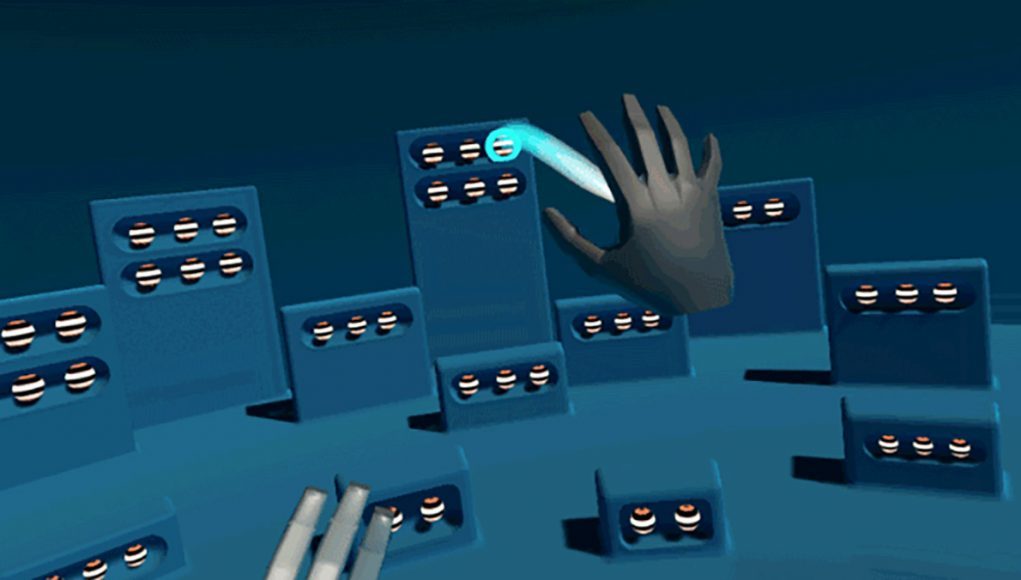

Experiment #3: Extendo Hands!

For Leap Motion’s third and final experiment, rather than simply gesturing to command a distant object to come within reach, we explored giving the user longer arms to grab the faraway object.

The idea was to project the virtual hands out to the distant object so that grabbing it would work exactly as it does when the user is standing right next to an object. Then, once the object was held, the user would have full control of it: they could pull it back within their normal reach, or even relocate and release it.

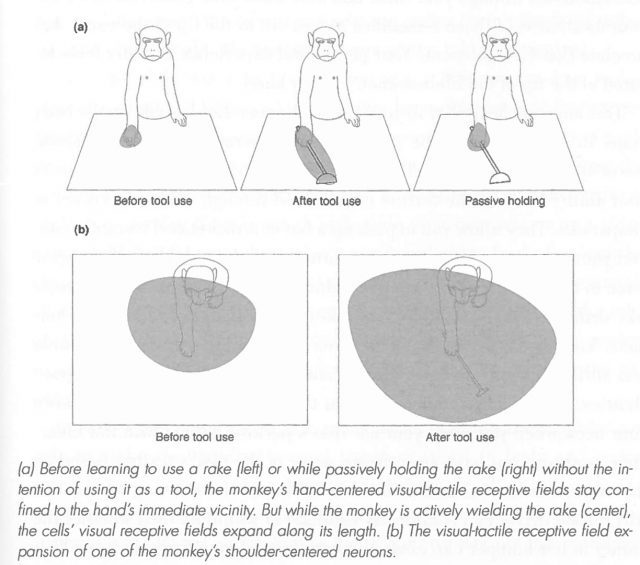

This approach touches on some interesting neuroscience concepts, such as body schema and peripersonal space. Our brains are constantly using incoming sensory information to model both the position of our bodies and their various parts in space, and the empty space around us in which we can take immediate action. When we use tools, our body schema expands to encompass the tool, and our peripersonal space grows to match our reach when using the tool. When we use a rake or drive a car, those tools literally become part of our body, as far as our brain is concerned.

Our body schema is a highly adaptable mental model evolved to adopt physical tools. It seems likely that extending our body schema through virtual means would feel almost as natural.

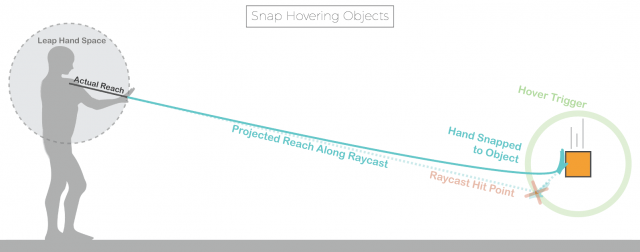

For this experiment, our approach centered on the idea of remapping the space of our physical reach onto a scaled-up projective space, effectively lengthening our arms out to a distant object. Again, the overall set of interactions could be described in stages: selecting/hovering, grabbing, and holding.

1. Selecting/Hovering

To select an object, we were able to reuse much of the logic from the previous executions: raycasting through the shoulder and palm to hit a larger proxy collider around distant objects. Once the raycast hit an object’s proxy collider, we projected a new blue graphic hand out to the object’s location, along with the underlying virtual hand, which contains the physics colliders that do the actual grabbing. We reused the snapping logic from previous executions so that when an object was hovered, the blue projected hand snapped to a position slightly in front of the object, ready to grab it.
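The selection and snapping step can be sketched in Python. This is a minimal illustration under stated assumptions, not Leap Motion’s implementation: proxy colliders are modeled as spheres, the ray starts at the palm and points along the shoulder-to-palm direction, and the snapped hand sits a fixed, hypothetical `standoff` distance in front of the object on the line back toward the shoulder. All names (`ProxyTarget`, `pick_target`, `snap_position`) are made up for illustration.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(a: Vec3) -> Vec3:
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


@dataclass
class ProxyTarget:
    name: str
    center: Vec3
    proxy_radius: float  # assumed spherical proxy, larger than the visible object


def ray_sphere_hit(origin: Vec3, direction: Vec3, center: Vec3, radius: float) -> Optional[float]:
    """Distance along a unit-length ray to a sphere, or None on a miss."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # nearer intersection first
        if t >= 0:
            return t
    return None  # sphere lies entirely behind the ray origin


def pick_target(shoulder: Vec3, palm: Vec3, targets: Iterable[ProxyTarget]) -> Optional[ProxyTarget]:
    """Ray along the shoulder-to-palm line, starting at the palm; return the nearest proxy hit."""
    direction = normalize(sub(palm, shoulder))
    best, best_t = None, math.inf
    for target in targets:
        t = ray_sphere_hit(palm, direction, target.center, target.proxy_radius)
        if t is not None and t < best_t:
            best, best_t = target, t
    return best


def snap_position(shoulder: Vec3, target: ProxyTarget, standoff: float = 0.1) -> Vec3:
    """Place the projected hand `standoff` scene units (metres assumed) in front of the object, toward the user."""
    toward_user = normalize(sub(shoulder, target.center))
    return add(target.center, scale(toward_user, standoff))
```

Using the enlarged proxy radius rather than the object’s own collider is what makes small, distant objects forgiving to point at; the nearest-hit rule resolves overlapping proxies.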

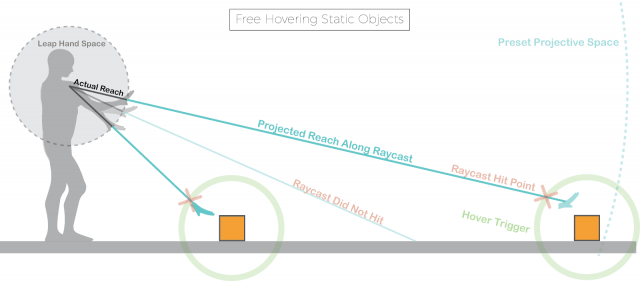

As an alternative, we tried an approach without snapping: hovering over a distant object would project out a green hand, but the user would retain full control of it. The idea was to allow full freedom of manipulation, including soft contact, for distant objects.

To do this we remapped the reachable range of hands onto a preset projective space that was large enough to encompass the farthest object. Then whenever the raycast hit an object’s proxy collider, we would simply send out the projected hand to its corresponding position within the projective space, letting it move freely so long as the raycast was still hitting the proxy collider. This created a small bubble around each object where free hovering with a projected hand was possible.
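A minimal sketch of this free-hover remapping, in Python. It assumes the shoulder as the origin of the remapping, a known physical `arm_reach`, and a preset projective space whose radius is the distance to the farthest object; the function names are hypothetical. Whether the ray currently hits a proxy collider is passed in as a flag (for example, from a picking routine like the one sketched for the selection step).

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def projective_scale(shoulder: Vec3, object_positions: Sequence[Vec3], arm_reach: float) -> float:
    """Scale factor that stretches physical reach out to the farthest object.

    The projective space is preset: its radius is the distance to the farthest
    object, so arm_reach * scale covers every object in the scene.
    """
    farthest = max(math.dist(shoulder, p) for p in object_positions)
    return max(1.0, farthest / arm_reach)  # never shrink reach below 1:1


def project_hand(shoulder: Vec3, hand: Vec3, scale: float) -> Vec3:
    """Map the real hand into projective space by scaling its offset from the shoulder."""
    return tuple(s + scale * (h - s) for s, h in zip(shoulder, hand))


def free_hover_position(shoulder: Vec3, hand: Vec3, scale: float, ray_hits_proxy: bool) -> Optional[Vec3]:
    """Projected hand for free hover, shown only inside an object's proxy 'bubble'."""
    if not ray_hits_proxy:
        return None  # outside every bubble: no projected hand
    return project_hand(shoulder, hand, scale)
```

With `arm_reach=0.5` and a farthest object 6 m away, the scale is 12, so a 1 cm movement of the real hand moves the projected hand 12 cm. That magnification is why soft contact with distant objects tended to turn into slaps.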

Regular soft contact interaction with virtual objects takes a bit of getting used to, since from the perspective of a game engine, the hands are immovable objects with unstoppable force. This effect is multiplied when operating in a projectively scaled-up space: a small movement of the real hand is magnified in proportion to how far away the projected hand is. Often, when trying to roll our hand over a distant object, we instead ended up forcefully slapping it away. After some tests, we removed free hover and stuck with snap hovering.

2. Grabbing

Grabbing in this execution was as simple as grabbing any other virtual object with our underlying virtual hand model. Since we’d already snapped the blue projected hand to a convenient transform directly in front of the distant object, all we had to do was grab it. Once the object was held, we switched to the projective-space holding behavior that was the real core of this execution.

3. Holding

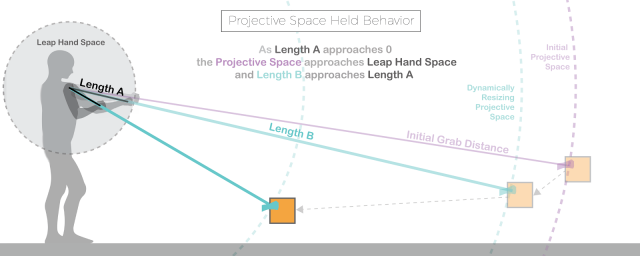

At the moment a distant object is grabbed, the distance to that object is used to create a projective space onto which the user’s actual reach is remapped. Within this projective space, moving the hand in an arc moves the projected hand along the corresponding, scaled-up arc.

However, as the user brings their hand back toward their body, the projective space dynamically resizes: as the distance between the actual hand and a reference shoulder position approaches zero, the projective space approaches the actual reach space.

Once the hand is almost as far back as one can bring it, and the projective space is almost equal to actual reach space, the projected hand fuses into the actual hand and the user is left holding the object directly.
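The held behavior can be sketched as a scale factor that depends on the hand’s distance from the shoulder. The text above describes the projective space only qualitatively (set by the object’s distance at grab time, shrinking toward actual reach space as the hand approaches the shoulder, then fusing), so the linear shrink curve and the `fuse_distance` threshold below are our assumptions, and `HeldProjection` is a hypothetical name.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class HeldProjection:
    shoulder: Vec3
    grab_hand_distance: float    # d0: hand-to-shoulder distance at the moment of grabbing
    grab_scale: float            # s0: scale that puts the projected hand on the object
    fuse_distance: float = 0.15  # hypothetical fuse threshold, in metres
    fused: bool = False

    @classmethod
    def at_grab(cls, shoulder: Vec3, hand: Vec3, obj: Vec3, fuse_distance: float = 0.15) -> "HeldProjection":
        """Build the projective space from the object's distance at grab time."""
        d0 = math.dist(shoulder, hand)
        if d0 == 0:
            raise ValueError("hand must not coincide with the shoulder reference")
        s0 = max(1.0, math.dist(shoulder, obj) / d0)
        return cls(shoulder, d0, s0, fuse_distance)

    def current_scale(self, hand: Vec3) -> float:
        """Shrink the scale linearly toward 1 as the hand approaches the shoulder (assumed curve)."""
        d = math.dist(self.shoulder, hand)
        t = min(d / self.grab_hand_distance, 1.0)  # reaching past the grab pose keeps s0
        return 1.0 + (self.grab_scale - 1.0) * t

    def update(self, hand: Vec3) -> Vec3:
        """Return the projected hand position for this frame."""
        if not self.fused and math.dist(self.shoulder, hand) <= self.fuse_distance:
            self.fused = True  # projected hand merges into the real hand for the rest of the hold
        if self.fused:
            return hand
        s = self.current_scale(hand)
        return tuple(sh + s * (h - sh) for sh, h in zip(self.shoulder, hand))
```

For example, grabbing an object 6 m away with the hand 0.5 m from the shoulder gives a grab-time scale of 12. Pulling the hand in to 0.25 m shrinks the scale to 6.5, placing the object at 1.625 m, and once the hand comes within 0.15 m the projected hand fuses with the real one.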

The logic behind this dynamically resizing projective space sounds quite complicated, but in practice it feels like a very natural extension of one’s hands.

After a bit of practice these Extendo Hands begin to feel almost like regular hands just with a longer reach. They make distant throwing, catching, and relocation possible and fun!

Extendo Hands feel quite magical. After a few minutes adjusting to the activation heuristics, one could really feel a sense of peripersonal space expand to encompass the whole environment. Objects previously out of reach were now merely delayed by the extra second it took for the projected hand to travel over to them. More so than the other two executions, this one felt like a true virtual augmentation of the body’s capabilities. We look forward to seeing more work in this area as our body schemas are stretched to their limits and infused with superpowers.