One of the biggest limitations of current VR technology is the inability to convey a realistic and complete sense of touch. So far, the most you would feel from a modern consumer VR system, like the HTC Vive, is vibration through a controller. From what we saw at SIGGRAPH 2016, it seems that the logical next step is simply to produce more kinds of vibrations, at more points around the body, all synced with proper visuals and sound in VR.

Developers, researchers, and companies from around the world presented experimental haptic technologies at SIGGRAPH this week, ranging from novel hardware to narrative experiences enhanced simply by a few vibrating units on your back.

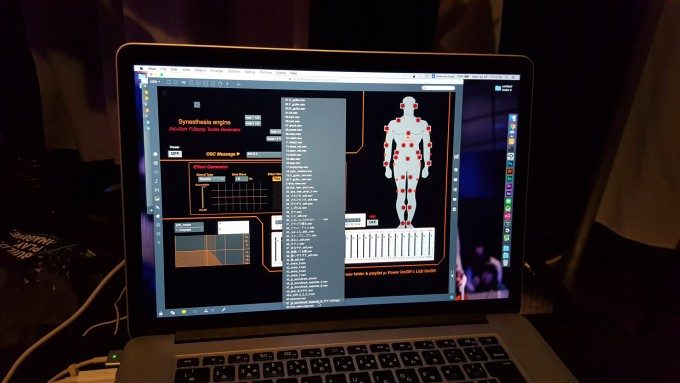

Synesthesia Suit

One of the first things I tried was the Synesthesia Suit by researchers from Keio University. You may know it already as it was first seen in the demonstration of Rez Infinite on the PlayStation VR back in 2015. Now they’re back again showing off the suit with an additional VR experience they developed.

First I played Rez Infinite with the PSVR and the suit. It did seem to add more immersion, but ultimately did not make the game feel that much more real. Then I tried the other experience with a different suit running on the HTC Vive (an unnamed demo for show purposes) and it did actually impress me with how real some of it felt.

This other suit had its vibrators placed differently from the first one I tried; there were even two on my head! The vibrators are actually voice-coil-based actuators, which are capable of a wide range of vibrations, according to Kouta Minamizawa, the associate professor who worked on the suit.

The demo put me through several abstract scenes with similarly weird synthetic sound that reacts to what you do, or what happens to you. There were lasers hitting me, phantom beings passing through me, floating orbs of energy all throughout, and other random things. This time it was painfully clear—almost but not quite literally—that the vibration units on the suit were more capable of complex feedback than you’d think, from smooth buffets of energy, to sharp piercings of lasers. But there’s also another trick.

The trick is in synchronizing vibrations of the right intensity with the right visuals and the right audio, which is the key lesson from all of these projects. Without those ingredients, the haptics of the suit would simply feel like vibrations from a suit, not something actually touching you. That may be because the brain expects to feel a certain kind of feedback when it sees certain things happen to the body, and likewise expects a matching visual to better understand what is being felt. Audio can also play a role in those expectations.

So if you’re missing any of those components, haptics can come off as fake. That may have been what was holding back Rez Infinite from showing off the full potential of the suit, since the game, at least in the demo I tried, didn’t have anything visually come into contact with my body in VR, so my brain wouldn’t have any context for what the vibration represents.

In the unnamed demo they had with the other suit, there was all manner of stuff touching and hitting me and it felt a lot more real; it showed me for the first time the potential for vibration-based body haptics in VR.

Much of the time, however, it actually wasn’t very effective, as what I saw wasn’t exactly in sync with the vibrations. That was mostly because the suit didn’t actually do any body tracking, and just relied on assumptions of where my body would be, based on the position of the head and hands from the Vive. In the future that could change of course, as body tracking technology gets better and more widespread. Nonetheless, when it worked, it was on the verge of impressive. It truly felt like lasers were hitting my noggin at one point, and that’s a good thing, at least for now.
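To make that guesswork concrete, here is a minimal Python sketch of how a torso position might be inferred from head and hand tracking alone, and how a virtual hit could then be routed to the closest vibration unit. This is not the Keio team's method: the function names, the actuator layout, the `neck_drop` distance, and the `hand_weight` blend are all hypothetical values chosen for illustration.

```python
import math

# Hypothetical actuator layout: name -> (x, y, z) offset in metres
# from the estimated chest centre. Not the real suit's layout.
ACTUATOR_OFFSETS = {
    "chest": (0.0, 0.0, 0.1),
    "back": (0.0, 0.0, -0.1),
    "left_shoulder": (-0.2, 0.15, 0.0),
    "right_shoulder": (0.2, 0.15, 0.0),
}


def estimate_chest(head, left_hand, right_hand, neck_drop=0.35, hand_weight=0.2):
    """Guess the chest position from tracked head and hand positions.

    Assumption: the chest sits `neck_drop` metres below the head and
    leans slightly toward the midpoint between the hands.
    """
    mid_x = (left_hand[0] + right_hand[0]) / 2
    mid_z = (left_hand[2] + right_hand[2]) / 2
    x = (1 - hand_weight) * head[0] + hand_weight * mid_x
    z = (1 - hand_weight) * head[2] + hand_weight * mid_z
    y = head[1] - neck_drop
    return (x, y, z)


def nearest_actuator(hit_point, chest):
    """Return (name, distance) of the actuator closest to a virtual hit.

    Assumption: the torso is upright and facing +z, so offsets are
    added to the chest position without any rotation.
    """
    best_name, best_dist = None, math.inf
    for name, offset in ACTUATOR_OFFSETS.items():
        position = tuple(c + o for c, o in zip(chest, offset))
        dist = math.dist(hit_point, position)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name, best_dist


if __name__ == "__main__":
    chest = estimate_chest((0.0, 1.7, 0.0), (-0.3, 1.0, 0.4), (0.3, 1.0, 0.4))
    print(nearest_actuator((0.0, 1.35, 0.3), chest))
```

Note that the sketch ignores torso rotation and posture entirely. If the player twists or crouches, the guessed chest position drifts away from the real one and the wrong unit fires, which is the kind of mismatch described above.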

It’s true, however, that this concept of higher realism through perfectly synchronized haptics, visuals, and audio isn’t really new. It’s actually a rather simple and logical idea that has been proven for a long time. But with the rise of consumer VR technology and the new levels of ‘presence’ it has brought, the topic is more relevant than ever to both researchers and businesses. In any case, many are reaching for the holy grail of VR in their own way, and haptics is an important part of getting there.

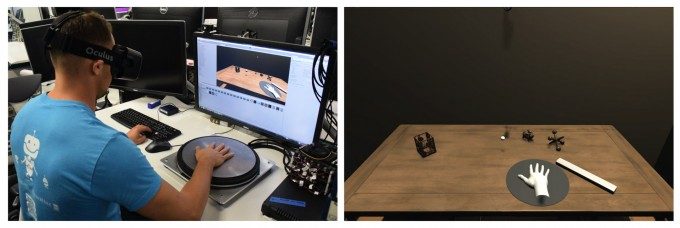

Oculus HapticWave

Work from the other researchers at SIGGRAPH reinforces this overarching concept of matched feedback. That is especially true of Oculus Research’s ‘HapticWave’ project, a multi-sensory VR experiment built around a vibrating metal plate. Users place a hand on the plate, put on an Oculus Rift, and control a bouncing ball, or a moving spark, on a virtual table. They feel directional vibrations that mimic, with a high degree of accuracy, what is happening on the table, and hear it through positional audio from the Rift’s headphones.

When I tried it, it took a considerable amount of time to get used to the locked-in calibration they had to set for efficient show demos, and in fact I had to try it several times. But when it worked for a few split moments, the objects again felt more physical than virtual. So it supports the idea that matched haptics, visuals, and audio are far more valuable together than they are individually.

We also can’t forget that Oculus, one of the biggest consumer VR players, is involved with HapticWave, and a few have questioned what the company is even doing with such a bulky and kind of ‘useless’ metal plate. The answer is basically what most researchers presenting at SIGGRAPH would say: this is research to further our understanding of the field, not necessarily something that will be developed into a consumer product.

In this case, the aim is to study the human perception of haptics: its limits, the effective baseline, and so on. The HapticWave technology is partly a tool for making that study possible, and one that will continue to advance as the research progresses. It’s also useful to keep in mind that Oculus Research, which created HapticWave, is a division at Oculus focused on technology that may be beneficial in the long term, 5 to 10 years out, so what they’re doing with HapticWave might just be a slice of the groundwork they’re laying.

Read the HapticWave Research Paper