A Step Toward “creating the world’s first HDR headset”

After giving an overview of Half Dome 3, Lanman went on to share new research from Facebook Reality Labs which he called “the first step towards creating the world’s first HDR headset.”

HDR, short for high dynamic range, is basically shorthand for a display with a very high contrast ratio that is capable of significantly more brightness. The goal of an HDR display is to reproduce the realistic range of brightness that you’d find in the real world, all the way from dark shadows to bright reflections of the Sun.

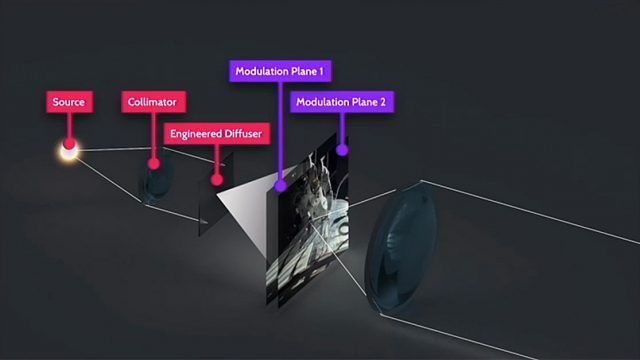

Lanman said that Facebook Reality Labs researchers created an optical pipeline that preserves as much of the light from the source as possible, using a collimator and an engineered diffuser. The image then undergoes dual modulation to boost contrast, and finally passes through a lens that’s specially designed to retain contrast.

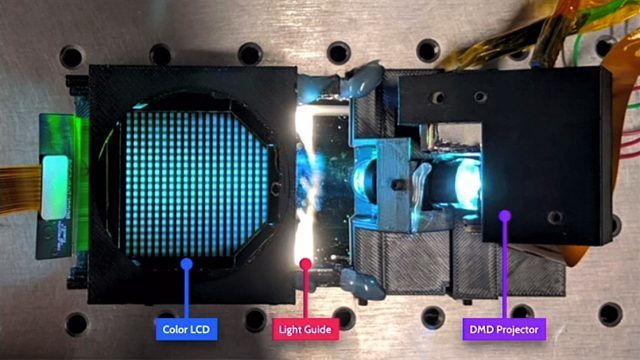
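To give a rough sense of why dual modulation boosts contrast: in the idealized case, two modulators placed in series multiply their individual contrast ratios. The sketch below assumes that ideal behavior and uses made-up contrast values; neither the function name nor the numbers come from Lanman's talk, and a real system would lose some contrast to scatter and leakage.

```python
def combined_contrast(first_stage: float, second_stage: float) -> float:
    """Idealized contrast ratio of two light modulators in series.

    Assumes each stage attenuates light independently, so the ratios
    multiply. Real optics fall short of this because of scatter and leakage.
    """
    return first_stage * second_stage


# Hypothetical per-stage contrast ratios, chosen only for illustration.
print(combined_contrast(1000.0, 1000.0))  # 1000000.0
```

This is also why the final lens matters: any contrast lost to scatter after the modulators eats directly into that combined figure.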

The team built a benchtop prototype to prove out the pipeline, creating a working HDR display capable of 6,000 nits of brightness (compared to around 100 nits for the typical VR headset, according to Lanman). But, he said, the benchtop prototype was impractical to miniaturize for use in a headset, largely because the contrast-preserving lens was far too large.
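For a sense of scale, the two brightness figures above can be compared directly. The quick calculation below uses only the 6,000-nit and 100-nit numbers cited by Lanman; expressing the gap in photographic "stops" (doublings of brightness) is our own framing, not something from the talk.

```python
import math

PROTOTYPE_NITS = 6000.0   # benchtop HDR prototype, per the talk
TYPICAL_VR_NITS = 100.0   # typical VR headset, according to Lanman


def brightness_ratio(bright: float, dim: float) -> float:
    """How many times brighter one display's peak is than another's."""
    return bright / dim


def brightness_stops(bright: float, dim: float) -> float:
    """The same gap expressed in stops, i.e. doublings of brightness."""
    return math.log2(bright / dim)


print(brightness_ratio(PROTOTYPE_NITS, TYPICAL_VR_NITS))            # 60.0
print(round(brightness_stops(PROTOTYPE_NITS, TYPICAL_VR_NITS), 2))  # 5.91
```

In other words, the prototype's peak brightness is 60 times that of a typical headset, or a little under six doublings.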

So the team went about creating a ‘folded’ version of the lens. “This you could get on your head,” Lanman said. The work is detailed in a paper titled “High Dynamic Range Near-eye Displays,” which was published during the conference.

Pupil Steering Theory

Lanman also touched on ‘pupil steering’ retinal displays for AR headsets. Pupil steering means moving the retinal display to stay aligned with the movements of your eyes, so that the headset can have a wide field of view without sacrificing a large eyebox.

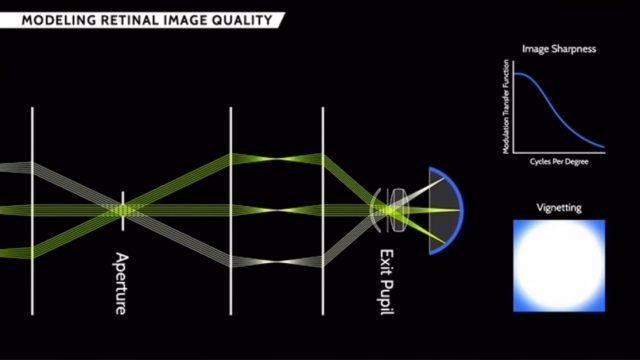

Before building a pupil steering system, researchers at Facebook Reality Labs wanted to understand the fundamental requirements of such a system: in how many degrees of freedom must the retinal display be moved to achieve optimal performance?

To find out, the researchers built a simulated retinal display to explore basic questions like ‘How bright would the image be?’ and ‘What will happen to the image during fast eye movements if the pupil steering can’t keep up?’ The findings were published last year in a paper titled “Retinal Image Quality in Near-eye Pupil-steered Systems.”

– – — – –

“After five and a half years we’re having a lot of fun working across a broader scope of research than many of us have in the past,” Lanman said, wrapping up his presentation. “Starting at level 1, we’re trying to push fundamental vision science to drive requirements for our systems. We still have plenty of not-quite-ready for primetime ideas at levels 2 through 4. And occasionally, we dive into startup mode, and we do things at level 5 and beyond, like Half Dome. We’re so happy to get to pull back the curtain here at [the conference]. And of course, we hope to inspire others.”

Interested in R&D? Check out our recent coverage of Facebook Reality Labs’ holographic folded optics and field of view expansion for holographic displays.