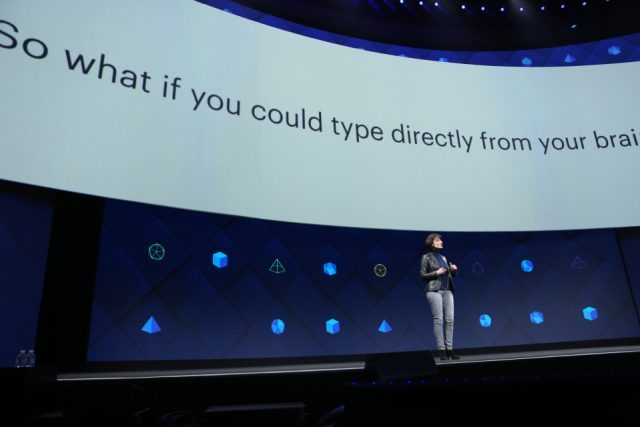

Regina Dugan, VP of Engineering at Facebook’s Building 8 skunkworks and former head of DARPA, took the stage today at F8, the company’s annual developer conference, to highlight some of the brain-computer interface research going on at the world’s most far-reaching social network. While it’s still early days, Facebook wants to start tackling the AR input problem now with technology that would essentially read your mind.

Over the past six months, Facebook has assembled a team of more than 60 scientists, engineers and system integrators specializing in machine learning methods for decoding speech and language, in optical neuroimaging systems, and in what Dugan called “the most advanced neural prosthesis in the world.” All of this is in an effort to answer one question: how can people interact with the wider digital world when they can’t speak and don’t have use of their hands?

At first blush, the question may seem geared entirely toward people without the use of their limbs, such as those with Locked-in Syndrome, a condition that causes paralysis of nearly all voluntary muscles and an inability to produce speech. But in the realm of consumer tech, even a simple “brain-mouse for AR,” as Dugan calls it, that lets you click a binary ‘yes’ or ‘no’ could have big implications for the field. The goal, she says, is direct brain-to-text typing, and “it’s just the kind of fluid computer interface needed for AR.”

While research into brain-computer interfaces has mainly served people with these sorts of debilitating conditions, the overall goal of the project, Dugan says, is to create a brain-computer system that lets you type 100 words per minute, reportedly five times faster than you can type on a smartphone, with words taken straight from the speech center of your brain. And it isn’t aimed only at people with disabilities, but at everyone.

“We’re talking about decoding those words, the ones you’ve already decided to share by sending them to the speech center of your brain: a silent speech-interface with all the flexibility and speed of voice, but with the privacy of typed text,” Dugan says. Such an interface would be invaluable for an always-on wearable like a light, glasses-like AR headset.

Because the basic systems in use today don’t operate in real time and require surgery to implant electrodes, a giant barrier that has yet to be overcome, Facebook’s new team is researching non-invasive sensors based on optical imaging. Dugan says these would need to sample data hundreds of times per second with millimeter-level precision. It’s a tall order, but technically feasible, she says.

This could be done by bombarding the brain with quasi-ballistic photons, light particles that Dugan says can give more accurate readings of the brain than contemporary methods. A non-invasive optical imaging system needs light that can pass through hair, skull, and all the wibbly bits in between, and then read the brain for activity. Again, it’s early days, but Facebook has identified optical imaging as the best place to start.

The big picture, Dugan says, is about creating ways for people to connect even across language barriers by reading the semantic meaning behind words in human languages like Mandarin or Spanish.

Check out Facebook’s F8 day-2 keynote; Regina Dugan’s talk starts at 1:18:00.