Futuremark have now released the full version of their long-awaited, dedicated virtual reality benchmark, VRMark. And, after months of research and development, the company has found itself having to redefine its own views on how the difficult subject of VR performance testing should be tackled.

Futuremark are developers of some of the world’s best-known and most widely used performance testing software. In enthusiast PC gaming circles, their visually impressive proprietary synthetic gaming benchmark series, 3DMark, has been the basis for many a GPU fanboy debate over the years, with each new version offering a glimpse of what the next generation of PC gaming visuals might deliver and what PC hardware fanatics can aspire to achieve.

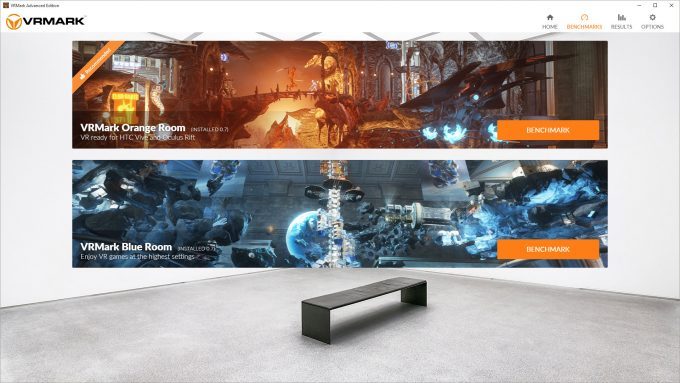

It was therefore inevitable that, once virtual reality reached the consumer phase, the company would take an active part in VR’s renaissance. Immersive gaming arrived with lofty initial hardware requirements and a necessary obsession with low-latency visuals and minimum frame rates of 90 FPS, so surely a new Futuremark product, one focused purely on the needs of VR users, would be a slam dunk for the company. VRMark is the company’s first foray into the world of consumer VR performance testing and recently launched in full via Steam, offering a selection of pure performance and experiential ‘benchmarks’, the latter viewable inside a VR headset.
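To put that 90 FPS figure in perspective, the short calculation below converts a target refresh rate into the time available to render each frame. It is a minimal illustrative sketch; the function name `frame_budget_ms` is our own and has nothing to do with VRMark itself.

```python
# Per-frame time budget implied by a target refresh rate.
# 90 Hz is the VR target quoted above; 60 Hz is shown for comparison.

def frame_budget_ms(refresh_hz: float) -> float:
    """Return the time available to render one frame, in milliseconds."""
    return 1000.0 / refresh_hz

for hz in (60, 90):
    print(f"{hz} Hz -> {frame_budget_ms(hz):.1f} ms per frame")
```

At 90 Hz the whole frame, both eye views included, has to be ready in roughly 11.1 ms, compared with about 16.7 ms at the 60 Hz many flat-screen games target.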

However, as anyone who has experienced enough virtual reality across different platforms will tell you, putting a number on how well a VR system performs is anything but simple. Dedicated VR headsets bring complex proprietary rendering techniques and specialist display technology, much of which simply hadn’t been attempted at a consumer level before. The biggest challenge, however, and the biggest set of variables Futuremark had to account for, was human physiology and the full gamut of possible human responses to a VR system.

Futuremark initially approached the issue from a purely analytical perspective, as you might expect. You may remember that we went hands-on with a very early version of the software last year, which at the time came complete with some pretty expensive additional hardware. Futuremark’s aim then was, at least in part, to measure the much-coveted ‘motion to photons’ value: the time between a user’s movement and the corresponding updated image reaching their eyes. However, if you’ve popped onto Steam to purchase the newly released VRMark, you’ll notice that it does not list a ‘USB oscilloscope’ or ‘photo-sensitive sensor’ as requirements. Why is that?

We asked Futuremark’s James Gallagher to enlighten us.

“After many months of testing, we’ve seen that there are more significant factors that affect the user’s experience,” he says, “Simply put, measuring the latency of popular headsets does not provide meaningful insight into the actual VR experience. What’s more, we’ve seen that it can be misleading to infer anything about VR performance based on latency figures alone.” Gallagher continues, “We’ve also found that the concept of ‘VR-ready’ is more subtle than a simple pass or fail. VR headsets use many clever techniques to compensate for latency and missed frames. Techniques like Asynchronous Timewarp, frame reprojection, motion prediction, and image warping are surprisingly effective.”

Gallagher is, of course, referring to techniques that almost all current consumer VR hardware vendors now employ to help cope with the rigours of hitting those required frame rates and the unpredictable nature of PC (and, in the case of PSVR, console) performance. All these techniques (Oculus has Asynchronous Timewarp and now Spacewarp, while Valve’s SteamVR recently introduced Asynchronous Reprojection) work along similar lines toward the same goal: ensuring that the motions you think you’re making in VR (say, when you turn your head) match what your eyes see inside the headset. The upshot is minimised judder and stuttering, two effects very likely to induce nausea in VR users.
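A toy model helps show why a game running below 90 FPS can still feel smooth. The sketch below assumes a 90 Hz headset whose compositor shows a freshly rendered frame at each refresh if one is ready, and otherwise re-presents the previous frame (which a real compositor would warp to the latest head pose). This is a deliberate simplification of our own, not how Oculus or Valve actually implement their techniques; `REFRESH_HZ` and `simulate` are hypothetical names.

```python
REFRESH_HZ = 90  # assumed headset refresh rate

def simulate(app_fps: int, refreshes: int = REFRESH_HZ) -> tuple[int, int]:
    """Count display refreshes served by fresh vs re-presented frames.

    The app is assumed to render at a steady app_fps. Integer arithmetic
    keeps the timing exact. Returns (fresh_frames, reprojected_frames).
    """
    fresh = reprojected = 0
    last_shown = 0  # number of app frames completed at the last fresh refresh
    for i in range(1, refreshes + 1):
        # App frames completed by refresh i (time i / REFRESH_HZ seconds).
        finished = (i * app_fps) // REFRESH_HZ
        if finished > last_shown:
            fresh += 1  # a new frame is ready: show it
            last_shown = finished
        else:
            reprojected += 1  # no new frame: re-present the previous one
    return fresh, reprojected

for fps in (90, 75, 45):
    fresh, warped = simulate(fps)
    print(f"app at {fps} FPS: {fresh} fresh + {warped} reprojected = {fresh + warped} frames shown")
```

In every case the display still receives 90 frames per second; what changes is how many of them are reprojected rather than newly rendered, which is exactly the trade-off Gallagher suggests users judge with their own eyes.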

“With VRMark, you can judge the effectiveness of these techniques for yourself,” says Gallagher. “This lets you judge the quality of the VR experience with your own eyes. You can see for yourself if you notice any latency, stuttering, or dropped frames.” Gallagher also shares something surprising from their research: “In our own tests, most people could not identify the under-performing system, even when the frame rate was consistently below the target. You may find that you can get a comfortable VR experience on relatively inexpensive hardware.”

Here’s James Gallagher describing Futuremark’s VR benchmarking methodology for consumers in more detail, in his own words.

- System A is VR-ready with room to grow for more demanding experiences.

- System B is VR-ready for games designed for the recommended performance requirements of the HTC Vive and Oculus Rift.

- System C is technically not VR-ready but is still able to provide a good VR experience thanks to VR software techniques.

- System D is not VR-ready and cannot provide a good VR experience.

With all of that laid out, I asked Gallagher why, if Futuremark are now recommending that people adopt a ‘see for yourself’ methodology for VR benchmarking, he believes VRMark is needed at all. In theory, any VR application or game could be used with the above methodology. Why should people invest in VRMark?