A number of standalone VR headsets will be hitting the market in 2018, but so far none of them offer positional (AKA 6DOF) controller input, one of the defining features of high-end tethered headsets. But we could see that change in the near future, thanks to research from Google which details a system for low-cost, mobile inside-out VR controller tracking.

The first standalone VR headsets offering inside-out positional head tracking are soon to hit the market: the Lenovo Mirage Solo (part of Google’s Daydream ecosystem), and HTC Vive Focus. But both headsets have controllers which track rotation only, meaning that hand input is limited to more abstract and less immersive movements.

In a research paper (first spotted by Dimitri Diakopoulos), Google says that many standalone headsets lack 6DOF controller tracking because of hardware expense, computational cost, and occlusion issues. The paper, titled Egocentric 6-DoF Tracking of Small Handheld Objects, goes on to demonstrate a computer-vision-based 6DOF controller tracking approach which works without active markers.

Authors Rohit Pandey, Pavel Pidlypenskyi, Shuoran Yang, and Christine Kaeser-Chen, all from Google, write, “Our key observation is that users’ hands and arms provide excellent context for where the controller is in the image, and are robust cues even when the controller itself might be occluded. To simplify the system, we use the same cameras for headset 6-DoF pose tracking on mobile HMDs as our input. In our experiments, they are a pair of stereo monochrome fisheye cameras. We do not require additional markers or hardware beyond a standard IMU based controller.”

The authors say that the method can unlock positional tracking for simple IMU-based controllers (like Daydream’s), and they believe it could one day be extended to controller-less hand-tracking as well.

Inside-out controller tracking approaches like Oculus’ Santa Cruz use cameras to look for IR LED markers hidden inside the controllers, and then compare the shape of the markers to a known shape to solve for the position of the controller. Google’s approach effectively aims to infer the position of the controller by looking at the user’s arms and hands, instead of glowing markers.

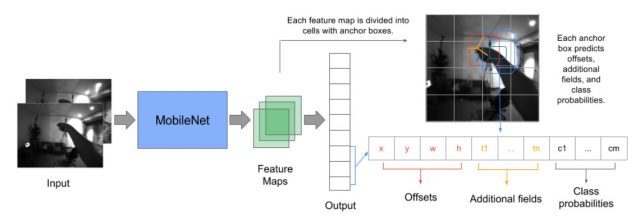

To do this, they captured a large dataset of images from the headset’s perspective, which show what it looks like when a user holds the controller in a certain way. Then they trained a neural network—a self-optimizing program—to look at those images and make guesses about the position of the controller. After learning from the dataset, the algorithm can use what it knows to infer the position of the controller from brand new images fed in from the headset in real time. IMU data from the controller is fused with the algorithm’s positional determination to improve accuracy.
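The article does not say how the IMU data and the vision estimate are combined. As a rough illustration only, the sketch below uses a simple complementary filter, a common generic technique that is not confirmed to be Google's method. The function name `complementary_fuse`, the velocity input, and the blend weight `alpha` are all hypothetical.

```python
def complementary_fuse(prev_pos, imu_velocity, dt, vision_pos, alpha=0.8):
    """Blend an IMU-predicted position with a vision-estimated position.

    Hypothetical sketch: NOT the paper's fusion method.
    prev_pos, vision_pos: (x, y, z) positions in the same units.
    imu_velocity: (vx, vy, vz) assumed to be derived from IMU data.
    alpha: weight given to the IMU prediction (0..1), an assumed tuning value.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    # Dead-reckon forward from the previous position using the IMU velocity.
    predicted = tuple(p + v * dt for p, v in zip(prev_pos, imu_velocity))
    # Pull the prediction toward the camera-based estimate to limit drift.
    return tuple(alpha * pr + (1.0 - alpha) * vi
                 for pr, vi in zip(predicted, vision_pos))


fused = complementary_fuse(
    prev_pos=(0.0, 0.0, 0.0),
    imu_velocity=(1.0, 0.0, 0.0),
    dt=0.1,
    vision_pos=(0.2, 0.0, 0.0),
    alpha=0.5,
)
```

A higher `alpha` trusts the fast but drift-prone IMU prediction more, while a lower value leans on the slower camera-based estimate.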

A video, which has since been removed, showed the view from the headset’s camera, with a user waving what looked like a Daydream controller around in front of it. Overlaid onto the image was a symbol marking the position of the controller, which impressively managed to follow the controller as the user moved their hand, even when the controller itself was completely blocked by the user’s arm.

To test the accuracy of their system, the authors captured the controller’s precise location using a commercial outside-in tracking system, and then compared it to the results of their computer-vision tracking system. They found a “mean average error of 33.5 millimeters in 3D keypoint prediction,” (a little more than one inch). Their system runs at 30 FPS on a “single mobile CPU core,” making it practical for use in mobile VR hardware, the authors say.
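To make the error figure concrete, here is a minimal sketch of how a mean 3D position error could be computed against ground-truth measurements. It assumes the metric is the average Euclidean distance between predicted and reference points, which the article does not spell out. The function name and the sample coordinates are made up for illustration.

```python
import math


def mean_keypoint_error_mm(predicted, ground_truth):
    """Average Euclidean distance (mm) between paired 3D points.

    Assumed metric; the paper's exact definition may differ.
    """
    if len(predicted) != len(ground_truth) or not predicted:
        raise ValueError("need two equal-length, non-empty point lists")
    total = sum(math.dist(p, g) for p, g in zip(predicted, ground_truth))
    return total / len(predicted)


# Made-up sample points in millimeters (not data from the paper).
predicted = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
ground_truth = [(3.0, 4.0, 0.0), (10.0, 0.0, 12.0)]
error_mm = mean_keypoint_error_mm(predicted, ground_truth)

# Converting the reported 33.5 mm figure to inches (25.4 mm per inch).
reported_inches = 33.5 / 25.4
```

With these sample points the individual errors are 5 mm and 12 mm, so the mean is 8.5 mm. The reported 33.5 mm works out to roughly 1.3 inches.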

And there are still improvements to be made. Interpolation between frames is suggested as a next step, and could significantly speed up tracking, as the current model predicts position on a frame-by-frame basis, rather than sharing information between frames, the team writes.
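The article does not describe how such interpolation would work. One simple reading is to estimate positions between two model predictions by linear interpolation, so the network does not have to run for every displayed frame. The sketch below illustrates that idea only; the function name, timestamps, and coordinates are hypothetical.

```python
def lerp_position(p0, t0, p1, t1, t):
    """Linearly interpolate a 3D position between two timestamped predictions.

    Illustrative only: the paper's proposed approach is not detailed in the article.
    """
    if t1 <= t0:
        raise ValueError("t1 must be later than t0")
    w = (t - t0) / (t1 - t0)  # 0 at t0, 1 at t1
    return tuple(a + w * (b - a) for a, b in zip(p0, p1))


# Two predictions one 30 FPS frame apart (made-up positions in mm).
frame_a = (0.0, 0.0, 0.0)
frame_b = (30.0, 0.0, -15.0)
midpoint = lerp_position(frame_a, 0.0, frame_b, 1 / 30, 1 / 60)
```

Here `midpoint` falls halfway between the two predictions, giving an extra position sample without another pass through the network.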

As for the dataset which Google used to train the algorithm, the company plans to make it publicly available, allowing other teams to train their own neural networks in an effort to improve the tracking system. The authors believe the dataset is the largest of its kind, consisting of some 547,000 stereo image pairs, labeled with the precise 6DOF position of the controller in each image. The dataset was compiled from 20 different users doing 13 different movements in various lighting conditions, they said.

– – — – –

We expect to hear more about this work, and the availability of the dataset, around Google’s annual I/O developer conference, held this year from May 8th to 10th.