Google announced Seurat at last year’s I/O developer conference, offering a brief glimpse of the new rendering technology, which is designed to reduce the complexity of ultra high-quality CGI assets so they can run in real time on mobile processors. Now, the company is open-sourcing Seurat so developers can customize the tool and use it for their own mobile VR projects.

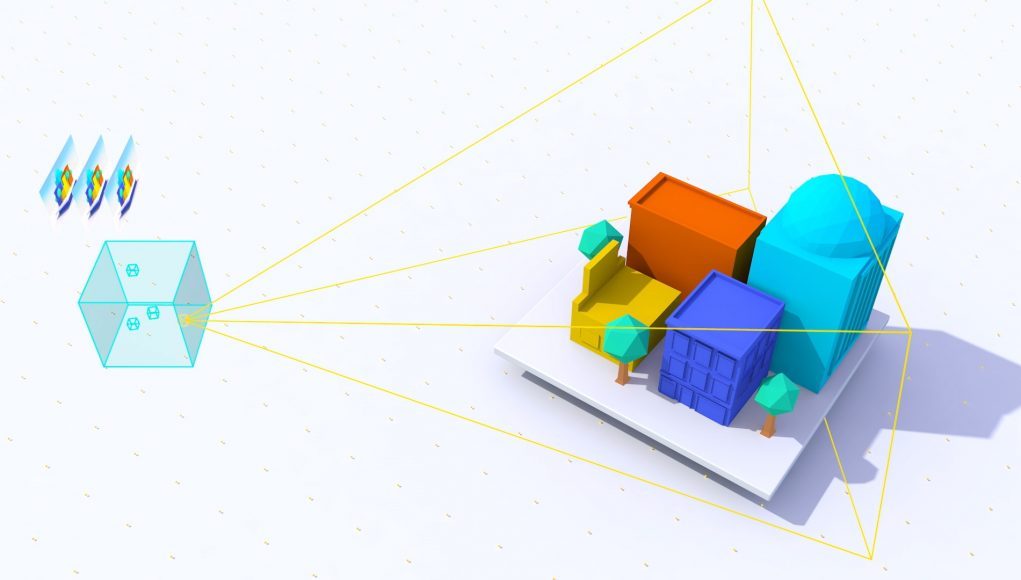

“Seurat works by taking advantage of the fact that VR scenes are typically viewed from within a limited viewing region, and leverages this to optimize the geometry and textures in your scene,” Google Software Engineer Manfred Ernst explains in a developer blog post. “It takes RGBD images (color and depth) as input and generates a textured mesh, targeting a configurable number of triangles, texture size, and fill rate, to simplify scenes beyond what traditional methods can achieve.”

Blade Runner: Revelations, which launched last week alongside Lenovo Mirage Solo, Google’s first 6DOF Daydream headset, uses Seurat to impressive effect. Developer studio Seismic Games used the rendering tech to bring a scene of 46.6 million triangles down to only 307,000, “improving performance by more than 100x with almost no loss in visual quality,” Google says.
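For a sense of scale, the triangle counts quoted above can be turned into a reduction factor with a few lines of Python. This is only arithmetic on the two published figures; the variable names are illustrative, and the geometry ratio should not be read as the performance gain itself, which Google states separately.

```python
# Triangle counts quoted for the Blade Runner: Revelations scene.
original_triangles = 46_600_000
simplified_triangles = 307_000

# How many times smaller the simplified mesh is.
reduction_factor = original_triangles / simplified_triangles

# Share of the original triangles that were removed, as a percentage.
percent_removed = (1 - simplified_triangles / original_triangles) * 100

print(f"Reduction factor: {reduction_factor:.1f}x")
print(f"Triangles removed: {percent_removed:.2f}%")
```

By this measure the simplified scene has roughly 152 times fewer triangles than the original, which fits with Google's "more than 100x" performance claim.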


To accomplish this, Seurat uses what the company calls ‘surface light-fields’, a process which involves taking the original ultra-high quality assets, defining a viewing area for the player, then sampling possible perspectives within that area to determine everything that could possibly be seen from within it.
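The idea of sampling perspectives inside a bounded viewing area can be sketched in a few lines of Python. This is a minimal illustration, not Seurat's actual capture pipeline: the function name `sample_viewpoints`, the cubic shape of the region, and the use of uniform random sampling are all assumptions made for the example.

```python
import random


def sample_viewpoints(center, half_extent, count, seed=0):
    """Return `count` camera positions inside a cube around `center`.

    Hypothetical helper: a cube of side 2 * half_extent stands in for the
    player's viewing area, and positions are drawn uniformly at random.
    """
    rng = random.Random(seed)  # seeded so the sample set is reproducible
    viewpoints = []
    for _ in range(count):
        # Offset each coordinate independently within the cube.
        position = tuple(
            c + rng.uniform(-half_extent, half_extent) for c in center
        )
        viewpoints.append(position)
    return viewpoints


# Example: 16 head positions within half a metre of a seated eye height.
viewpoints = sample_viewpoints(center=(0.0, 1.6, 0.0), half_extent=0.5, count=16)
for position in viewpoints[:3]:
    print(position)
```

In a real workflow, each sampled position would be used to render color and depth images of the full-quality scene, which is the RGBD input Ernst describes.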

This is largely useful for developers looking to create 6DOF experiences on mobile hardware, as the user can view the scene from several perspectives. Another major benefit, the company said last year, is the ability to add perspective-correct specular lighting, which adds a level of realism usually considered impossible within a mobile processor’s modest compute budget.

Google has now released Seurat on GitHub, including documentation and source code for prospective developers.

Below you can see the same scene with and without Seurat: