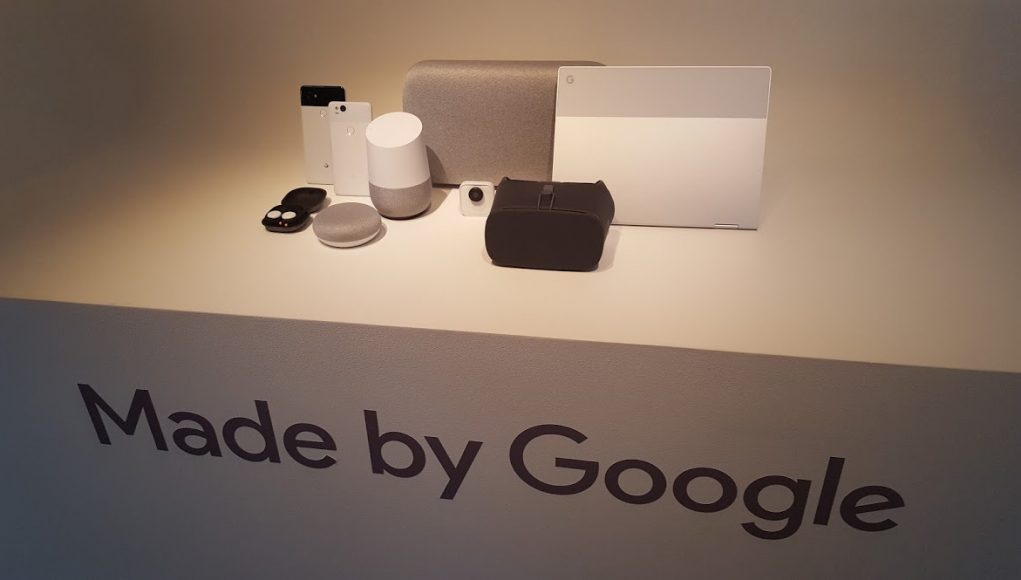

At Google’s big October 4th press conference, the company announced a new Pixel 2 phone and a range of new ambient computing devices powered by AI-enabled conversational interfaces, including the new Google Home Mini and Home Max speakers, the Google Clips camera, and wireless Pixel Buds. The Daydream View mobile VR headset received a major upgrade: vastly improved comfort and weight distribution, reduced light leakage, better heat management, cutting-edge aspherical fresnel lenses with improved acuity and a larger sweet spot, and a field of view 10-15 degrees wider than the previous version’s. It’s actually a huge upgrade, but VR received only a few brief moments during the two-hour keynote, which focused on Google’s AI-first design philosophy for its latest ambient computing hardware.

LISTEN TO THE VOICES OF VR PODCAST

I had a chance to sit down with Clay Bavor, Google’s Vice President for Augmented and Virtual Reality to talk about their latest AR & VR announcements as well as how Google’s ambient computing and AI-driven conversational interfaces fit into their larger immersive computing strategy.

YouTube VR is on the bleeding edge of Google’s VR strategy. VR180 livestream cameras can broadcast a 2D version that translates well to flat screens while also providing a more immersive stereoscopic 3D version for mobile VR headsets.

Google retired the Tango brand with the announcement of ARCore on August 29th, and Bavor explains that the team had to develop a number of algorithmic and technological innovations in order to standardize AR calibration across phones from all of its OEM partners.

Finally, Bavor reiterates that WebVR and WebAR are a crucial part of Google’s immersive computing strategy. Google showed its dedication to the open web by releasing experimental WebAR browsers for ARCore and ARKit so that web developers can build cross-platform AR apps. Bavor sees a future that evolves beyond the existing self-contained app model, but getting there requires a number of technological innovations, including contextually-aware ambient computing powered by AI as well as the Visual Positioning Service (VPS) announced at Google I/O. Google is also continuing to experiment with a number of productivity applications, but the effective visual acuity of current VR displays still needs to improve from roughly 20/100 to something closer to 20/40.
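To put the 20/100 versus 20/40 gap in display terms, here is a rough back-of-the-envelope sketch. It assumes the common rule of thumb that 20/20 vision resolves detail about 1 arcminute across, so 20/X acuity corresponds to X/20 arcminutes, and that a display needs about one pixel per resolvable detail. These are simplifying assumptions for illustration, not figures from Google; the function name `snellen_to_ppd` is hypothetical.

```python
def snellen_to_ppd(denominator: float, numerator: float = 20.0) -> float:
    """Approximate pixels per degree needed to match a Snellen acuity.

    Rule-of-thumb assumption (not a Google figure): 20/20 vision resolves
    1 arcminute, and one pixel is needed per resolvable detail.
    """
    mar_arcmin = denominator / numerator  # minimum angle of resolution, in arcminutes
    return 60.0 / mar_arcmin  # 60 arcminutes per degree


current = snellen_to_ppd(100)  # 20/100 acuity
target = snellen_to_ppd(40)    # 20/40 acuity
print(f"20/100 ~ {current:.0f} px/deg, 20/40 ~ {target:.0f} px/deg, "
      f"{target / current:.1f}x linear increase")
```

Under these assumptions, moving from 20/100 to 20/40 means going from about 12 to about 30 pixels per degree, a 2.5x increase in linear resolution (roughly 6x more pixels over the same field of view).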

After our interview, Bavor was excited to tell me how Google built a cloud-based, distributed physics simulator that modeled 4 quadrillion photons in order to design the hybrid aspherical fresnel lenses in the Daydream View. This should enable machine-learning-optimized approaches to designing VR optics in the future, and it will also likely have implications for VR physics simulations and, potentially, for delivering volumetric digital lightfields down the road.

Google’s vision of contextually-aware AI and ambient computing has a ton of privacy implications, many of them similar to my open questions about privacy in VR. I hope to open up a more formal dialog with Google to discuss these concerns, and potentially new concepts of self-sovereign identity and cryptocurrency-powered business models that go beyond its existing surveillance capitalism business model. AI conversational interfaces and ambient computing received the majority of attention at the press conference, with little emphasis on Google’s latest AR and VR announcements, but Google remains dedicated to its long-term vision of the power and potential of immersive computing.

Support Voices of VR

- Subscribe on iTunes

- Donate to the Voices of VR Podcast Patreon

Music: Fatality & Summer Trip