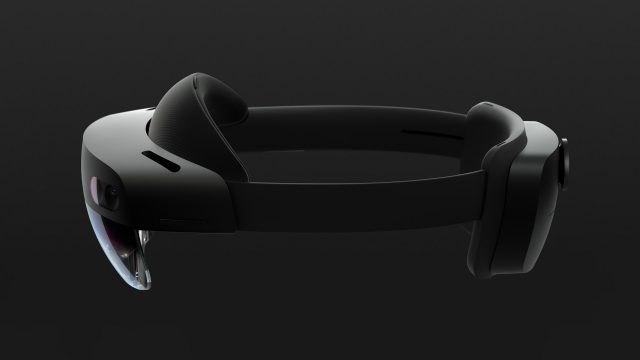

Yesterday at Mobile World Congress, Microsoft unveiled HoloLens 2, the next iteration of the company's enterprise-focused standalone AR headset. Microsoft is coming out of the gate strong with its fleet of partners, as well as a number of in-house apps that it says will make it easier for companies to connect, collaborate, and do things like learn on-the-job skills and troubleshoot work-related tasks. That's all well and good, but is the HoloLens 2 hardware truly a '2.0' step forward? That's the question that ran through my mind during my half-hour session strapped into the AR headset. The short answer: yes.

Stepping into the closed-off demo space at Microsoft's MWC booth, I was greeted by a pretty familiar mock-up of a few tables and some art on the wall to make it feel like a tastefully decorated home office. Lighting in the room was fairly muted, but bright enough to feel like a natural indoor setting.

I actually got the chance to run through two HoloLens demos back-to-back: one in the home office, and another in a much brighter space with more direct lighting, dedicated to showcasing a patently enterprise-focused demo built by Bentley, a company that deals in construction and infrastructure solutions. The bulk of my impressions come from the first demo, where I got to run through a number of the basic interactions introduced at the HoloLens 2 reveal here at MWC.

Fit & Comfort

Putting the headset on like a baseball cap, I tightened it snugly to my skull with the ratcheting knob in the back. Having overtightened it slightly, I turned the knob the other way, which produced a different click.

Although I'm not sure precisely how Microsoft arrived at the claim that it's "three times more comfortable" than the original HoloLens (they say comfort has been "measured statistically over population"), it offers a remarkably good fit, sporting a low-density, replaceable foam cushion that comfortably rests the front weight on the top of the forehead. The strap, made of a firm but flexible material, wraps around to fit snugly under what Wikipedia scholars refer to as the occipital bone. After giving my head a few good shakes, I was confident the headset was firmly in place even without the optional top strap. Over the 30-odd minutes I spent wearing HoloLens 2 across two demos, it stayed comfortable, and I suspect it could be worn for the full advertised two to three hours of active-use battery life without issue.

Once the device was on and comfy, I was prompted with a quick eye-tracking calibration scene that displayed a number of pinkish-purple gems, which popped in and out of the scene when I looked at them. Then I was set and ready to start HoloLensing.

Hand-tracking & Interactions

To my left, sitting on the table, was a little 3D model of a cartoony miniature city with a weather information display. Like at the on-stage reveal, moving my hand closer to the virtual object showed a white wireframe that offered a few convenient hand holds to grab so I could reposition, turn, and resize it. HoloLens 2 tracks your hands and individual fingers, so I tried to throw it for a loop with a few different grips, like an index finger-and-thumb pinch and a full-handed claw, but the headset was unfazed by the attempt. That said, I found more exaggerated grasping poses (more clearly discernible to the tracking) to be the easiest way to manipulate the room's various objects.

Brightness

Suffice it to say that HoloLens 2's optics work best when a room isn't flooded with light. The better-lit space predictably washed out some of the image's detail and solidity, but it's clear that the headset's optics are bright enough to be acceptably usable in a variety of indoor environments. Of course, I never got the chance to step outside into the Barcelona sun to see how it fares in the worst possible conditions, the true test of any AR display system.

Both demos had the headset at max brightness, which can be changed via a rocker switch on the left side. A similar rocker on the right side let me change the audio volume.

A Fitting Hummingbird

With my object-handling skills sorted, I then got a chance to meet the little hummingbird prominently featured in yesterday's unveiling. Materializing out of the wall, the intricate little bird twittered about until I was told to put out my open hand, beckoning it to fly over and hover just above my palm. While the demo was created primarily to show off the robustness of HoloLens 2's hand tracking, I couldn't help but feel that the little bird brought more to the table. As it flew to my open palm, I found myself paying closer attention to how my hand felt as the bird hovered over it, subconsciously expecting to feel the wind coming off its tiny wings. For a split second, my attention was drawn to a slight breeze in the room.

It wasn’t a staged ‘4D’ effect either. I later noticed that the whole convention floor had a soft breeze from the building’s HVAC system tasked with slowly fighting against thousands of human-shaped heaters milling about the show floor. For the briefest of moments that little hummingbird lit up whatever part of my brain is tasked with categorizing objects as a potentially physical thing.

Haptics aren't something HoloLens 2 can do; there's no controller or included haptic glove, so immersion is driven entirely by the headset's visuals and positional audio. Talking to Microsoft senior researcher Julia Schwarz, I learned that the designers behind the hummingbird portion of the demo loaded it with everything they had in the immersion department, making it arguably a more potent demonstration than the vibration of a haptic motor could produce (or ruin) on its own. It was a perfect storm of positional audio from a moving object, visually captivating movements from an articulated asset, and my prior expectation that a hummingbird wouldn't actually land on my hand the way it would for a Disney princess (which would have revealed it for the digital object it was). It also helped that the bird was small enough, and commanded enough attention, to stay entirely within my field of view (FOV) the whole time, which drove home the idea that it was really there above my hand. More on FOV in a bit.

Both the hummingbird and general object interaction demos show that HoloLens 2 has made definite strides toward a more natural input system, one that looks to shed the coarse 'bloom' and 'pinch' gestures developed for the original HoloLens. What I saw today still relies on some elements that need a tutorial to fully grasp, but being able to physically click a button or flip a switch the way you'd expect to moves interaction design toward where it needs to be: the ultimate 'anyone can do it' phase, when the hardware will eventually step out of the way.

Eye-tracking & Voice Input

With my bird buddy eventually dematerialized, I moved on to a short demo created specifically to show that the headset can combine eye-tracking and voice recognition in a single task.

I was told to look at a group of rotating gems, each of which popped when I looked directly at it and said the word "pop!" My brief time with the eye-tracking in HoloLens 2 left me with a good impression. I didn't have a way to measure its performance, but I've tried nearly every in-headset eye-tracking implementation, from the 2015-era Fove headset up to Tobii's new integration with the HTC Vive Pro Eye.

The last portion of the demo showed one of the most plainly practical uses I've seen for eye-tracking thus far: reactive text scrolling. A window appeared containing some basic informational text, and as my reading naturally reached the bottom of the window, it slowly started to scroll to reveal more. The faster I read, the faster it scrolled. Looking to the top of the window scrolled it back up automatically. It was simple, but extremely effective.