This week Leap Motion released ‘Orion’, a brand-new, made-for-VR hand tracking engine that works with the company’s existing devices. Time and again we’ve seen promo videos from the company showing great tracking performance, only to find the tracking less than ideal across the broad range of real-world conditions. Now, an independent video showing the Orion engine in action demonstrates Leap Motion’s impressive mastery of computer vision.

Leap Motion has always been neat, and it’s always worked fairly well. Unfortunately, in the world of input, “fairly well” doesn’t cut it. Even at 99% consistency, input becomes frustrating (imagine your mouse failing to click 1 out of 100 times). This has presented a major challenge to Leap Motion, which has taken on the admittedly difficult computer vision task of extracting a useful 3D model of your hands from a series of images.

The company has been working for nearly a year on a total revamp of its hand tracking engine, dubbed ‘Orion’, which it released this week in beta form for developers. The new engine works on existing Leap Motion controllers.

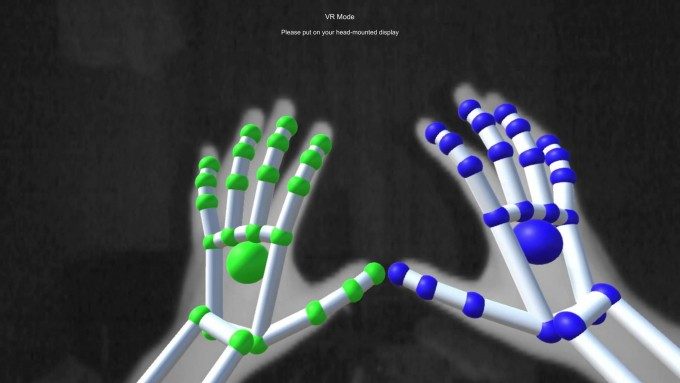

A video from YouTube user haru1688 shows Orion running on the Leap Motion controller mounted to an Oculus Rift DK2. Importantly, this video was not staged for ideal conditions (as official videos surely are). Even so, we see impressively robust performance.

[gfycat data_id=”MindlessOrderlyAssassinbug” data_autoplay=true data_controls=false]

Hands are detected with incredible speed, and tracking seems immune to rapid movement. We see almost no ‘popping’ of fingers, where the system misreads what it is seeing and shows the 3D representation of the fingers in impossible positions. There’s also now significantly less loss of tracking in general, even when fingers are occluded or the hands are seen edge-on.

Clapping still seems to be one area where the system gets confused, but Leap Motion is aware of this and is presumably continuing to refine the interaction before moving Orion out of beta.

Compare the above video to earlier footage by the same YouTube user using a much earlier version of Leap’s hand-tracking (v2.1.1). Here we see lots of popping and loss of the hands entirely.

[gfycat data_id=”GiantTepidDutchsmoushond” data_autoplay=true data_controls=false]

The original Leap Motion hand tracking engine was designed to look up at a user’s hands from below (with the camera sitting on a desk). But as the company has recognized the opportunity for its tech in the VR and AR sectors, it has become fully committed to a new tracking paradigm, in which the tracking camera is mounted on the user’s head.

Because the tracking engine wasn’t made specifically for this use-case, there are “four or five major failure cases attributed to [our original tracking] not being built from the ground up for VR,” Leap CEO Michael Buckwald told me.

“For Orion we had a huge focus on general tracking and robustness improvements. We want to let people do those sorts of complicated but fundamental actions like grabbing which are at the core of how we interact with the physical world around us but are hard to do in virtual reality,” he said.