Because VR can take over our entire reality, it can be great for entertainment. With AR, the hope is that the tech will be a transient and beneficial addition to reality rather than something that takes over your world completely. Figuring out how that works means first understanding how we can interact with AR information at a basic level, such as doing the same kinds of simple, information-driven tasks that you do hundreds of times per day with your smartphone. Leap Motion, a maker of hand-tracking software and hardware, has been experimenting with exactly that, and is teasing some very interesting results.

Smartphones are essential to our everyday lives, but the valuable information inside of them is constrained by small screens and is unable to interact directly with us or with the world around us. There’s widespread belief in the immersive computing sector that AR’s capacity for co-locating digital information with the physical world makes it the next big step for our smartphones.

Leap Motion has shown lots of cool stuff that can be done with their hand-tracking technology, but most of it is seen through the lens of VR. The company’s VP of Design, Keiichi Matsuda, however, has recently begun teasing prototypes for how the tech can be applied to AR, and the results are nothing short of a glimpse of what our smartphones will eventually become. Matsuda calls this prototype the ‘virtual wearable’:

Introducing Virtual Wearables pic.twitter.com/LPvknKBlnO

— Keiichi Matsuda (@keiichiban) March 22, 2018

The video is shot through an unidentified AR headset which is using Leap Motion’s camera-based hand-tracking module to understand the position of the user’s hands and fingers. Matsuda has envisioned some interesting affordances which uniquely work with the limitations of Leap Motion: the ‘flick tab’ menus are a smart stand-in for what most of us would think to represent as simple buttons; the visual lack of resistance helps reduce the expectation of tactile feedback. The footage also shows impressive occlusion, where the system understands the shape of the user’s hands and appropriately renders ‘clipping’ to make the AR menu feel like it really exists in the same plane as the user’s hands.

The design also draws on existing, well-established touchscreen interface affordances, like a line indicating the grab point of a sliding ‘drawer’ menu; it’s easy to see how this approach could be effectively used to convey and act upon the same sort of basic ‘notification’ type information that we frequently deal with on our smartphones.

Another video from Matsuda shows what the underlying hand-model, as tracked by Leap Motion, looks like to the system behind the scenes:

more beautiful hand tracking pic.twitter.com/dZkZOFnCJ9

— Keiichi Matsuda (@keiichiban) February 28, 2018

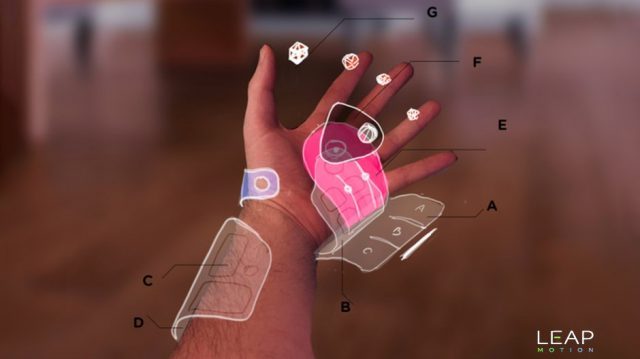

Leap Motion shared a sketch showing an expanded vision of the ‘virtual wearable’ concept:

Matsuda found his way to Leap Motion following the creation of two excellent short films which envision a future where AR is completely intertwined with our day-to-day lives: Augmented (hyper)Reality and its follow-up, HYPER-REALITY (both definitely worth a watch). Now, as Leap Motion’s VP of Design, he’s turning his ideas into (augmented) reality.

Leap Motion designers Barrett Fox and Martin Schubert have recently published a series of guest articles on Road to VR which are worth checking out: