ManoMotion, a computer vision and machine learning company, today announced it has integrated its smartphone-based gesture control with ARKit, Apple’s augmented reality developer tool. The integration makes it possible to bring basic hand tracking into AR using only the smartphone’s onboard processors and camera.

With Google and Apple gearing up for the augmented reality revolution with their respective software developer kits, ARCore and ARKit, developers are eager to see just how far smartphone-based AR can go. We’ve seen plenty of new use cases for both, including inside-out positional tracking for mobile VR headsets and some pretty mind-blowing experiments, but this is the first time we’ve seen hand tracking integrated into either AR platform.

VentureBeat got an early look at the company’s gesture input capabilities before ARKit support was added, with ManoMotion CEO Daniel Carlman telling the publication that it tracked “many of the 27 degrees of freedom (DOF) of motion in a hand.” Like the previous build, the new ARKit-integrated SDK can track depth and recognize familiar gestures such as swipes, clicks, taps, grabs, and releases, all with what ManoMotion calls “an extremely small footprint on CPUs, memory, and battery consumption.”

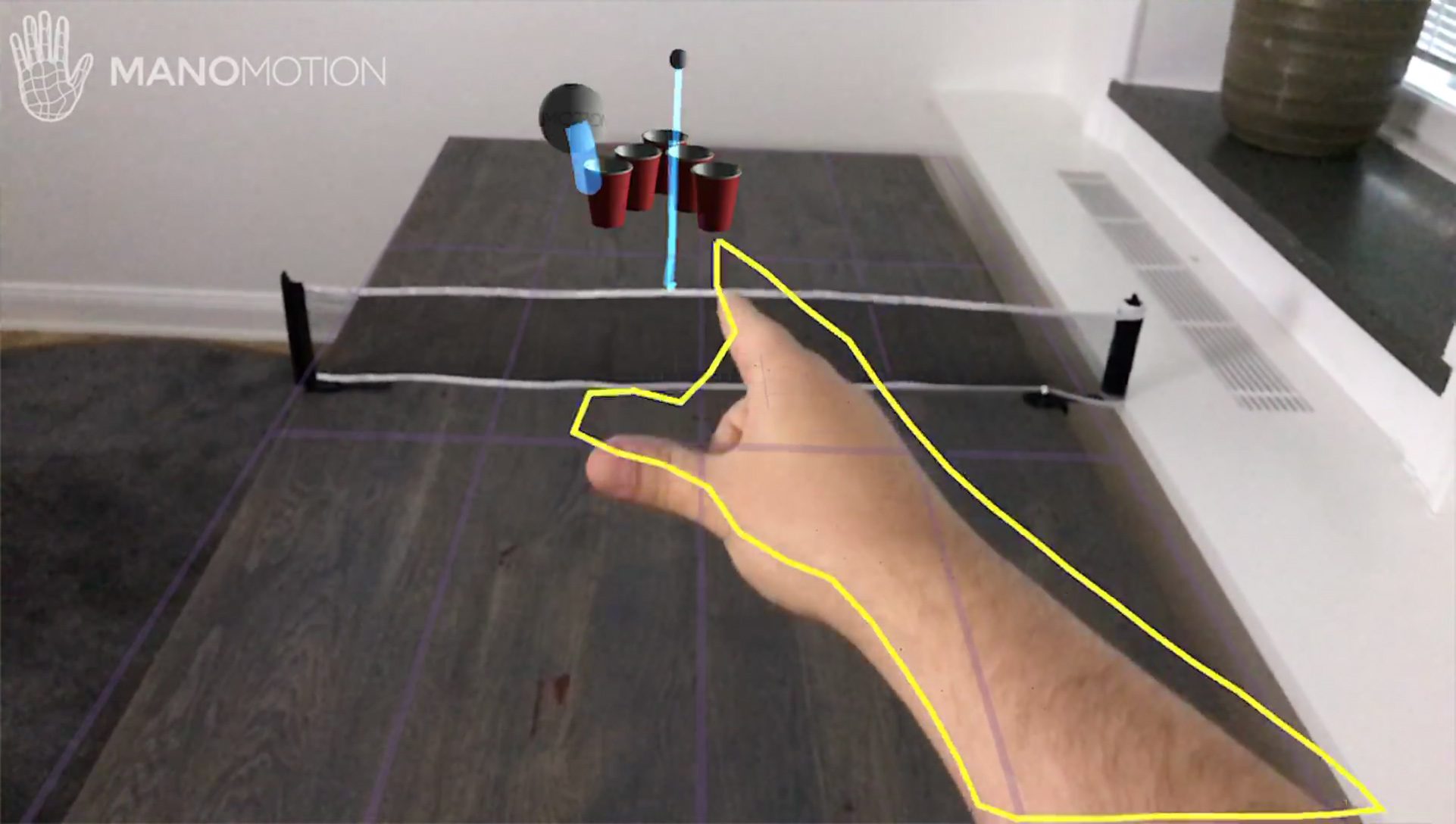

In ManoMotion’s video, the ARKit-driven app recognizes the user’s hand and responds to a flicking motion that sends a ping-pong ball into a cup, making full use of ARKit’s spatial mapping.

A simple game like beer pong may seem like a fairly banal use case, but being able to interact with the digital realm using your own two hands (or in this case, one hand) has much larger implications outside of games. AR devices like HoloLens and Meta 2 rely on gesture control to make their UIs fully interactive, which opens up a world of possibilities, from productivity tasks like placing and resizing windows to simply turning on Internet-connected lights in your house with a snap of the fingers. While neither Google nor Apple has announced any future AR headsets, early experiments like this on today’s mobile platforms, which by necessity lack expensive custom hardware, will likely help define the capabilities of AR headsets in the near future.

“Up until now, there has been a very painful limitation to the current state of AR technology – the inability to interact intuitively in-depth with augmented objects in 3D space,” said Carlman. “Introducing gesture control to the ARKit, and being the first in the market to show proof of this, for that matter, is a tremendous milestone for us. We’re eager to see how developers create and potentially redefine interaction in Augmented Reality!”

ManoMotion says ARKit integration will be included in its upcoming SDK build, which will be available for download from the company’s website “in the coming weeks.” Support will arrive first for Unity on iOS, with native iOS support following in subsequent updates.