EMG Input

While controllers and hand-tracking have proven to be useful input methods for XR today, once we move toward all-day headsets that we wear in public, we probably won’t want to swing our arms around or poke the air in front of us just to type a message or launch an application while on the bus.

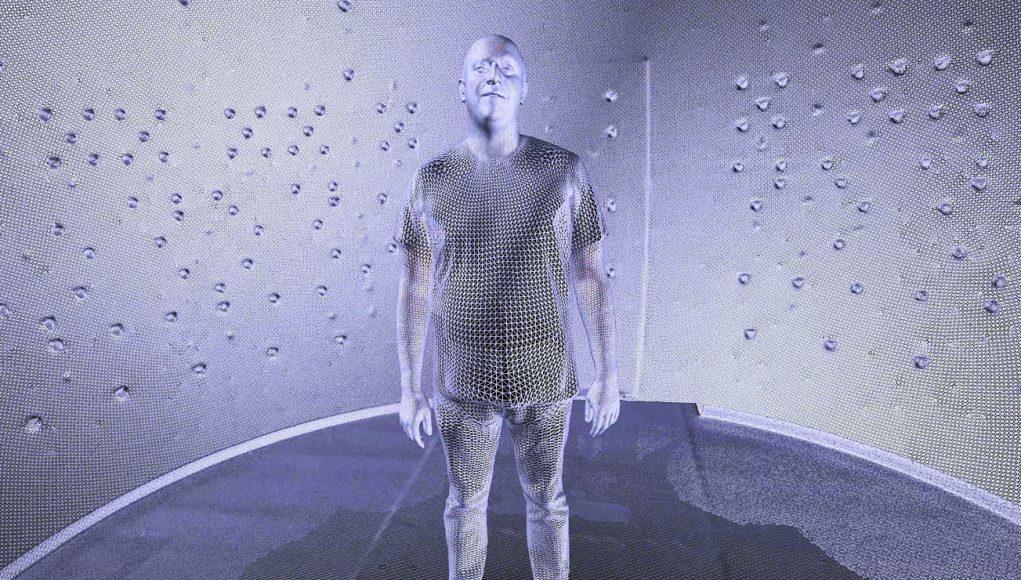

To that end, Meta has been researching a much more subtle and natural means of XR input: wrist-worn EMG (electromyography), which senses the electrical activity of the muscles that move the hand. The company detailed some of its work on a wrist-worn input device earlier this year, and at Connect last week it showed, for the first time, a prototype device being used for text input.

Though the floating-window interface on the right is just a simulation, Meta says the view on the left shows an actual prototype device driving the text input.

And while it’s slow today in its prototype form, Abrash believes it has huge potential and room for improvement; he calls it “genuinely unprecedented technology.”

Contextual AI

Meta is also working on contextual AI systems to make augmented reality more useful.

To help the system understand what the user intends to do, Meta used its detailed virtual recreation of an apartment as an underlying map, letting the glasses infer the user’s intent by observing where they are looking.

By anticipating intent, Abrash says, the user may need only a single input button, with the system choosing the correct action based on context, such as turning on a TV or a light. It’s easy to see how this ‘simple input with context’ approach dovetails nicely with the company’s concept of a wrist-mounted input device.
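To make the ‘simple input with context’ idea concrete, here is a minimal Python sketch under loose assumptions. Meta hasn’t described its system at this level of detail. Here the room map is a hypothetical list of objects with 3D positions and an associated action. “Intent” is approximated as the mapped object closest to the gaze ray, within an angular threshold, at the moment the single button is pressed. The names `SceneObject` and `resolve_button_press` and the 10-degree threshold are all illustrative.

```python
import math
from dataclasses import dataclass


@dataclass
class SceneObject:
    # Hypothetical room-map entry: a named object, its position in world
    # coordinates (metres), and the action a single button press triggers.
    name: str
    position: tuple[float, float, float]
    action: str


def gaze_angle(origin, direction, target):
    """Angle (radians) between the gaze direction and the ray from origin to target."""
    to_target = [t - o for t, o in zip(target, origin)]
    norm = math.hypot(*direction) * math.hypot(*to_target)
    if norm == 0:
        return math.pi  # degenerate case: treat as "not looking at it"
    cos = sum(d * v for d, v in zip(direction, to_target)) / norm
    return math.acos(max(-1.0, min(1.0, cos)))  # clamp against float error


def resolve_button_press(objects, gaze_origin, gaze_direction, max_angle_deg=10.0):
    """Return the action for the object the user is looking at, or None.

    Assumption (illustrative only): intent is the mapped object whose direction
    is closest to the gaze ray, provided it lies within max_angle_deg.
    """
    if not objects:
        return None
    best = min(objects, key=lambda o: gaze_angle(gaze_origin, gaze_direction, o.position))
    if math.degrees(gaze_angle(gaze_origin, gaze_direction, best.position)) > max_angle_deg:
        return None  # not looking at anything actionable
    return best.action


room = [
    SceneObject("tv", (2.0, 0.0, 1.0), "turn on TV"),
    SceneObject("lamp", (0.0, 2.0, 1.5), "turn on light"),
]
eye = (0.0, 0.0, 1.6)

print(resolve_button_press(room, eye, (1.0, 0.0, -0.3)))  # → turn on TV
print(resolve_button_press(room, eye, (0.0, 1.0, 0.0)))   # → turn on light
print(resolve_button_press(room, eye, (0.0, 0.0, 1.0)))   # → None (looking at the ceiling)
```

The nearest-angle rule keeps the example short. It stands in for whatever intent model Meta actually uses and does not describe that model.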

– – — – –

Abrash says this overview of some of Meta’s latest R&D projects is just a fraction of what’s being invested in, researched, and built. To reach the company’s ultimate vision of the metaverse, he says it’s “going to take about a dozen major technological breakthroughs […] and we’re working on all of them.”