Speaking at the IEDM conference late last year, Meta Reality Labs’ Chief Scientist Michael Abrash laid out the company’s analysis of how contemporary compute architectures will need to evolve to make possible the AR glasses of our sci-fi conceptualizations.

While there are some AR ‘glasses’ on the market today, none of them are truly the size of a normal pair of glasses (even a bulky pair). The best AR headsets available today, the likes of HoloLens 2 and Magic Leap 2, are still closer to goggles than glasses and are too heavy to be worn all day (not to mention the looks you’d get from the crowd).

If we’re going to build AR glasses that are truly glasses-sized, with all-day battery life and the features needed for compelling AR experiences, it’s going to require a “range of radical improvements, and in some cases paradigm shifts, in both hardware […] and software,” says Michael Abrash, Chief Scientist at Reality Labs, Meta’s XR organization.

That is to say: Meta doesn’t believe that its current technology—or anyone’s for that matter—is capable of delivering those sci-fi glasses that every AR concept video envisions.

But the company thinks it knows where things need to head in order for that to happen.

Abrash, speaking at the IEDM 2021 conference late last year, laid out the case for a new compute architecture that could meet the needs of truly glasses-sized AR devices.

Follow the Power

The core reason to rethink how computing should be handled on these devices comes from a need to drastically reduce power consumption to meet battery life and heat requirements.

“How can we improve the power efficiency [of mobile computing devices] radically by a factor of 100 or even 1,000?” he asks. “That will require a deep system-level rethinking of the full stack, with end-to-end co-design of hardware and software. And the place to start that rethinking is by looking at where power is going today.”

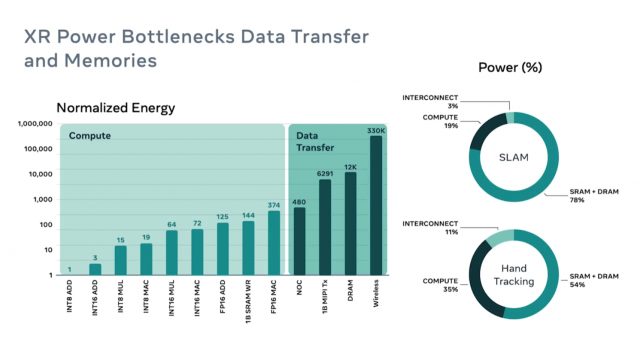

To that end, Abrash laid out a graph comparing the power consumption of low-level computing operations.

As the chart highlights, the most energy-intensive computing operations are in data transfer. And that doesn’t mean just wireless data transfer, but even transferring data from one chip inside the device to another. What’s more, the chart uses a logarithmic scale; according to the chart, transferring data to RAM uses 12,000 times the energy of the base unit (which in this case is adding two numbers together).
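To put that ratio in perspective, here is a rough back-of-the-envelope sketch. Only the 12,000x figure comes from the chart as described above; the function name `transfer_energy_share` and the workload mix (1,000 additions per RAM transfer) are hypothetical choices made purely for illustration.

```python
# Relative energy costs, measured in units of one add operation.
# Only the 12,000x RAM figure comes from the chart described in the article;
# the workload below is an illustrative assumption.
ADD_COST = 1
RAM_TRANSFER_COST = 12_000


def transfer_energy_share(num_adds: int, num_ram_transfers: int) -> float:
    """Return the fraction of total energy spent on RAM transfers."""
    compute = num_adds * ADD_COST
    transfer = num_ram_transfers * RAM_TRANSFER_COST
    total = compute + transfer
    return transfer / total if total else 0.0


# Hypothetical workload: 1,000 adds for every value moved to or from RAM.
share = transfer_energy_share(num_adds=1_000, num_ram_transfers=1)
print(f"Share of energy spent on RAM transfers: {share:.1%}")  # 92.3%
```

Even with a thousand arithmetic operations per memory access, this toy model puts more than nine-tenths of the energy into moving data, which is the kind of imbalance the chart is pointing at.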

Bringing it all together, the circular graphs on the right show that techniques essential to AR—SLAM and hand-tracking—use most of their power simply moving data to and from RAM.

“Clearly, for low power applications [such as in lightweight AR glasses], it is critical to reduce the amount of data transfer as much as possible,” says Abrash.

To make that happen, he says a new compute architecture will be required which—rather than shuffling large quantities of data between centralized computing hubs—more broadly distributes the computing operations across the system in order to minimize wasteful data transfer.

Compute Where You Least Expect It

A starting point for a distributed computing architecture, Abrash says, could be the many cameras that AR glasses need for sensing the world around the user. This would involve doing some preliminary computation on the camera sensor itself before sending only the most vital data across power-hungry data transfer lanes.

To make that possible, Abrash says it’ll take co-designed hardware and software, with the hardware built around a specific algorithm that is essentially hardwired into the camera sensor itself. That would allow some operations to be taken care of before any data even leaves the sensor.
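As a loose illustration of why on-sensor processing cuts data transfer, the sketch below compares sending a raw frame with sending only a handful of extracted points. It is not Meta's design: the brightness threshold is a stand-in for whatever algorithm would actually be hardwired into a sensor, and the names `on_sensor_extract` and `extracted_bytes`, the 64x64 frame, and the 2-byte coordinate size are all assumptions.

```python
from typing import List, Tuple

Frame = List[List[int]]  # 8-bit grayscale pixels, one byte each


def full_frame_bytes(frame: Frame) -> int:
    """Bytes sent if the raw frame leaves the sensor unprocessed."""
    return sum(len(row) for row in frame)


def on_sensor_extract(frame: Frame, threshold: int) -> List[Tuple[int, int]]:
    """Hypothetical on-sensor step: keep only coordinates of bright pixels.

    A simple threshold stands in for a real, hardwired vision algorithm.
    """
    return [
        (r, c)
        for r, row in enumerate(frame)
        for c, value in enumerate(row)
        if value >= threshold
    ]


def extracted_bytes(points: List[Tuple[int, int]], bytes_per_coord: int = 2) -> int:
    """Bytes sent if only (row, col) pairs leave the sensor."""
    return len(points) * 2 * bytes_per_coord


# Hypothetical 64x64 frame: dark except for four bright points of interest.
frame = [[0] * 64 for _ in range(64)]
for r, c in [(3, 5), (10, 40), (33, 12), (60, 60)]:
    frame[r][c] = 255

points = on_sensor_extract(frame, threshold=200)
print(full_frame_bytes(frame))   # 4096 bytes for the raw frame
print(extracted_bytes(points))   # 16 bytes for the extracted points
```

In this toy case, the sensor ships 16 bytes instead of 4,096. Real savings would depend entirely on the algorithm and the scene, but the principle is the same: the less data that crosses the chip boundary, the less energy goes to transfer.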

“The combination of requirements for lowest power, best requirements, and smallest possible form-factor, make XR sensors the new frontier in the image sensor industry,” Abrash says.
