MindMaze, a neurotechnology startup, is developing a simple, low-cost approach to face-tracking called Mask. The company claims it is compatible with any VR headset and can map significantly more lifelike expressions onto your virtual avatar.

I recently met with MindMaze at its San Francisco workspace to test Mask for myself and learn more about it.

Optical, computer-vision-based face-tracking is harder in VR because the headset blocks a significant portion of the face. Mask instead uses low-cost electrodes around the periphery of the headset’s foam padding to sense the electrical signals generated when you move the muscles in your face.

MindMaze CEO Tej Tadi told me that the embedded sensing hardware is very cheap and adds little to the headset’s overall manufacturing cost. The challenge, Tadi says, is not the hardware but the software that has to interpret the input.

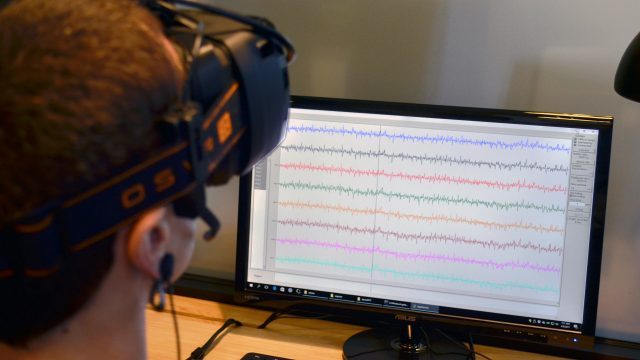

The electrodes, which make direct contact with your face through the headset’s foam interface, measure only electrical amplitude. The prototype of Mask that I tried had eight electrodes, so all the computer “sees” is eight incoming data streams that rise and fall on a graph. Tadi says MindMaze has applied its neurotech expertise to create an algorithm that reads that data and extracts a set of facial expressions from it.
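MindMaze hasn’t said how its algorithm works, so the following is only a minimal sketch of a common, generic first step for this kind of electrode (electromyography-style) data, not the company’s method. It assumes raw readings arrive as frames of eight values, one per electrode, and turns each fixed-size window of frames into one root-mean-square (RMS) amplitude per channel. The function name `rms_envelope` and the `window` parameter are hypothetical.

```python
import math
from typing import List, Sequence

NUM_CHANNELS = 8  # the prototype described in the article had eight electrodes


def rms_envelope(samples: Sequence[Sequence[float]], window: int) -> List[List[float]]:
    """Hypothetical helper: per-channel RMS amplitude over non-overlapping windows.

    samples: a sequence of frames, each holding one reading per electrode.
    Returns one 8-value feature vector per complete window; a trailing
    partial window is ignored.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    features = []
    for start in range(0, len(samples) - window + 1, window):
        chunk = samples[start:start + window]
        vec = []
        for ch in range(NUM_CHANNELS):
            # Squaring rectifies the signal so positive and negative swings both count.
            mean_sq = sum(frame[ch] ** 2 for frame in chunk) / window
            vec.append(math.sqrt(mean_sq))
        features.append(vec)
    return features


# Channel 0 swings between +2 and -2; the other seven channels are silent.
frames = [[2.0 if i % 2 == 0 else -2.0] + [0.0] * 7 for i in range(8)]
print(rms_envelope(frames, window=4))
```

Each output vector summarizes how strongly each electrode is active during that window, which is the kind of compact input a downstream expression classifier could work from.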

Neuro-bamboozling vs. the Real Deal

I should be clear up front: I’m extremely skeptical of neuro-bamboozling, which is when companies use ‘neuroscience’, ‘neurotechnology’, and other brain buzzwords to make it seem like they’re doing something more significant than they really are. A common example is a company telling you something like “you can fly a drone with your mind!” Ninety-nine percent of the time, that means they’ll slap some electrodes on your head and tell you to “concentrate” to make the drone go up and “relax” to make it go down. It’s highly binary and ultimately not very useful.

As near as I can tell, MindMaze is the real deal, at least with Mask (I haven’t seen its other products). When I strapped on the headset to try Mask for myself, even without any calibration, the canned expressions I made were quickly reflected on the face of an avatar representing me in the virtual world. When I smiled, it smiled. When I frowned, it frowned. When I winked, it winked. It was easily the best calibration-free tech of this sort that I’ve seen.

I was told the prototype currently supports 10 different facial expressions. Because these are canned poses, Mask can only approximate your expressions; it won’t capture the unique facial movements that make you, you, but it does cover the basics.
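To illustrate why canned poses can only approximate a face, here is a minimal sketch using nearest-template (nearest-centroid) classification, a generic textbook technique swapped in for illustration; it is not MindMaze’s algorithm. The four templates below (of the prototype’s 10 expressions) and all their 8-channel values are invented, and `TEMPLATES` and `nearest_pose` are hypothetical names.

```python
import math
from typing import Dict, Sequence

# Hypothetical templates: a typical 8-channel amplitude vector per canned pose.
# These values are made up for illustration, not measured data.
TEMPLATES: Dict[str, Sequence[float]] = {
    "neutral": [0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1],
    "smile":   [0.9, 0.8, 0.2, 0.1, 0.1, 0.2, 0.8, 0.9],
    "frown":   [0.2, 0.3, 0.9, 0.8, 0.8, 0.9, 0.3, 0.2],
    "wink":    [0.9, 0.9, 0.8, 0.1, 0.1, 0.1, 0.1, 0.1],
}


def nearest_pose(features: Sequence[float]) -> str:
    """Return the canned pose whose template is closest in Euclidean distance."""
    return min(TEMPLATES, key=lambda name: math.dist(features, TEMPLATES[name]))


# Two noticeably different smiles...
broad_smile = [1.0, 0.9, 0.3, 0.1, 0.1, 0.3, 0.9, 1.0]
lopsided_smile = [0.8, 0.6, 0.2, 0.1, 0.2, 0.2, 0.9, 0.9]
# ...both snap to the same canned pose.
print(nearest_pose(broad_smile), nearest_pose(lopsided_smile))  # smile smile
```

Whatever the real classifier looks like, any system that outputs one of a fixed set of poses will map distinct real expressions onto the same avatar face, which is the limitation described above.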

Beyond the 10 current expressions, the team says it’s working to suss even more out of the data, potentially including rudimentary eye-tracking. That wouldn’t be precise enough for uses like foveated rendering, but it could be good enough for expression mapping.