Mojo Vision is a company working on smart contact lenses, which it hopes will soon give users an unobtrusive display without the need to wear a pair of smart glasses.

CNET’s Scott Stein got a chance to go hands-on with a prototype at CES 2020 earlier this month, and although the company isn’t at the point just yet where it will insert the prototype tech into an unsuspecting journalist’s eyeballs, Mojo is adamant about heading in that direction; the team regularly wears the current smart contact lens prototype.

While Mojo maintains that its contact lenses are still years away from landing squarely on consumers’ eyeballs, the company is confident enough to say they’ll arrive sometime this decade. It expects the lenses to fall within the purview of optometrists, so users can have their microdisplay-laden lenses tailored to fit their eyes.

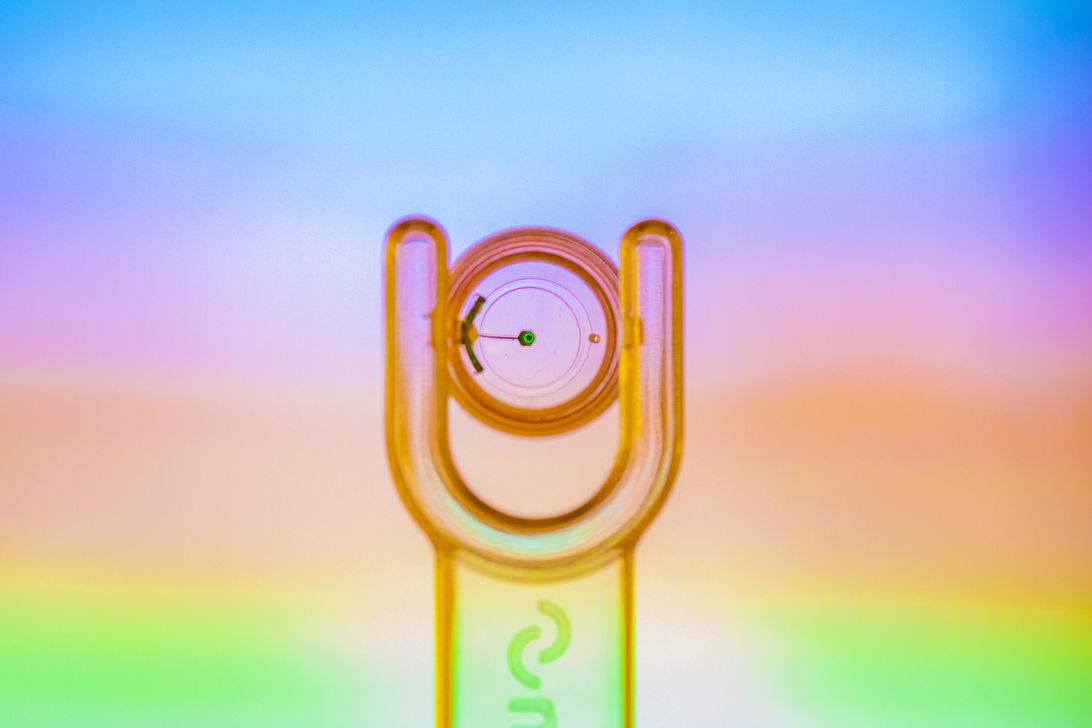

But just how ‘micro’ is that display supposed to be? Fast Company reports that Mojo’s latest and greatest squeezes 70,000 pixels into less than half a millimeter. Granted, it’s a green monochrome microLED aimed at the eye’s fovea, but it’s an impressive feat nonetheless.

On its way to consumers, Mojo says it’s first seeking FDA approval for its contacts as a medical device. The company says this version will display text, sense objects, track eye motion, and see in the dark to some degree, all intended to help users with degenerative eye diseases.

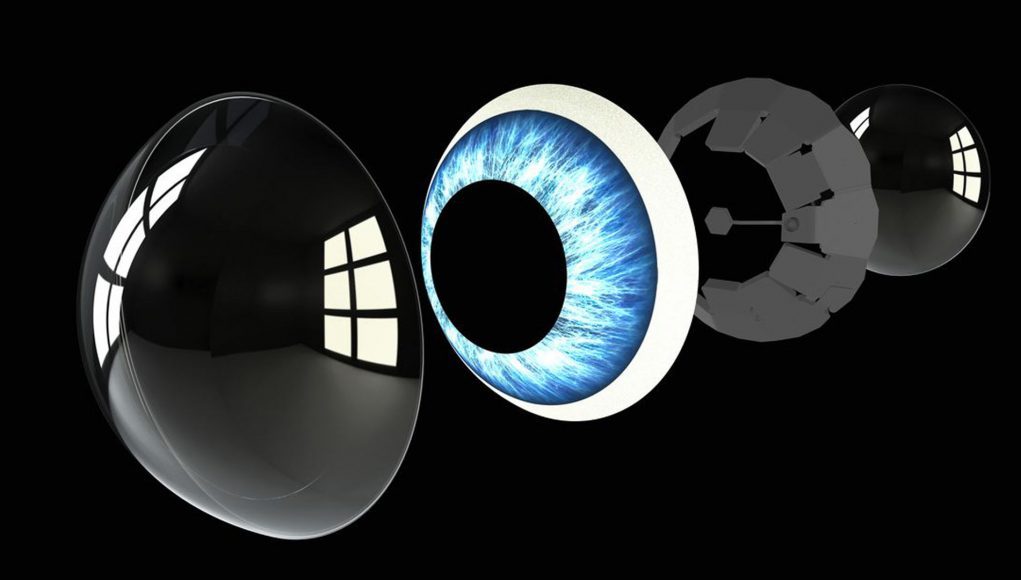

Fast Company reports that Mojo integrates a thin solid-state battery within the lens, which is meant to last all day and be charged wirelessly in something similar to an AirPods case when not in use. The longer-term goal, however, is continuous charging via a thin, necklace-like device. All of this tiny tech, which will also include a radio for tethering to a smartphone, will be covered with a painted iris.

Mojo also maintains that its upcoming version will have eye tracking and some amount of computer vision, two elements that separate smart glasses from augmented reality glasses.

Smart glasses overlay simple information onto the user’s field of view, but that information doesn’t interact naturally with the environment. Augmented reality, which is designed to insert digital objects and information seamlessly into reality, requires accurate depth mapping and machine learning. That typically means more processing power, bigger batteries, more sensors, and larger optics to provide a field of view wide enough to be useful. Whether Mojo’s lenses will be able to do that remains to be seen, but they’re at least off to a promising start as an essentially invisible pair of smart glasses.

Whatever the case, it appears investors are buying into Mojo Vision’s vision. The company has so far garnered $108 million in venture capital investments from the likes of Google’s Gradient Ventures, Stanford’s StartX fund, HP Tech Ventures, Motorola Solutions Venture Capital, and LG.