McCauley says MTS is a ‘vector’ system because the laser is steered by analog means. The laser isn’t confined to a quantized grid of possible positions; it can be pointed essentially anywhere within its field of view, limited only by how precisely you can apply the voltage that sets the mirror’s tilt. This means it can be highly precise, even at a great distance.
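To put rough numbers on that precision claim, here’s a small Python sketch of the geometry. This is our illustration, not McCauley’s. It relies on one standard optics fact: tilting a mirror by θ deflects the reflected beam by 2θ, so a small tilt error δθ moves the spot by roughly 2·d·δθ at distance d. The 10-microradian tilt error is a hypothetical figure, not an MTS specification.

```python
import math

FEET_TO_METRES = 0.3048

def spot_offset(distance_m: float, mirror_tilt_rad: float) -> float:
    """Lateral spot displacement on a plane distance_m away, for a mirror
    tilted mirror_tilt_rad from rest (the beam deflects by twice the tilt)."""
    return distance_m * math.tan(2.0 * mirror_tilt_rad)

def pointing_error(distance_m: float, tilt_error_rad: float) -> float:
    """Small-angle spot error near the centre of the field of view: ~2 * d * dtheta."""
    return 2.0 * distance_m * tilt_error_rad

# Hypothetical 10-microradian tilt error, evaluated at the ranges mentioned in the article.
TILT_ERROR_RAD = 10e-6
for feet in (20, 60):
    d = feet * FEET_TO_METRES
    print(f"{feet} ft: {pointing_error(d, TILT_ERROR_RAD) * 1000:.3f} mm")
```

Under that assumption the loop prints about 0.122 mm at 20 feet and 0.366 mm at 60 feet. The same angular error produces a spot error that grows linearly with range, so angular precision is what ultimately sets how well the beam stays on a distant marker.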


A look at a tiny MEMS mirror, less than 1 mm across, in motion.

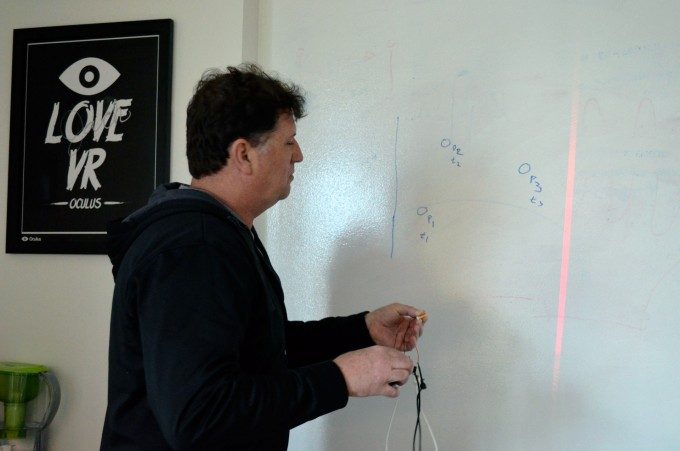

McCauley showed me the system locking onto a tracking point and maintaining it some 20 feet from the base station. He told me the laser can “easily go 60 feet and track” with the gain turned up (though he did mention it might not have been eye-safe at that amplitude!).

He described the strengths of the system compared to a camera-based approach.

“I can’t emphasize enough the computational simplicity and low cost of my system. No frame buffers, large memory arrays, USB 10.0 cables or anything like that,” says McCauley. “Just some control theory, a simple processor and some electronics. It’s also much, much faster than a camera will ever be. It is clearly simpler with much greater range and bandwidth.”

McCauley is quick to point out that much of MTS consists of off-the-shelf parts and algorithms originally developed for other purposes.

The MEMS mirror is complex to manufacture and, he says, requires a chip foundry, though such mirrors are already in production for a range of applications and could be cheap at scale.

“That little mirror can be flicked into position very quickly. It has little mass and it has a powerful actuator. The entire thing is machined onto a single piece of silicon.”

He calls the tracking algorithms, which identify and follow the reflective markers, “well known in the art” and says they were pioneered by Professor Kris Pister of UC Berkeley.
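The article doesn’t describe those algorithms, so what follows is not Pister’s method or McCauley’s. It is a sketch of conical-scan (dither) tracking, a long-established technique from radar and beam steering, chosen only to show how a marker can be followed using nothing but the intensity of the light bouncing back: no images and no frame buffers. Each update samples a small circle around the current aim point and nudges the aim toward the brighter side. The Gaussian return model, scan radius, gain and angle units are all hypothetical.

```python
import math

def return_intensity(x, y, marker, sigma):
    """Simulated retroreflected power: a Gaussian bump centred on the marker."""
    mx, my = marker
    d2 = (x - mx) ** 2 + (y - my) ** 2
    return math.exp(-d2 / (2.0 * sigma ** 2))

def conical_scan_step(center, marker, sigma, radius, gain, n_samples=8):
    """One tracking update: sample a circle of the given radius around the aim
    point, then move the aim toward the intensity-weighted centroid of the samples."""
    cx, cy = center
    total = sx = sy = 0.0
    for k in range(n_samples):
        phi = 2.0 * math.pi * k / n_samples
        ox, oy = radius * math.cos(phi), radius * math.sin(phi)
        w = return_intensity(cx + ox, cy + oy, marker, sigma)
        total += w
        sx += w * ox
        sy += w * oy
    if total < 1e-12:  # marker lost: no usable return signal, hold position
        return center
    return (cx + gain * sx / total, cy + gain * sy / total)

def track(marker, start=(0.0, 0.0), sigma=1.0, radius=0.5, gain=4.0, steps=60):
    """Run repeated scan updates against a stationary (hypothetical) marker."""
    aim = start
    for _ in range(steps):
        aim = conical_scan_step(aim, marker, sigma, radius, gain)
    return aim

print(track(marker=(0.8, -0.6)))  # converges to approximately (0.8, -0.6)
```

With these settings each update roughly halves the remaining aiming error once the beam is near the marker. A real system would also have to deal with sensor noise, marker motion and the mirror’s own dynamics.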

“Why nobody [applied this tech] for VR/MOCAP, I do not know. Perhaps nobody thought of it recently but it’s 16 years old and very established. They use these mirrors for optical switches in fiber optic bundles,” McCauley told me. “Sometimes people get stuck in a mode of thinking about a problem. If you learned only to work with cameras then you will use a camera. I’m not a camera guy so I would not consider using a camera so I have to find some other way of doing it.”

There’s more that can be done with MTS beyond what was demonstrated. For one, the laser that’s tracking the object can be modulated to transmit data to the headset. That means MTS could work (like Lighthouse does now) as a self-contained ‘dumb’ system that doesn’t need to be connected to a host PC or to a headset. It could simply broadcast the tracking data through the laser to be received by whatever is on the other end, whether that’s a VR headset tethered to a PC or a mobile headset. In this scenario, the MTS base station doesn’t need any knowledge about the object it is tracking; it just needs to aim well and shoot the right data at it.
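The article doesn’t say how MTS would encode data on the beam. As a purely illustrative sketch, assume simple on/off keying with Manchester coding, a common choice for optical links because every bit contains a transition (which helps the receiver recover timing) and the laser spends equal time on and off. The frame layout, a 16-bit sequence number plus two float32 pointing angles, is hypothetical.

```python
import struct

FRAME_FORMAT = "<Hff"  # hypothetical frame: uint16 sequence number, two float32 angles

def encode_frame(seq, azimuth, elevation):
    """Pack a tracking frame and Manchester-encode it into laser on/off chips
    (IEEE 802.3 convention: bit 0 -> on-off, bit 1 -> off-on)."""
    payload = struct.pack(FRAME_FORMAT, seq, azimuth, elevation)
    chips = []
    for byte in payload:
        for i in range(7, -1, -1):  # most significant bit first
            bit = (byte >> i) & 1
            chips.extend((0, 1) if bit else (1, 0))
    return chips

def decode_frame(chips):
    """Reverse encode_frame: chip pairs -> bits -> bytes -> frame fields."""
    if len(chips) % 16:
        raise ValueError("incomplete frame")
    data = bytearray()
    for start in range(0, len(chips), 16):
        byte = 0
        for i in range(start, start + 16, 2):
            pair = (chips[i], chips[i + 1])
            if pair == (0, 1):
                bit = 1
            elif pair == (1, 0):
                bit = 0
            else:
                raise ValueError("invalid Manchester symbol")
            byte = (byte << 1) | bit
        data.append(byte)
    return struct.unpack(FRAME_FORMAT, bytes(data))

chips = encode_frame(7, 0.25, -0.5)
print(len(chips), decode_frame(chips))
```

The 10-byte frame becomes 160 chips. Because the receiver only has to detect light/no-light transitions, it needs no knowledge of the base station beyond the agreed frame format, which fits the ‘dumb’ broadcast model described above.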

McCauley also supposes the system can achieve full 6DOF tracking from a single marker when combined with data from an IMU in the tracked object. He admits he isn’t 100% sure of the math for this and says he has someone working on it. My gut tells me this might be technically possible but would probably lack sufficient correction for IMU drift to be useful. It’s a seemingly moot point anyway, as additional markers are simple to add.
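To see why drift is the sticking point, consider the simplest case (our illustration, not McCauley’s analysis): a gyroscope with a small constant bias $b_g$ integrates that bias into an orientation error that grows linearly with time unless something external corrects it. With a hypothetical bias of $0.01^\circ/\mathrm{s}$:

$$
\Delta\theta(t) = \int_0^t b_g \, d\tau = b_g t, \qquad 0.01^\circ/\mathrm{s} \times 60\,\mathrm{s} = 0.6^\circ, \qquad 0.01^\circ/\mathrm{s} \times 3600\,\mathrm{s} = 36^\circ
$$

An IMU’s accelerometer can correct pitch and roll against gravity, but heading (yaw) has no such built-in reference, which is where a single-marker approach would need to find its correction.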