Positional audio for VR experiences, where noises sound as if they are coming from the correct direction, has long been understood as an important part of making VR immersive. But knowing which direction sounds are coming from is only one part of the immersive audio equation. Getting that directional audio to interact in realistic ways with the virtual environment itself is the next challenge, and getting it right can make VR spaces feel far more real.

Positional audio in some form is integrated into most VR applications today (some use better integrations and mixes than others). It tells you about the direction of various sound sources, but it tells you nothing about the environment in which the sound is located. That is something we are unconsciously tuned to understand: our ears and brain interpret direct sounds mixed in with reverberations, reflections, diffractions, and more complex audio interactions that change based on the shape of the environment around us and the materials in it. Sound alone can give us a tremendous sense of space even without a corresponding visual component. Needless to say, getting this right is important to making VR maximally immersive, and that’s where physically-based audio comes in.

Physically-based audio is a simulation of virtual sounds in a virtual environment, which includes both directional audio and audio interactions with scene geometry and materials. Traditionally these simulations have been too resource-intensive to run quickly and accurately enough for real-time gaming. NVIDIA has dreamt up a solution which runs those calculations on its powerful GPUs, fast enough, the company says, for real-time use even in high-performance VR applications. In the video heading this article, you can hear how much information can be derived about the physical shape of the scene from the audio alone. Definitely use headphones to get the proper effect; it’s an impressive demonstration, especially, to me, toward the end of the video, when occlusion is demonstrated as the viewpoint moves around the corner from the sound source.

That’s the idea behind the company’s VRWorks Audio SDK, which was released today during the GTC 2017 conference; it’s part of the company’s VRWorks suite of tools to enhance VR applications on NVIDIA GPUs. In addition to the SDK, which can be used to build positional audio into any application, NVIDIA is also making VRWorks Audio available as a plugin for Unreal Engine 4 (and we’ll likely see the same for Unity soon), to make it easy for developers to begin working with physically-based audio in VR.

The company says that VRWorks Audio is the “only hardware-accelerated and path-traced audio solution that creates a complete acoustic image of the environment in real time without requiring any ‘pre-baked’ knowledge of the scene. As the scene is loaded by the application, the acoustic model is built and updated on the fly. And audio effect filters are generated and applied on the sound source waveforms.”

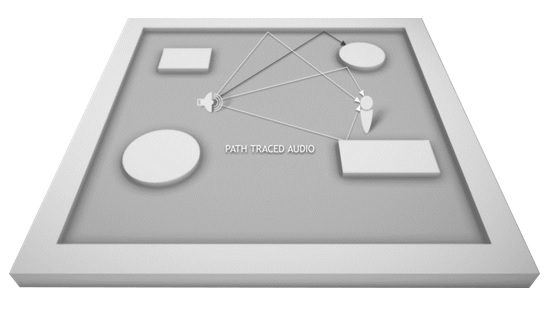

VRWorks Audio repurposes the company’s OptiX ray-tracing engine, which is typically used to render high-fidelity physically-based graphics. For VRWorks Audio, the system generates invisible rays representing sound wave propagation, tracing each ray from the sound’s origin to the various surfaces it interacts with, and eventually to its arrival at the listener.
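To make the ray idea concrete, here is a minimal Python sketch of geometric acoustics in 2D: one direct path plus one bounce off a single flat wall, found by mirroring the source across the wall. This is not the VRWorks Audio or OptiX API, and it is far simpler than a real path tracer; the function names, the `wall_gain` value, and the 1/distance falloff are all assumptions chosen for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value for air at room temperature


def distance(a, b):
    """Euclidean distance between two 2D points given as (x, y) tuples."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def path_contribution(length_m, surface_gain=1.0):
    """Return (delay in seconds, amplitude gain) for one propagation path.

    Assumes simple 1/distance spreading loss, clamped so that paths
    shorter than 1 m do not produce a gain above surface_gain.
    """
    delay = length_m / SPEED_OF_SOUND
    gain = surface_gain / max(length_m, 1.0)
    return delay, gain


def trace_paths(source, listener, wall_y=0.0, wall_gain=0.7):
    """Direct path plus one specular bounce off a wall along y = wall_y.

    wall_gain is a hypothetical fraction of amplitude kept after the
    bounce; a real system would derive it from the surface material.
    """
    direct = path_contribution(distance(source, listener))
    # Mirror the source across the wall. The straight line from the
    # mirrored source to the listener has the same length as the
    # bounced path from the real source.
    image = (source[0], 2.0 * wall_y - source[1])
    reflected = path_contribution(distance(image, listener), wall_gain)
    return [direct, reflected]


if __name__ == "__main__":
    for delay, gain in trace_paths(source=(0.0, 3.0), listener=(4.0, 3.0)):
        print(f"delay {delay * 1000:.2f} ms, gain {gain:.3f}")
```

Each (delay, gain) pair describes one echo of the source signal arriving at the listener. A full simulation would trace many rays in 3D, follow multiple bounces, and test for occlusion, which is the kind of workload the article describes being handed to the GPU.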

Road to VR is a proud media sponsor of GTC 2017.