Multi-Resolution Shading

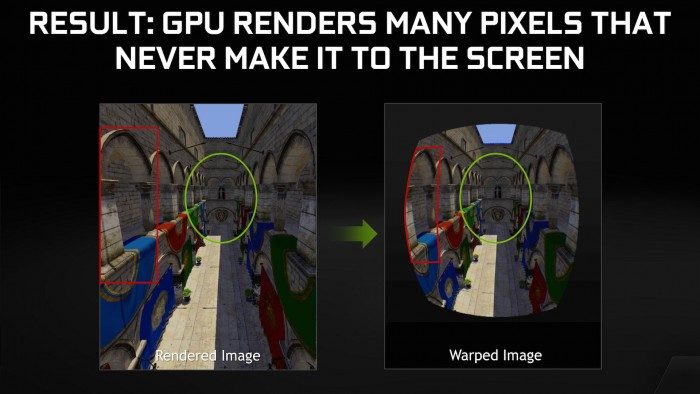

One feature common to all the emerging VR headsets is that they have lenses between you and the screen. The lenses give you a wider field of view and enable you to focus comfortably, but they also cause significant distortions in the image. To compensate for this, the VR runtime software performs a counter-distortion: it takes the frames rendered by a game and warps them into a nonlinear, oddly curved format before presenting them on the display. When you view this distorted image through the lenses, it appears normal.

The problem is that this warping pass significantly compresses the edges of the image, while leaving the center alone or even slightly expanding it. In order to get full detail at the center, you have to render at a higher resolution than the display—only to throw away most of those pixels during the warping pass.
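
To make the edge compression concrete, here is a minimal sketch that assumes a simple polynomial radial model for the counter-distortion. The model, the function names, and the coefficients `k1` and `k2` are illustrative assumptions, not the values used by any particular headset or VR runtime. It estimates how many rendered pixels the warp consumes per display pixel along the radial direction, at a given distance from the lens center.

```python
def source_radius(r_display, k1=0.22, k2=0.24):
    """Radius in the rendered frame that the warp samples for a display radius.

    Both radii are normalized so that 0 is the lens center and 1 is the edge.
    k1 and k2 are hypothetical distortion coefficients, chosen for illustration.
    """
    return r_display * (1.0 + k1 * r_display**2 + k2 * r_display**4)


def sampling_density(r_display, k1=0.22, k2=0.24):
    """Rendered pixels consumed per display pixel along the radial direction.

    This is the derivative of source_radius with respect to r_display.
    A value of 1.0 means no compression; larger values mean that more
    rendered pixels are squeezed into each display pixel.
    """
    return 1.0 + 3.0 * k1 * r_display**2 + 5.0 * k2 * r_display**4


if __name__ == "__main__":
    for r in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(f"r = {r:.2f}: {sampling_density(r):.2f} rendered px per display px")
```

Under these made-up coefficients the density is exactly 1.0 at the center and rises steadily toward the edge, which is the pattern described above: the center is left alone while the periphery is oversampled and then compressed.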

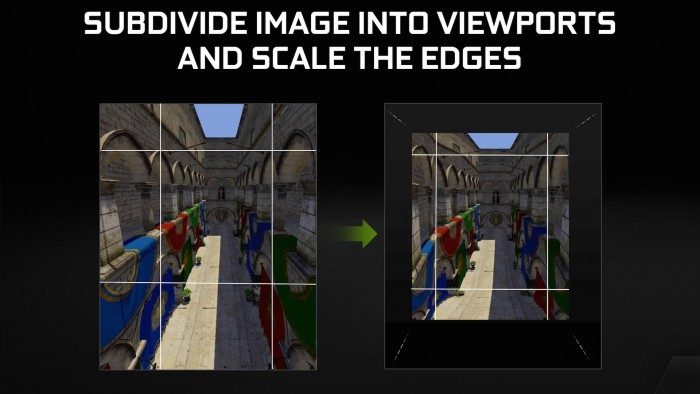

Ideally, we would render directly to the final warped image. But GPUs can’t handle this kind of nonlinear distortion natively—their rasterization hardware is designed around the assumption of linear perspective projections.

However, NVIDIA Maxwell GPUs, including the GeForce GTX 900 series and Titan X, have a hardware feature called “multi-projection” that enables us to very efficiently rasterize geometry into multiple viewports within a single render target at once. These viewports can have arbitrary shapes and sizes. So, we can split our rendered image up into a few different viewports, keeping the center one its usual size, but shrinking the outer ones. This effectively forms a multi-resolution render target that we can draw into with a single pass, as efficiently as an ordinary render target.
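
As a rough illustration of the viewport split, the sketch below lays out a 3x3 grid of viewports inside one render target: the center viewport keeps its usual size, and the outer rows and columns are shrunk. The 3x3 arrangement, the `Viewport` type, and the `center_fraction` and `outer_scale` parameters are assumptions made for this example; the text above only says that the image is split into "a few" viewports. This is plain layout arithmetic and does not call any graphics API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Viewport:
    x: int
    y: int
    width: int
    height: int


def split_axis(full, center_fraction, outer_scale):
    """Return the three band lengths (outer, center, outer) along one axis."""
    center = round(full * center_fraction)
    # Unscaled size of one outer band; an odd remainder loses one pixel here.
    margin = (full - center) // 2
    outer = round(margin * outer_scale)
    return [outer, center, outer]


def multires_viewports(width, height, center_fraction=0.6, outer_scale=0.5):
    """Lay out a 3x3 grid of viewports, packed tightly, in row-major order."""
    widths = split_axis(width, center_fraction, outer_scale)
    heights = split_axis(height, center_fraction, outer_scale)
    viewports = []
    y = 0
    for h in heights:
        x = 0
        for w in widths:
            viewports.append(Viewport(x, y, w, h))
            x += w
        y += h
    return viewports


def pixel_count(viewports):
    """Total number of pixels shaded across all viewports."""
    return sum(v.width * v.height for v in viewports)
```

For a hypothetical 1000x1000 target with the default parameters, the center viewport stays at 600x600 and the packed target shrinks to 800x800, so the pass shades 640,000 pixels instead of 1,000,000. The actual savings depend entirely on the split chosen.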

That, in a nutshell, is what we call multi-resolution shading. It allows us to effectively render at full resolution in the center of the image, and reduce resolution in the periphery, in one pass. This better approximates the shading rate of the warped image that will eventually be displayed. In other words, it avoids rendering a ton of extra pixels that weren’t going to make it to the display anyway, and gives you a substantial performance boost with no perceptible reduction in image quality.

Like VR SLI, multi-res shading will require developers to integrate the technique in their games and engines—it’s not something that can be turned on automatically by a driver. Beyond simply enabling multi-res rendering in the main pass, engine developers will also have to modify any postprocessing passes that operate on the multi-res render target, such as SSAO, deferred shading or depth of field effects.
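
One reason postprocessing passes need changes is that a texture coordinate in the multi-res render target no longer corresponds linearly to a position in the conventional image. The sketch below shows the kind of remapping such a pass might apply along one axis, assuming a hypothetical three-band layout (shrunken outer band, full-size center band, shrunken outer band). The function names and the `center_fraction` and `outer_scale` parameters are assumptions for illustration, not part of any NVIDIA API.

```python
def _bands(center_fraction, outer_scale):
    """Return (margin, packed outer band size, packed total size) for one axis."""
    margin = (1.0 - center_fraction) / 2.0   # outer band size in the linear image
    lo = margin * outer_scale                # outer band size after shrinking
    total = 2.0 * lo + center_fraction       # packed extent before normalizing
    return margin, lo, total


def linear_to_multires(u, center_fraction=0.6, outer_scale=0.5):
    """Map u in [0, 1] of the conventional image to [0, 1] of the multi-res target."""
    margin, lo, total = _bands(center_fraction, outer_scale)
    if u < margin:                            # first outer band, shrunk
        s = u * outer_scale
    elif u <= 1.0 - margin:                   # center band, unscaled
        s = lo + (u - margin)
    else:                                     # second outer band, shrunk
        s = lo + center_fraction + (u - (1.0 - margin)) * outer_scale
    return s / total


def multires_to_linear(v, center_fraction=0.6, outer_scale=0.5):
    """Inverse of linear_to_multires along one axis."""
    margin, lo, total = _bands(center_fraction, outer_scale)
    s = v * total
    hi = lo + center_fraction
    if s < lo:
        return s / outer_scale
    elif s <= hi:
        return margin + (s - lo)
    else:
        return (1.0 - margin) + (s - hi) / outer_scale
```

A pass such as SSAO or depth of field that samples neighboring pixels would need a mapping of this kind, applied on both axes, so that an offset measured in the conventional image lands on the right texel in the packed target, particularly where a filter footprint crosses a viewport boundary.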

We are working with a few key partners to develop multi-res shading, and are seeing some very promising initial results.