NVIDIA’s Deep Learning Supersampling (DLSS) technology uses AI to increase the resolution of rendered frames by taking a smaller frame and intelligently enlarging it. The technique has been lauded for its ability to render games at high resolution much more efficiently than rendering natively at that resolution. Now the latest version, DLSS 2.1, includes VR support and could bring a huge boost to fidelity in VR content.

DLSS is a technology that allows more detail to be packed into each frame by increasing rendering efficiency. It leverages hardware-accelerated AI operations on NVIDIA’s RTX GPUs to analyze each rendered frame and reconstruct a new, higher-resolution version of it.

The goal is to match the level of detail of a frame rendered natively at the target resolution while doing the work more efficiently. Doing so frees up graphical processing power for other things, like better lighting, higher-quality textures, or simply a higher framerate.

For instance, a game with support for DLSS may render its native frame at 1,920 × 1,080 and then use DLSS to up-res the frame to 3,840 × 2,160. In many cases this is faster and preserves a nearly identical level of detail compared to natively rendering the frame at 3,840 × 2,160 in the first place.
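To put rough numbers on that example, here is a minimal sketch that compares the pixel counts of the two resolutions. The function name `pixel_count` is made up for illustration, and pixel count is only a crude proxy for rendering cost: it ignores the cost of the DLSS pass itself and any work that doesn't scale with resolution.

```python
def pixel_count(width, height):
    """Number of pixels in a frame of the given dimensions."""
    return width * height

# Resolutions taken from the example in the article.
internal = pixel_count(1920, 1080)  # frame the game actually renders
output = pixel_count(3840, 2160)    # frame DLSS reconstructs

ratio = output / internal

print(f"Internal render: {internal:,} pixels")  # 2,073,600
print(f"DLSS output:     {output:,} pixels")    # 8,294,400
print(f"Output has {ratio:.0f}x the pixels of the internal render")  # 4x
```

In other words, the game in this example shades only a quarter of the pixels it ends up displaying.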

Because latency must be kept to a minimum, VR already has a high bar for framerate. PC VR headsets expect games to render consistently at 80 or 90 FPS. Holding that framerate requires a trade-off between visual fidelity and rendering efficiency, because a game rendering 90 frames per second has half as much time to complete each frame as a game rendering 45 frames per second.
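The time budget per frame is simply one second divided by the framerate. A quick sketch of that arithmetic follows; the helper name `frame_budget_ms` is hypothetical, and 144 is included because it is the top refresh rate of Valve’s Index.

```python
def frame_budget_ms(fps):
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / fps

for fps in (45, 80, 90, 144):
    print(f"{fps:>3} FPS -> {frame_budget_ms(fps):.2f} ms per frame")

# Output:
#  45 FPS -> 22.22 ms per frame
#  80 FPS -> 12.50 ms per frame
#  90 FPS -> 11.11 ms per frame
# 144 FPS -> 6.94 ms per frame
```

At 90 FPS a game has about 11 ms to finish each frame, half of the roughly 22 ms it would have at 45 FPS.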

NVIDIA has announced that the latest version, DLSS 2.1, now supports VR. This could mean substantially better looking VR games that still achieve the necessary framerate.

For instance, a VR game running on Valve’s Index headset could natively render each frame at 1,440 × 800 and then use DLSS to up-res the frame to meet the headset’s display resolution of 2,880 × 1,600. By initially rendering each frame at a reduced resolution, more of the GPU’s processing power can be spent elsewhere, like on advanced lighting, particle effects, or draw distance. In the case of Index, which supports refresh rates up to 144Hz, the extra efficiency could instead be used to raise the framerate, thereby reducing latency further still.
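As a back-of-the-envelope illustration of that trade-off, the sketch below compares how many pixels per second the game would have to shade when rendering natively at 90Hz versus rendering at the reduced resolution at 144Hz. This is an assumption-laden estimate rather than a benchmark: it ignores the cost of the DLSS pass and any CPU or geometry work that doesn't scale with pixel count, and the names are made up for illustration.

```python
def pixels_per_second(width, height, fps):
    """Pixels the game must shade each second at a given resolution and framerate."""
    return width * height * fps

# Index figures from the article: full display resolution vs. reduced render resolution.
native_90 = pixels_per_second(2880, 1600, 90)    # native rendering at 90Hz
reduced_144 = pixels_per_second(1440, 800, 144)  # reduced resolution at 144Hz

print(f"Native at 90Hz:   {native_90:,} pixels/s")    # 414,720,000
print(f"Reduced at 144Hz: {reduced_144:,} pixels/s")  # 165,888,000
print(f"Reduced/native:   {reduced_144 / native_90:.0%}")  # 40%
```

Under these simplifying assumptions, even at 144Hz the reduced-resolution render shades well under half as many pixels per second as native rendering at 90Hz, which is where the headroom for higher framerates or richer effects would come from.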

NVIDIA hasn’t shared much about how DLSS 2.1 will work with VR content, or whether there are any caveats unique to VR. And since DLSS needs to be added on a per-game basis, we don’t yet have any functional examples of it being used with VR.

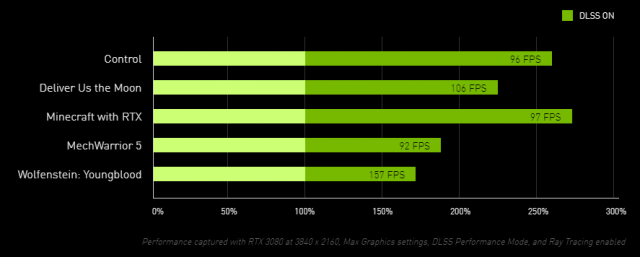

However, DLSS has received quite a bit of praise in non-VR games. In some cases, DLSS can even look better than a frame natively rendered at the same resolution. The folks at Digital Foundry have an excellent overview of what DLSS means for non-VR content.

It isn’t clear yet when DLSS 2.1 will launch, but it seems likely that it will be released in tandem with NVIDIA’s latest RTX 30-series cards, which are set to ship starting with the RTX 3080 on September 17th. Our understanding is that DLSS 2.1 will also be available on the previous-generation RTX 20-series cards.

Way back in 2016 NVIDIA released a demo called VR Funhouse which showed off a range of the company’s VR-specific rendering technologies. We’d love to see a re-release of the demo incorporating DLSS 2.1—and how about some ray-tracing while we’re at it?