In his latest presentation at Oculus Connect 5, Oculus Chief Scientist Michael Abrash took a fresh look at the five-year VR technology predictions he made at OC3 in 2016. He believes his often-referenced key predictions are “pretty much on track,” albeit delayed by about a year, and that he “underestimated in some areas.”

Optics & Displays

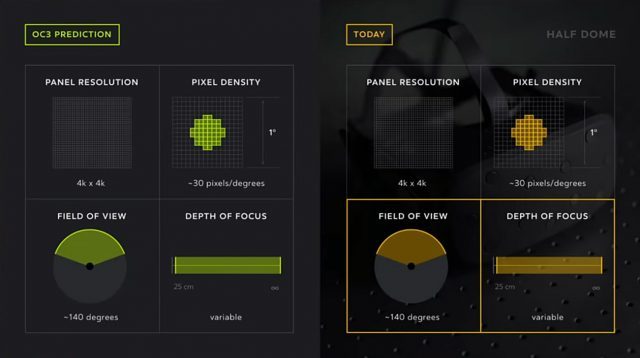

Revisiting each area of technology in turn, Abrash began with optics and displays. His 2016 predictions for headset capabilities in 2021 were 4K × 4K resolution per eye, a 140-degree field of view, and variable depth of focus.

“This is an area where I clearly undershot,” he said, noting that Oculus’ own Half Dome prototype, shown earlier this year, already meets two of these specifications (a 140-degree FOV and variable focus), and that display panels matching the predicted resolution have already been shown publicly.
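For a rough sense of scale, the predicted per-eye resolution can be converted into angular resolution. The sketch below is a simplified back-of-the-envelope calculation, assuming that "4K" means 4,000 horizontal pixels and that they are spread evenly across the full 140-degree field of view. Real headset lenses distribute pixels unevenly, and each eye covers less than the full binocular FOV, so the true per-eye figure would be somewhat higher. The 60 pixels-per-degree target is the commonly cited rule of thumb for 20/20 acuity (about one arcminute per pixel), not a number from the talk.

```python
def average_pixels_per_degree(pixels_across: int, fov_degrees: float) -> float:
    """Average angular resolution, assuming pixels are spread evenly over the FOV."""
    if fov_degrees <= 0:
        raise ValueError("field of view must be positive")
    return pixels_across / fov_degrees


def pixels_needed(target_ppd: float, fov_degrees: float) -> int:
    """Pixels across needed to reach a target pixels-per-degree over the whole FOV."""
    return round(target_ppd * fov_degrees)


# Assumed inputs: '4K' taken as 4,000 pixels; 140-degree FOV from the prediction.
ppd = average_pixels_per_degree(4000, 140)
print(f"{ppd:.1f} pixels per degree")  # 28.6 pixels per degree

# ~60 ppd is the usual rule of thumb for 20/20 vision (an assumption, not from the talk).
print(pixels_needed(60, 140), "pixels across for ~60 ppd")  # 8400 pixels across for ~60 ppd
```

On this crude measure, even the predicted panel lands at roughly half of the often-quoted retinal figure, which is why "retinal resolution" remains a longer-term goal.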

Abrash highlighted ongoing research into varifocal displays, saying that Oculus had made “significant progress in solving the problem” with DeepFocus, an AI-driven renderer that can achieve “natural, gaze-contingent blur in real time,” and that the team would publish its findings in the coming months.
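A gaze-contingent renderer has to decide how much blur each part of the scene should get, given the depth the viewer is focusing on. DeepFocus does this with a neural network; the sketch below is not that method, but a textbook geometric-optics approximation of the blur a real eye would see: the angular size of the blur circle (in radians) is roughly the pupil diameter (in metres) multiplied by the defocus (in dioptres). The 4 mm pupil and the scene depths are hypothetical values chosen for illustration.

```python
import math


def defocus_blur_degrees(pupil_mm: float, focus_m: float, object_m: float) -> float:
    """Approximate angular diameter of the defocus blur circle, in degrees.

    Small-angle geometric-optics rule: blur (radians) ~= pupil diameter (m)
    multiplied by defocus (dioptres). Pass math.inf for optical infinity.
    """
    # Defocus is the difference in vergence between the object and the focus plane.
    defocus_dioptres = abs(1.0 / object_m - 1.0 / focus_m)
    blur_radians = (pupil_mm / 1000.0) * defocus_dioptres
    return math.degrees(blur_radians)


# Hypothetical scene: the eye tracker reports fixation on an object 0.5 m away.
focus_distance = 0.5
for depth in (0.5, 1.0, 2.0, math.inf):
    blur = defocus_blur_degrees(4.0, focus_distance, depth)
    print(f"object at {depth} m -> blur ~{blur:.2f} deg")
```

Objects at the fixated depth get no blur, and blur grows with dioptric distance from it; a renderer would use a per-pixel value like this to size its blur kernel.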

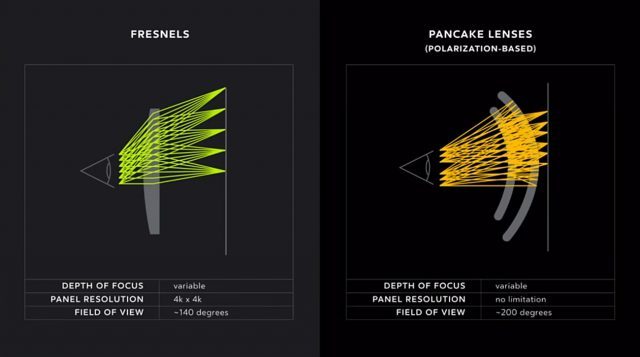

Beyond Half Dome, Abrash briefly mentioned two potential solutions for future optics: pancake lenses and waveguides. Like Fresnel lenses, pancake lenses aren’t a new invention, but they are “only now becoming truly practical.” By using polarization-based reflection to fold the optical path into a small space, pancake lenses could potentially reach retinal resolution and a 200-degree field of view, Abrash says, though only with a tradeoff between form factor and field of view. Because of the way pancake lenses work, “you can get either a very wide field of view or a compact headset […] but not both at the same time,” he said.
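The space saving from folding can be estimated with simple arithmetic. In the common polarization-folded (pancake) arrangement, light crosses the gap between a partial mirror and a reflective polarizer three times before it exits, so the cavity can be roughly a third as deep as an unfolded path of the same length. The sketch below is an idealised calculation under that assumption: it ignores element thickness and refractive index, the path lengths are made up, and it says nothing about the field-of-view tradeoff Abrash described.

```python
def folded_gap_mm(optical_path_mm: float, passes: int = 3) -> float:
    """Physical cavity gap needed for a given optical path when light crosses it `passes` times.

    Idealised: ignores element thickness, refractive index and any other optics.
    """
    if passes < 1:
        raise ValueError("passes must be at least 1")
    return optical_path_mm / passes


# Hypothetical path lengths, for illustration only.
for path in (30.0, 45.0):
    print(f"{path} mm of optical path -> ~{folded_gap_mm(path):.0f} mm gap when folded")
```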

Waveguides, on the other hand, are being accelerated by AR research and development, theoretically have no resolution or field-of-view limitations, and are only a few millimetres thick. They could eventually make possible an incredibly lightweight headset with any desired field of view at retinal resolution, though that is still many years away.

Foveated Rendering

Moving on to graphics, Abrash’s key prediction in 2016 was that foveated rendering would be a core technology within five years. He has extended that prediction by a year, saying he now expects it to happen within four years from now, and that the approach will likely be enhanced by deep learning. He showed an image with 95% of its pixels removed, with the remaining pixels growing sparser with distance from the point of focus. The rest of the image was reconstructed efficiently by a deep learning algorithm, and the result was impressively similar to the full-resolution original, seemingly close enough to fool your peripheral vision.

Foveated rendering ties closely to eye tracking, which Abrash considered the riskiest of his 2016 predictions. Today he is much more confident that solid eye tracking will be achieved (Half Dome already goes part of the way there), but this prediction has also been extended by a year.
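The sparse-sampling half of that foveated demo can be sketched in a few lines. This is a toy, not the method Abrash showed: it assumes a simple falloff in sampling density of the form 1 / (1 + (r / falloff_px)²) around a gaze point that would, in a real system, come from the eye tracker. The `falloff_px` parameter and that curve are illustrative choices, it keeps about 5% of pixels to mirror the figure in the talk, and it leaves out the deep-learning reconstruction entirely.

```python
import math
import random


def foveated_mask(width, height, gaze_x, gaze_y,
                  keep_fraction=0.05, falloff_px=20.0, seed=0):
    """Return a height x width grid of booleans: True means the pixel is rendered.

    Sampling probability peaks at the gaze point and decays with distance,
    scaled so that about `keep_fraction` of all pixels are kept on average.
    """
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")

    # Relative sampling density: 1 at the gaze point, falling off with distance.
    weights = [[1.0 / (1.0 + (math.hypot(x - gaze_x, y - gaze_y) / falloff_px) ** 2)
                for x in range(width)] for y in range(height)]
    target = keep_fraction * width * height

    def expected_kept(scale):
        # Expected number of kept pixels when each probability is capped at 1.
        return sum(min(1.0, scale * w) for row in weights for w in row)

    # Find an upper bound on the scale factor, then bisect to hit the target.
    lo, hi = 0.0, 1.0
    while expected_kept(hi) < target:
        hi *= 2.0
    for _ in range(40):
        mid = (lo + hi) / 2.0
        if expected_kept(mid) < target:
            lo = mid
        else:
            hi = mid

    # Draw each pixel independently with its (capped) probability.
    rng = random.Random(seed)
    return [[rng.random() < min(1.0, hi * w) for w in row] for row in weights]


# Usage: a 128 x 128 frame with the viewer looking at the centre.
mask = foveated_mask(128, 128, gaze_x=64, gaze_y=64)
kept = sum(v for row in mask for v in row)
print(f"rendered {kept} of {128 * 128} pixels ({kept / (128 * 128):.1%})")
```

A reconstruction step (in the demo, a neural network) would then fill in the missing pixels from this sparse sample.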

Spatial Audio

Spatial audio was the next topic. Abrash conceded that his prediction that personalised Head-Related Transfer Functions (HRTFs, which capture how the unique geometry of each person’s ears and head shapes the way they perceive the soundfield around them) would become a standard part of the home VR setup within five years might also need to be extended. Even so, he described how a recent demo convinced him that “audio Presence is a real thing.” The technology clearly works already, but the personalised HRTF used for that demo required a 30-minute ear scan followed by “a lengthy simulation,” so it is not yet suitable for a consumer product.
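Once an HRTF exists, applying it is the comparatively cheap part: its time-domain form, the head-related impulse response (HRIR), is convolved with the sound once per ear. The sketch below shows only that rendering step, using made-up one- and three-tap HRIRs as stand-ins for the personalised ones Abrash described; producing real responses is the slow ear-scan-plus-simulation stage, and real HRIRs are measured or simulated per listener and per direction and are far longer.

```python
def convolve(signal, impulse_response):
    """Direct (full-length) linear convolution of two sequences."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def binauralize(mono, hrir_left, hrir_right):
    """Render a mono source for headphones by filtering it with one HRIR per ear."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Hypothetical toy HRIRs for a source off to the listener's left: the right ear
# hears it later (two-sample delay) and quieter (half amplitude).
left, right = binauralize([1.0, 2.0], hrir_left=[1.0], hrir_right=[0.0, 0.0, 0.5])
print(left)   # [1.0, 2.0]
print(right)  # [0.0, 0.0, 0.5, 1.0]
```

The interaural delay and level difference in the toy filters are two of the cues a real HRTF encodes; personalisation matters because the finer spectral cues depend on each listener's ear shape.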

Controllers & Input

Regarding controllers, Abrash stood by his prediction that Touch-like controllers will remain the primary input device in the near future (alongside hand tracking). After showing a short clip of one of Oculus’ haptic glove experiments, he revised his earlier view that haptic feedback for the hands wasn’t even on the distant horizon, saying that “we’ll have useful haptic hands in some form within ten years.”

Ergonomics & Form Factor

On the subject of ergonomics, Abrash referred to the growing technology overlap between VR and AR research, noting that future VR headsets will not only be wireless but could also be made much lighter by adopting the two-part architecture already used by some AR devices, in which heavy components such as the battery and compute hardware are moved into a puck that sits in your pocket or on your waist. He said this companion device could also link wirelessly to the headset for complete freedom of motion.

Even so, optical limitations remain the main bottleneck keeping VR headsets from approaching a ski-goggle-like design, though advances in pancake and waveguide optics could make for significantly slimmer headsets.
